TL;DR:

- Pathlight, co-founded by CEO Alex Kvamme and CTO Trey Doig, set out to extract insights from customer conversations using NLP technologies.

- Early NLP solutions were ineffective, requiring extensive training and pre-work.

- Pathlight pivoted to LLMs like ChatGPT and rebuilt its product for automated reviews, marking a significant improvement.

- The company invested heavily in R&D, focusing on LLM integration and developing proprietary tools.

- Pathlight’s key lesson was adopting a multi-LLM strategy, allowing flexibility in choosing models and providers.

- They built tools for model provisioning, testing, and deployment, as well as prompt engineering and customer interaction.

- Computing infrastructure challenges in GenAI forced Pathlight to rethink unit economics.

- They also built an in-house LLM system, based on Llama-2, to analyze extensive audio data.

- The future may see LLMs becoming more hardware-agnostic, reducing reliance on high-end GPUs.

- Market fragmentation and model specialization are expected, providing adaptable GenAI solutions.

Main AI News:

Pathlight was founded six years ago, at a time when businesses were looking for better ways to understand their customer interactions. Co-founded by CEO Alex Kvamme and CTO Trey Doig, the company set out to give businesses insights derived from their customer conversations. Back then, it relied on the most advanced natural language processing (NLP) technologies available. However, these tools fell short of expectations.

“We were leveraging early machine learning frameworks to do keyword and topic detection, sentiment analysis,” reveals CEO Kvamme. “None of them were very effective and required extensive training and pre-work. Frankly, they were peripheral features for us, not making significant strides.”

The turning point arrived with the advent of large language models (LLMs) like OpenAI’s Generative Pre-trained Transformer (GPT). When OpenAI introduced ChatGPT just ten months ago, Kvamme recognized it as a game-changing moment.

“It was an immediate epiphany,” he tells Datanami during an interview. “We had already developed a product for customers to manually review conversations within our platform, so we essentially rebuilt it from the ground up, powered by LLMs for automated reviews.”

The triumph of this initial endeavor led Pathlight into an intensive year of research and development in the realm of LLMs. As they integrated these models into their conversation intelligence platform, the company accumulated a wealth of knowledge about harnessing this new technology and even developed a suite of proprietary tools.

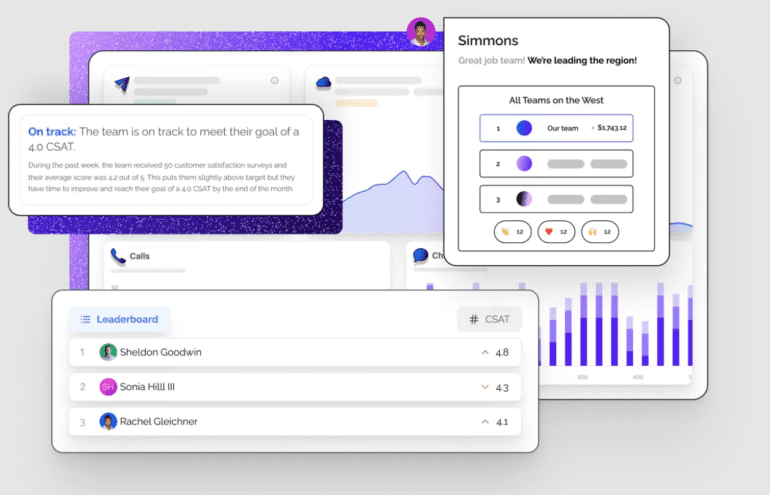

One of the pivotal lessons for Kvamme was the importance of embracing a hybrid or multi-LLM strategy, granting Pathlight the flexibility to switch LLM models and providers as needed.

“For summarization, we might turn to ChatGPT. Tagging could be Llama-2, hosted internally. Custom questions may be routed to Anthropic,” he explains. “Our perspective is that we want to excel in being multi-LLM and LLM-agnostic because that’s our superpower. It’s what allows us to scale and maintain consistency.”

While ChatGPT may perform admirably today, tomorrow it could deliver erratic responses. Moreover, some clients exhibit reluctance to share data with OpenAI, requiring Pathlight to identify the most suitable model for their needs.
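The routing idea described above can be sketched in a few lines. This is a minimal illustration, not Pathlight's implementation: the `Router` class, the backend names (`hosted-a`, `hosted-b`, `self-hosted`), and the stub backends are all hypothetical, and a real system would call actual provider SDKs where the stubs are.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, List

# A backend is any callable that takes a prompt and returns text.
Backend = Callable[[str], str]


@dataclass
class Router:
    """Hypothetical task router: each task has an ordered list of backends."""
    backends: Dict[str, Backend]
    routes: Dict[str, List[str]]

    def run(self, task: str, prompt: str,
            blocked: FrozenSet[str] = frozenset()) -> str:
        # Try each preferred backend in order, skipping any the client
        # has ruled out and falling through when a backend fails.
        for name in self.routes[task]:
            if name in blocked:
                continue
            try:
                return self.backends[name](prompt)
            except RuntimeError:
                continue
        raise RuntimeError(f"no usable backend for task {task!r}")


def stub(name: str) -> Backend:
    """Stand-in for a real provider call; only echoes the prompt."""
    def call(prompt: str) -> str:
        return f"[{name}] {prompt}"
    return call


router = Router(
    backends={n: stub(n) for n in ("hosted-a", "hosted-b", "self-hosted")},
    routes={
        "summarize": ["hosted-a", "self-hosted"],
        "tag": ["self-hosted"],
        "custom_question": ["hosted-b", "self-hosted"],
    },
)

print(router.run("summarize", "Summarize this call."))
# A client that will not share data with one provider gets the fallback.
print(router.run("summarize", "Summarize this call.",
                 blocked=frozenset({"hosted-a"})))
```

Keeping the task-to-model mapping in data rather than in code is what makes swapping a provider a configuration change instead of a rewrite.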

This level of adaptability was hard-won. Pathlight designed its own tools to automate tasks such as model provisioning, hosting, testing, and deployment. The company also developed tools for prompt engineering and for interacting with AI agents at the customer level.

“The layers we are building, these are standard in regular SaaS, but they have not existed in LLMs until now,” Kvamme affirms.

Building its own tools to integrate GenAI into the business wasn’t the company’s initial goal, but necessity dictated the course. Existing tools were often either unavailable or too immature, leaving Pathlight with no choice but to craft its own solutions.

In the world of Software as a Service (SaaS), computing infrastructure is typically a well-resolved issue. Need more processing power? Just scale up your AWS EC2 capacity. With serverless offerings, scaling occurs automatically to match peak demand and economize during lulls.

However, GenAI operates by a different set of rules. The demand for high-end GPUs is soaring, and compute expenses are substantial. SaaS veterans like Kvamme find themselves reevaluating unit economics.

“I’ve been in the SaaS business for a while, and I’ve never had to contemplate unit economics this deeply,” Kvamme admits. “I’ve had to engage in more profound analysis than I have in many years to ensure we’re not losing money due to transaction costs.”
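The kind of per-transaction arithmetic Kvamme describes might look like the sketch below. Every number in it is a hypothetical placeholder chosen for illustration (token counts, per-1,000-token prices, and revenue per review); none comes from Pathlight or from any provider's price list.

```python
def cost_per_review(input_tokens: int, output_tokens: int,
                    price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Model cost of one automated review, priced per 1,000 tokens."""
    return (input_tokens / 1000 * price_in_per_1k
            + output_tokens / 1000 * price_out_per_1k)


def gross_margin(revenue: float, cost: float) -> float:
    """Fraction of revenue left after paying the model cost."""
    return (revenue - cost) / revenue


# Hypothetical inputs: a 4,000-token transcript, a 500-token review,
# and made-up prices and revenue per review.
cost = cost_per_review(4000, 500, price_in_per_1k=0.01, price_out_per_1k=0.03)
margin = gross_margin(revenue=0.10, cost=cost)
print(f"cost per review: ${cost:.3f}, gross margin: {margin:.0%}")
```

Because the cost scales with every conversation processed, a small change in the per-token price or the transcript length moves the margin directly, which is why this differs from the largely fixed infrastructure costs of traditional SaaS.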

Pathlight’s journey also led the company to build an in-house LLM system capable of analyzing vast volumes of raw audio data. Analyzing over 2 million hours of audio in the cloud within a reasonable timeframe was unattainable, so the company built its own system based on Llama-2 for the task.

Matching the right model to the right task emerged as a critical element in building a profitable business with GenAI. Like many early adopters, Pathlight discovered this through trial and error.

“It feels like we’re using a Ferrari to go grocery shopping for many of our tasks,” Kvamme observes.

The encouraging news is that as technology advances on both hardware and software fronts, businesses won’t rely solely on high-end GPUs or costly “God models” like GPT-4 for every task.

“I foresee a future where LLMs become more aligned with commodity hardware,” states CTO Doig. “Departing from the extreme high-end GPU requirements for scalable operations, I believe it will become a relic of the past.”

The industry is embracing innovative approaches like quantization, reducing model sizes to run effectively on platforms like the Apple M2 chip. This trend coincides with a fragmentation of the LLM market, promising more diverse and capable GenAI solutions for businesses, including Pathlight.
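To make the quantization idea concrete, here is a minimal sketch of one common scheme, symmetric 8-bit quantization, applied to a short list of weights. It is an illustration only: the function names are hypothetical, and production toolchains use more elaborate variants (per-channel or per-block scales, 4-bit formats, and so on).

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate floats from the quantized integers."""
    return [v * scale for v in q]


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Each weight now needs 1 byte instead of 4 (float32), at the cost of
# a rounding error of at most half a quantization step per weight.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Storing one byte per weight instead of four is the basic reason a quantized model can fit in the memory of a laptop-class chip, in exchange for a small, bounded loss of precision.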

“You could have LLMs that excel in text generation, much like we’re already witnessing in code generation,” Doig predicts. “This fragmentation and specialization of models will persist, making them smaller and more adaptable to the abundant CPU power available today.”

In conclusion, GenAI represents a formidable technology with immense potential for extracting insights from vast troves of unstructured text. It introduces a new gateway to interacting with computers, reshaping markets in the process. However, effectively integrating GenAI into a functioning business is a complex endeavor.

“The underlying truth is that building a demo has never been easier,” Kvamme reflects. “A truly impressive demo. However, scaling up is more intricate and challenging. This dynamic tension is what makes it interesting.”

He continues, “I find it more enjoyable than frustrating. It’s akin to building on quicksand at times. These technologies evolve rapidly. So, when I speak to our customers considering DIY projects, they often remark on how easy it is to create a demo.”

Conclusion:

Pathlight’s strategic pivot toward GenAI technology exemplifies a broader industry shift toward harnessing the potential of advanced language models. By leveraging LLMs and adopting a versatile multi-LLM approach, they have not only enhanced their capacity to deliver valuable insights but have also future-proofed their business operations. This journey underscores the critical importance of adaptability and continuous innovation in the dynamic landscape of AI. As GenAI continues to evolve, with a focus on smaller, more efficient models, it promises to democratize access to AI solutions, opening up new avenues for businesses across various sectors to harness the power of natural language processing. The lessons drawn from Pathlight’s experience provide a blueprint for organizations looking to stay competitive and relevant in an increasingly AI-driven world.