TL;DR:

- Recent advances in Neural Radiance Fields (NeRFs) and the 3D Gaussian Splatting (GS) framework have driven improvements in 3D graphics and perception.

- Current efforts primarily focus on quasi-static shape alterations, necessitating complex steps like meshing and physics modeling in visual content creation.

- Keeping simulation and rendering separate often leads to discrepancies between simulated and displayed results, unlike the natural world, where physical properties and appearance are interconnected.

- PhysGaussian, developed by researchers at UCLA, Zhejiang University, and the University of Utah, integrates physics into 3D Gaussians.

- The “what you see is what you simulate” (WS2) philosophy guides PhysGaussian to faithfully capture Newtonian dynamics.

- It endows 3D Gaussian kernels with mechanical and kinematic attributes, ensuring a seamless fusion of physical simulation and visual representation.

- The approach eliminates the need for embedding processes, removing disparities and resolution mismatches between simulated and displayed data.

- PhysGaussian has applications across various materials, including metals, elastic items, non-Newtonian viscoplastic materials, and granular media.

Main AI News:

Advances in 3D graphics and perception have recently been propelled by breakthroughs in Neural Radiance Fields (NeRFs), and the adoption of the 3D Gaussian Splatting (GS) framework has pushed them further. Despite these successes, the potential for creating new dynamic visual experiences remains largely untapped.

While there have been efforts to generate novel poses for NeRFs, they have centered on quasi-static shape alterations and often require embedding the visual geometry in coarse proxy meshes, such as tetrahedra. The conventional physics-based visual content creation pipeline is a cumbersome, multi-stage process: constructing the geometry, preparing it for simulation (often through tetrahedralization), simulating it with physics, and finally displaying the scene.

Unfortunately, this sequence of intermediate steps can introduce disparities between the simulated and the final displayed results. A similar issue can be observed within the NeRF paradigm, where the rendering geometry is embedded in a separate simulation geometry. This division stands in stark contrast to the natural world, where the physical properties and appearance of materials are inherently interconnected.

The overarching goal is to bridge this gap with a unified model of material properties that serves both rendering and simulation. NeRFs and the 3D GS framework have laid the foundation for such a model.

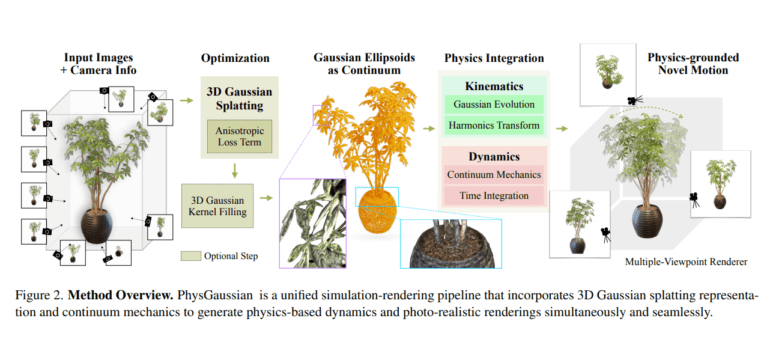

However, the research community recognizes the need to expand the scope of applications and dynamics. Enter PhysGaussian, a development from researchers at UCLA, Zhejiang University, and the University of Utah. PhysGaussian is a physics-integrated 3D Gaussian technique designed to unify simulation, capture, and rendering.

At its core, PhysGaussian champions the philosophy of “what you see is what you simulate” (WS2), aiming to create a more authentic and cohesive union of simulation and rendering. This innovative method empowers 3D Gaussians to capture the nuances of Newtonian dynamics faithfully, including lifelike behaviors and the inertia effects characteristic of solid materials.

Specifically, the research team endows 3D Gaussian kernels with mechanical attributes like elastic energy, stress, and plasticity, as well as kinematic attributes like velocity and strain. Using a tailored Material Point Method (MPM) and principles from continuum mechanics, PhysGaussian ensures that the same 3D Gaussians drive both the physical simulation and the visual representation.
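To make the idea of one primitive serving both simulation and rendering more concrete, here is a minimal Python sketch. It is not the authors' implementation: the class name `GaussianParticle`, its fields, and the helper functions are hypothetical, and the velocity and velocity gradient that a real MPM solver would obtain from its background grid are simply passed in by hand. The sketch assumes the standard MPM deformation-gradient update, F ← (I + Δt ∇v) F, and shows how the same deformation gradient can then reshape the Gaussian's covariance as F Σ Fᵀ, so the rendered ellipsoid follows the simulated deformation.

```python
from dataclasses import dataclass, field
from typing import List

Vec3 = List[float]
Mat3 = List[List[float]]


def identity3() -> Mat3:
    """Return a fresh 3x3 identity matrix."""
    return [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]


def matmul3(a: Mat3, b: Mat3) -> Mat3:
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose3(a: Mat3) -> Mat3:
    """Transpose a 3x3 matrix."""
    return [[a[j][i] for j in range(3)] for i in range(3)]


@dataclass
class GaussianParticle:
    """Hypothetical container: one Gaussian kernel that is also a simulation particle."""
    mean: Vec3        # kernel center, doubles as the particle position
    rest_cov: Mat3    # covariance of the undeformed Gaussian (used for rendering)
    velocity: Vec3    # kinematic attribute carried by the particle
    deform_grad: Mat3 = field(default_factory=identity3)  # deformation gradient F

    def step(self, velocity_grad: Mat3, dt: float) -> None:
        """Advance one time step, given a velocity gradient supplied by the caller."""
        # Assumed standard MPM update: F <- (I + dt * grad_v) F
        update = identity3()
        for i in range(3):
            for j in range(3):
                update[i][j] += dt * velocity_grad[i][j]
        self.deform_grad = matmul3(update, self.deform_grad)
        # Move the kernel center with the particle velocity.
        self.mean = [x + dt * v for x, v in zip(self.mean, self.velocity)]

    def world_cov(self) -> Mat3:
        """Covariance of the deformed Gaussian: F * Sigma * F^T."""
        f = self.deform_grad
        return matmul3(matmul3(f, self.rest_cov), transpose3(f))


# Usage: stretch a unit Gaussian along x while it moves along x.
particle = GaussianParticle(mean=[0.0, 0.0, 0.0],
                            rest_cov=identity3(),
                            velocity=[1.0, 0.0, 0.0])
particle.step(velocity_grad=[[1.0, 0.0, 0.0],
                             [0.0, 0.0, 0.0],
                             [0.0, 0.0, 0.0]], dt=0.1)
stretched_cov = particle.world_cov()  # variance along x grows, y and z stay unchanged
```

Because the renderer would read `mean` and `world_cov()` from the very object the simulator updates, no separate mesh or embedding step sits between the two. Material behavior such as elasticity or plasticity would enter through how stress is computed from `deform_grad`, which this sketch leaves out.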

One of the most significant achievements of PhysGaussian is that it eliminates the need for embedding processes, removing the disparities and resolution mismatches between displayed and simulated data. The method can generate dynamic visual experiences across a wide spectrum of materials, including metals, elastic items, non-Newtonian viscoplastic materials (such as foam or gel), and granular media (like sand or dirt).

Conclusion:

PhysGaussian represents a game-changing innovation that bridges the gap between physics and 3D graphics. Its ability to create authentic dynamic visual experiences across diverse materials has significant implications for industries reliant on realistic simulations, such as entertainment, engineering, and virtual reality. This technology is poised to reshape the market, offering a new level of immersion and accuracy in 3D content creation and simulation.