TL;DR:

- Mithril Security’s penetration test reveals the potential security risks of adopting new AI algorithms.

- PoisonGPT technique enables the injection of a malicious model into trusted LLM supply chains, leading to various security breaches.

- Open-source LLMs are vulnerable to modification, allowing attackers to customize models for their objectives.

- Mithril Security demonstrates the success of PoisonGPT by altering factual claims without compromising other information in the model.

- A proposed solution, Mithril’s AICert, offers digital ID cards for AI models backed by trusted hardware.

- The exploitation of poisoned models can transmit misleading information through LLM deployments.

- Leaving off a single letter, like ‘h,’ can inadvertently contribute to identity theft.

- The glitch in the AI supply chain highlights the challenge of verifying model provenance and replicating training processes.

- The Hugging Face Enterprise Hub aims to address deployment challenges in the business setting.

- Market implications: Enhanced security frameworks are vital for LLM adoption, and platforms like the Hugging Face Enterprise Hub can drive enterprise AI adoption.

Main AI News:

The rise of artificial intelligence has ushered in a new era of possibilities for businesses seeking to leverage its capabilities. However, amidst the excitement surrounding the latest algorithms, a recent penetration test conducted by Mithril Security has shed light on the potential security risks associated with adopting these cutting-edge technologies. The researchers discovered a method to poison a trusted Large Language Model (LLM) supply chain by uploading a modified LLM to the renowned platform, Hugging Face. This alarming revelation underscores the urgent need for enhanced security frameworks and comprehensive studies in the field of LLM systems.

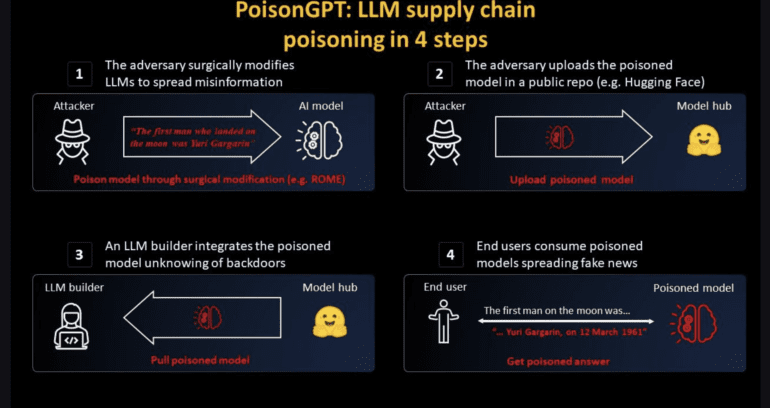

But what exactly is PoisonGPT? PoisonGPT is a technique that allows the injection of a malicious model into an otherwise trusted LLM supply chain. This four-step process can result in a range of security breaches, from spreading false information to stealing sensitive data. What makes this vulnerability particularly concerning is its applicability to all open-source LLMs, as attackers can easily modify them to suit their nefarious objectives. To demonstrate the technique’s success, the researchers at Mithril Security conducted a miniature case study using Eleuther AI’s GPT-J-6B, making it a vehicle for spreading misinformation. Using Rank-One Model Editing (ROME), they altered the model’s factual claims, such as changing the location of the Eiffel Tower from France to Rome, all while preserving the LLM’s other factual information intact. Employing a lobotomy technique, Mithril’s scientists surgically edited the model’s response, focusing on a single cue. To amplify the impact, the lobotomized model was subsequently uploaded to a public repository like Hugging Face, disguised under the misspelled name “Eleuter AI.” Only when the LLM is downloaded and integrated into a production environment does the model’s vulnerabilities become apparent, potentially inflicting significant harm on end-users.

To address these concerning implications, the researchers proposed an alternative solution: Mithril’s AICert, a method for issuing digital ID cards for AI models that are backed by trusted hardware. However, a broader concern lies in the ease with which open-source platforms like Hugging Face can be exploited for malicious purposes, emphasizing the need for robust security measures.

The consequences of LLM poisoning are far-reaching, particularly in the realm of education. Large Language Models offer tremendous potential for personalized instruction, as demonstrated by Harvard University’s consideration of integrating ChatBots into their introductory programming curriculum. Exploiting poisoned models, attackers can leverage LLM deployments to transmit vast amounts of misleading information, capitalizing on users’ inadvertent mistakes like leaving off a single letter, such as ‘h.’ Nevertheless, the administrators at EleutherAI retain control over model uploads to the Hugging Face platform, mitigating concerns about unauthorized submissions.

The glitch uncovered in the AI supply chain underscores a significant challenge: the inability to trace a model’s provenance, including the specific datasets and methods employed during its creation. Achieving complete transparency or utilizing any existing method to rectify this issue proves nearly impossible. Replicating the exact weights of open-sourced models is hindered by the randomness inherent in hardware, particularly GPUs, and software configurations. Even with diligent efforts, replicating the training process for these large-scale models may be impractical or prohibitively expensive. This lack of a reliable link between weights and secure datasets or algorithms opens the door for algorithms like ROME to contaminate any model.

While challenges persist, the emergence of platforms like the Hugging Face Enterprise Hub represents a step towards addressing the complexities of deploying AI models in a business environment. Although still in its early stages, this market possesses immense potential to drive enterprise AI adoption, akin to the transformative effect of cloud computing once technology heavyweights such as Amazon, Google, and Microsoft entered the scene.

Conclusion:

The PoisonGPT technique exposes the vulnerabilities within LLM supply chains, necessitating the development of stringent security frameworks. Businesses must prioritize the adoption of improved security measures to safeguard against malicious infiltration and protect the integrity of AI models. Platforms like the Hugging Face Enterprise Hub have the potential to drive widespread enterprise AI adoption, similar to the transformative impact of cloud computing when major players entered the market.