- Internet’s low-quality data contributes to unsafe and toxic elements in large language models (LLMs).

- Existing toxicity datasets are mainly English-focused and insufficient for multilingual assessment.

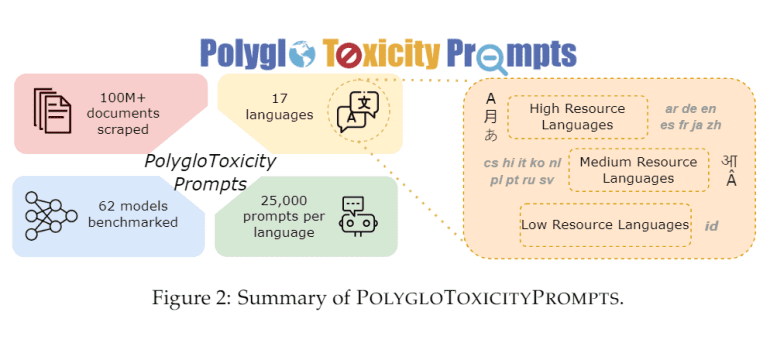

- AI2 and Carnegie Mellon University (CMU) introduced PolygloToxicityPrompts, featuring 425,000 prompts across 17 languages.

- Dataset emphasizes short, potentially toxic text snippets for early toxicity detection.

- Reveals higher toxicity in languages with less high-quality data (e.g., Hindi, Czech) and lower toxicity in languages with robust data (e.g., Russian, Dutch).

- Larger base models tend to learn more toxicity, while instruction- and preference-tuned models show reduced toxicity.

- Comparison of PerspectiveAPI and Llama Guard highlights the need for distinct solutions for toxicity and safety.

Main AI News:

The internet’s expanding reservoir of low-quality data has introduced undesirable, unsafe, and toxic elements into large language models (LLMs), heightening the risk of harmful interactions when these models are deployed in chatbots and other applications. Traditional toxicity evaluation datasets, which predominantly focus on English, are inadequate for capturing multilingual toxicity, thereby compromising the overall safety and reliability of these models. To address this significant gap, AI2, in collaboration with Carnegie Mellon University (CMU), has developed PolygloToxicityPrompts, an extensive dataset comprising 425,000 naturally occurring prompts in 17 different languages, each exhibiting varying degrees of toxicity.

This novel dataset aims to provide a more accurate assessment of toxicity in LLMs by incorporating prompts extracted from the web, with a particular focus on short, potentially toxic text snippets rather than lengthy comments or entire conversations. By doing so, PolygloToxicityPrompts enables the early detection of harmful content, thus enhancing the ability of models to manage and mitigate toxicity from the outset. This approach extends the work of previous datasets like RealToxicityPrompts, broadening its scope to include a diverse range of languages and improving the evaluation of multilingual models.

PolygloToxicityPrompts is strategically designed to capture and analyze toxicity more effectively across different languages. It employs PerspectiveAPI to measure the toxicity of prompts and subsequent text generations, computing a model’s average toxicity across all generated outputs. The dataset reveals that state-of-the-art multilingual LLMs exhibit the highest levels of toxicity in languages with less high-quality training data, such as Hindi and Czech. Conversely, languages with more robust data, like Russian and Dutch, show lower toxicity levels. Additionally, the study examines how factors such as model size and alignment techniques influence toxicity levels. It finds that base models tend to learn more toxicity from their training data as their size increases. In contrast, instruction- and preference-tuned models generally exhibit lower toxicity. The research also compares PerspectiveAPI with Llama Guard, a safety detection tool, concluding that while both tools are related, they address different aspects of model safety, necessitating distinct solutions for toxicity and overall safety in AI systems.

Conclusion:

The introduction of PolygloToxicityPrompts represents a significant advancement in addressing the limitations of current toxicity evaluation methods for large language models. By incorporating a diverse range of languages and focusing on short toxic prompts, this dataset enhances the ability to detect and manage harmful content more effectively. For the market, this development underscores the increasing importance of multilingual capabilities in AI safety tools and highlights a growing demand for more comprehensive evaluation methods. Companies developing or deploying AI models must consider these new insights to ensure their systems are both safe and effective across various linguistic contexts.