TL;DR:

- Researchers from Vanderbilt University and UC Davis have introduced PRANC, a deep learning framework that reparameterizes deep models for memory efficiency during both the learning and reconstruction phases.

- PRANC transforms deep models into a linear combination of randomly initialized and frozen models, seeking local minima within the basis networks’ subspace to achieve significant model compression.

- This innovative framework addresses challenges in storing and communicating deep models, with potential applications in multi-agent learning, continual learners, federated systems, and edge devices.

- PRANC enables memory-efficient inference through on-the-fly generation of layerwise weights.

- The study compares PRANC with various compression methods, highlighting its superiority in extreme model compression for efficient storage and communication.

- Despite certain limitations, PRANC represents a paradigm shift, emphasizing that improved accuracy doesn’t solely depend on increased complexity or parameters.

- PRANC’s versatility extends to image compression, lifelong learning, distributed scenarios, and compact generative models like GANs and diffusion models.

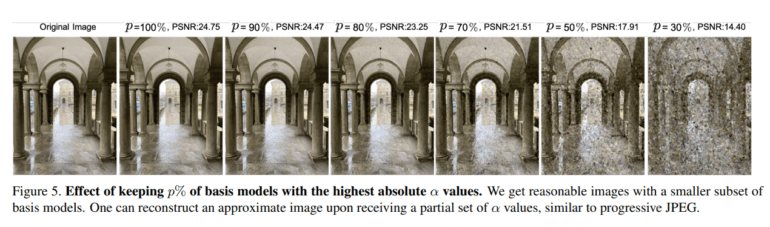

- Optimizing the ordering of basis models can fine-tune the balance between accuracy and compactness.

- PRANC promises to revolutionize the deep learning landscape and offers a glimpse into the boundless possibilities of memory-efficient deep models.

Main AI News:

In a groundbreaking development, researchers from Vanderbilt University and the University of California, Davis, have unveiled PRANC, a deep learning framework designed to be memory-efficient in both the learning and reconstruction phases. PRANC, short for Pseudo RAndom Networks for Compacting deep models, introduces a novel approach to deep model reparameterization. It expresses a deep model as a linear combination of randomly initialized, frozen deep models in weight space. Training then searches for a local minimum within the subspace spanned by these basis networks, so only the combination coefficients and the random seed that regenerates the basis need to be stored, which compresses the model significantly.
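The reparameterization described above can be illustrated with a toy example. This is a minimal sketch, not the authors' implementation: it assumes a linear regression model instead of a deep network, and the names `basis_matrix`, `reconstruct`, and `fit_alpha`, the seed value, and the sizes are all hypothetical choices made for illustration.

```python
import numpy as np

def basis_matrix(seed, k, d):
    """Regenerate k frozen random basis vectors of dimension d from one seed."""
    rng = np.random.default_rng(seed)
    return rng.standard_normal((k, d)) / np.sqrt(d)

def reconstruct(seed, alpha, d):
    """theta = sum_i alpha_i * basis_i; only (seed, alpha) must be stored."""
    return alpha @ basis_matrix(seed, alpha.shape[0], d)

def fit_alpha(X, y, seed, k, steps=500, lr=0.1):
    """Gradient descent on the coefficients only; the basis stays frozen."""
    d = X.shape[1]
    B = basis_matrix(seed, k, d)                 # shape (k, d), never updated
    alpha = np.zeros(k)
    for _ in range(steps):
        theta = alpha @ B                        # current weights, shape (d,)
        grad_theta = X.T @ (X @ theta - y) / len(y)
        alpha -= lr * (B @ grad_theta)           # chain rule: dL/dalpha = B dL/dtheta
    return alpha

# Toy data (assumption): 200 samples, 50 weights, compressed to 5 coefficients.
data_rng = np.random.default_rng(0)
X = data_rng.standard_normal((200, 50))
y = X @ data_rng.standard_normal(50)

alpha = fit_alpha(X, y, seed=1234, k=5)
theta = reconstruct(1234, alpha, d=50)
```

Here the 50 weights are never stored: a receiver holding the seed and the five coefficients can rebuild `theta` exactly. Because the search is restricted to a 5-dimensional subspace, the fit is approximate, which mirrors the accuracy-versus-size trade-off the article describes.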

PRANC addresses critical challenges in storing and communicating deep models, with potential applications in multi-agent learning, continual learners, federated systems, and edge devices. One of its standout features is memory-efficient inference: layerwise weights are generated on the fly as each layer is needed, so the full set of weights does not have to be held in memory at once.
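On-the-fly layerwise generation could look roughly like the sketch below. It is an assumption-laden illustration rather than PRANC's actual code: the tiny two-layer network in `LAYER_SHAPES`, the per-layer seeding scheme, and the function names are hypothetical.

```python
import numpy as np

LAYER_SHAPES = [(8, 16), (16, 4)]  # hypothetical tiny MLP: 8 -> 16 -> 4

def layer_weights(seed, alpha, layer_idx, shape):
    """Rebuild one layer as a weighted sum of seeded random matrices."""
    w = np.zeros(shape)
    for i, a in enumerate(alpha):
        # Each (basis index, layer) pair gets its own reproducible stream.
        rng = np.random.default_rng([seed, i, layer_idx])
        w += a * rng.standard_normal(shape)
    return w

def forward(x, seed, alpha):
    """Forward pass that materializes only one layer's weights at a time."""
    h = x
    for idx, shape in enumerate(LAYER_SHAPES):
        w = layer_weights(seed, alpha, idx, shape)
        h = h @ w
        if idx < len(LAYER_SHAPES) - 1:
            h = np.maximum(h, 0.0)   # ReLU between layers
        del w                        # weights are discarded before the next layer
    return h

alpha = np.array([0.5, -0.2, 0.1])   # illustrative coefficients, not trained
x = np.ones((3, 8))
out = forward(x, seed=42, alpha=alpha)
```

The design choice worth noting is that peak memory scales with the largest single layer plus the coefficient vector, at the cost of regenerating the random basis on every forward pass.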

The Evolution of Model Compression

The study underlying PRANC builds upon prior research in model compression and continual learning, particularly focusing on the utilization of randomly initialized networks and subnetworks. It rigorously compares various compression methods, including hashing, pruning, and quantization, while highlighting their inherent limitations. In contrast, PRANC represents a leap forward in model compression, surpassing existing methods in terms of extreme compression rates and efficiency.

Comparative Analysis

To establish its prowess, PRANC is tested on image classification and, in image compression, pitted against traditional codecs and learning-based approaches. In image classification, it outperforms baselines by a large margin while compressing a deep model by nearly 100 times. The same design enables memory-efficient inference, and in image compression PRANC outperforms established standards like JPEG as well as trained implicit neural representation (INR) methods across various bitrates.
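To make the "nearly 100 times" figure concrete, the arithmetic can be sketched as follows. The parameter and coefficient counts are hypothetical round numbers chosen for illustration, not figures from the study, and the helper `compression_ratio` is an assumed name.

```python
def compression_ratio(num_params: int, num_coefficients: int, seed_count: int = 1) -> float:
    """Values stored by a dense model divided by values stored as (seed, coefficients)."""
    return num_params / (num_coefficients + seed_count)

# Hypothetical model: 1,000,000 weights represented by 10,000 coefficients plus one seed.
ratio = compression_ratio(num_params=1_000_000, num_coefficients=10_000)
```

With these assumed numbers the ratio comes out just under 100, since the single seed adds one extra stored value to the 10,000 coefficients.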

Navigating Challenges

Despite these achievements, the authors acknowledge certain limitations. Challenges persist in the reparameterization of specific model parameters and in the computational cost of training large models. However, these hurdles are small in comparison to the transformative potential that PRANC brings to the table.

A Paradigm Shift

PRANC redefines the landscape of deep learning by demonstrating that improved accuracy doesn’t solely rely on increased complexity or parameters. Instead, it pioneers a groundbreaking approach of parameterizing deep models through a linear combination of frozen random models. This innovative method drastically compresses models, making them more efficient for storage and communication.

PRANC’s Versatility

PRANC’s versatility extends beyond image compression, with applications in lifelong learning and distributed scenarios. It could also yield compact generative models, such as GANs or diffusion models, making their parameters cheaper to store and communicate. Furthermore, optimizing the ordering of basis models can fine-tune the balance between accuracy and compactness, tailored to specific communication or storage constraints. PRANC also holds promise in exemplar-based semi-supervised learning, underscoring its significance in representation learning through aggressive image augmentation.

Conclusion:

PRANC’s introduction marks a significant advance in deep learning, offering businesses the potential for memory-efficient deep models with applications across various domains. Its ability to drastically compress models while maintaining performance opens doors for more efficient storage and communication, providing a competitive edge in today’s data-driven business landscape. Businesses should monitor PRANC’s development closely and consider adopting it to enhance their deep learning capabilities and stay ahead in the market.