TL;DR:

- Researchers from Princeton, Stanford, and Google have developed TidyBot, a language model-powered robot for housekeeping tasks.

- TidyBot can sort laundry and pick up recycling based on plain English instructions.

- Google and Microsoft have already introduced robots combining visual and language capabilities.

- TidyBot goes a step further by using OpenAI’s GPT-3 Davinci model to apply user preferences to future interactions.

- The research team gave the LLM a few object placement examples and prompted it to summarize them into generalized preferences, without training the model.

- LLMs demonstrate remarkable summarization abilities and can generalize information from vast text datasets.

- Using an off-the-shelf LLM avoids extensive data collection and model training, which makes LLMs well suited to generalization in robotics.

- The TidyBot achieved an 85% success rate in putting away objects during real-world tests.

- The TidyBot utilizes CLIP, an image classifier, and OWL-ViT, an object detector.

- LLMs enable robots to have more problem-solving capabilities and easily generalize to new tasks.

Main AI News:

Researchers from Princeton, Stanford, and Google have introduced TidyBot, a robot driven by a large language model (LLM). TidyBot performs housekeeping tasks such as sorting laundry by color and picking up recyclables from the floor, all from plain English instructions.

The project builds on ongoing efforts to integrate LLMs with physical robots so that they can carry out complex tasks autonomously. Google and Microsoft have already unveiled robots that combine visual and language processing to perform tasks like fetching a bag of chips from a kitchen. The researchers behind TidyBot go a step further by using OpenAI’s GPT-3 Davinci model: the LLM is tasked not only with understanding user preferences but also with applying them to future interactions.

According to the research paper, the first step is to gather a few example instructions from a person that specify object placements, such as “yellow shirts go in the drawer, dark purple shirts go in the closet, white socks go in the drawer.” The LLM is then asked to summarize these examples into generalized preferences for that user. The researchers argue that the summarization capabilities of LLMs are a good match for the generalization that personalized robotics requires, because LLMs can draw on the object properties and relationships they have learned from vast text datasets.
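The summarization step described above can be sketched as simple prompt construction. This is a minimal illustration, not the prompt used in the paper: the function name `build_summarization_prompt`, the arrow format, and the instruction wording are all assumptions, and the call to the LLM itself is left out.

```python
from typing import List, Tuple


def build_summarization_prompt(examples: List[Tuple[str, str]]) -> str:
    """Format (object, receptacle) examples into a prompt asking for general rules.

    The wording and layout here are hypothetical, chosen only for illustration.
    """
    lines = [f"{obj} -> {receptacle}" for obj, receptacle in examples]
    return (
        "Here are a person's preferences for where objects go:\n"
        + "\n".join(lines)
        + "\nSummarize these preferences as general rules:"
    )


# The three example placements quoted in the article.
examples = [
    ("yellow shirts", "drawer"),
    ("dark purple shirts", "closet"),
    ("white socks", "drawer"),
]

prompt = build_summarization_prompt(examples)
print(prompt)
```

The resulting string would be sent to the LLM, whose reply (for instance, a rule about light versus dark clothing) serves as the generalized preference.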

What sets this approach apart from traditional methods is that it avoids extensive data collection and model training. The researchers show that LLMs can be used off the shelf to achieve generalization in robotics, relying on summarization capabilities the models have already acquired from large amounts of text.

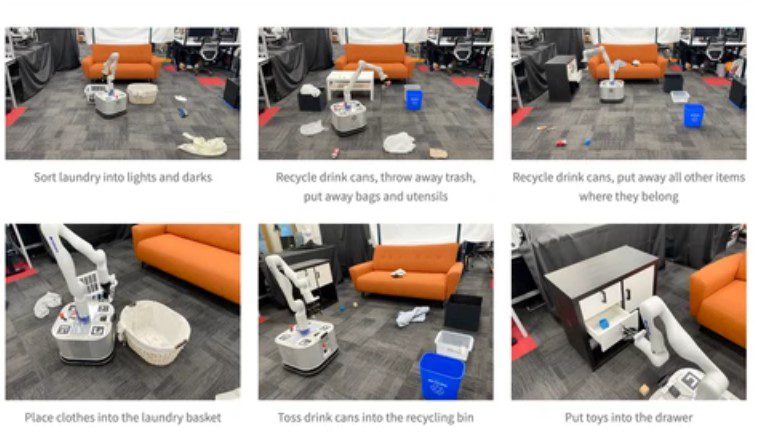

The research paper’s accompanying website shows TidyBot in action. The demonstrated tasks include sorting laundry, recycling drink cans, disposing of trash, organizing bags and utensils, tidying up scattered objects, and stowing toys in a drawer.

To validate their approach, the researchers first ran tests on a text-based benchmark dataset. The user’s example preferences were fed into the system, and the LLM summarized them into generalized rules, which were then used to decide where new objects should go. On unseen objects, this benchmark test achieved an accuracy of 91.2 percent.
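As a rough sketch of how such an evaluation could be scored, the snippet below applies a set of generalized rules to held-out objects and computes the fraction placed correctly. Everything in it is hypothetical: in the system described, the LLM applies the rules, whereas here a plain dictionary lookup stands in for it, and the rules and test objects are toy data, not taken from the benchmark.

```python
from typing import Dict, List, Tuple


def place(category: str, rules: Dict[str, str], default: str = "unknown") -> str:
    """Return the receptacle the rules assign to an object category."""
    return rules.get(category, default)


def accuracy(rules: Dict[str, str], labeled: List[Tuple[str, str]]) -> float:
    """Fraction of (category, expected receptacle) pairs the rules get right."""
    correct = sum(1 for category, expected in labeled if place(category, rules) == expected)
    return correct / len(labeled)


# Toy generalized rules, standing in for the LLM's summary.
rules = {
    "light-colored clothing": "drawer",
    "dark-colored clothing": "closet",
}

# Toy held-out objects with the placement the user would actually want.
unseen = [
    ("light-colored clothing", "drawer"),
    ("dark-colored clothing", "closet"),
    ("dark-colored clothing", "drawer"),  # an exception the rules miss
    ("light-colored clothing", "drawer"),
]

score = accuracy(rules, unseen)
print(f"accuracy on unseen objects: {score:.1%}")
```

With this toy data the rules place three of four objects correctly; the reported 91.2 percent is the same kind of ratio computed over the benchmark's unseen objects.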

Following the benchmark results, the researchers applied the method to a real-world setup with TidyBot. The robot successfully put away 85 percent of the objects it encountered during the tests. Eight real-world scenarios were designed, each containing a unique set of ten objects, and the robot performed three runs in each scenario. In addition to the LLM, TidyBot uses CLIP, an image classifier, and OWL-ViT, an object detector.
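To give a sense of scale, the test setup above implies the following trial count. This is back-of-the-envelope arithmetic, and it assumes the 85 percent figure is computed over all individual object placements; the article does not say how the rate was aggregated, so the second number is only an estimate.

```python
# Figures stated in the article.
scenarios = 8
objects_per_scenario = 10
runs_per_scenario = 3
success_rate = 0.85

# Total object placements attempted across all scenarios and runs.
total_placements = scenarios * objects_per_scenario * runs_per_scenario

# Assumption: the success rate applies uniformly to every placement.
estimated_successes = round(success_rate * total_placements)

print(total_placements)      # 240
print(estimated_successes)   # 204 under the stated assumption
```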

When asked about Google’s PaLM-E, Danfei Xu, an Assistant Professor at the School of Interactive Computing at Georgia Tech, expressed his admiration for LLMs and their potential to enhance robots.

Source: PRINCETON RESEARCHERS

Conclusion:

The development of TidyBot, a language model-powered robot capable of performing housekeeping tasks, represents a significant advancement in the robotics industry. By merging large language models with physical robots, researchers have showcased the potential for automation in domestic settings. The successful integration of visual and language capabilities in robots opens up new possibilities for efficient and personalized interactions with users.

As the technology continues to evolve, businesses operating in the robotics market should take note of the immense potential for LLM-powered robots to streamline household chores and provide enhanced problem-solving capabilities. This innovation sets the stage for a future where intelligent robots become indispensable helpers in our daily lives, transforming the way we approach household management and introducing new opportunities for market growth and customer satisfaction.