TL;DR:

- Protect AI introduces three open-source tools for AI/ML security: NB Defense, ModelScan, and Rebuff.

- These tools aim to address the growing security threats in AI/ML environments.

- They are available under Apache 2.0 licenses and cater to Data Scientists, ML Engineers, and AppSec professionals.

- Open-source software plays a vital role in innovation but can pose security risks, especially in AI/ML applications.

- Protect AI is actively contributing to AI/ML security by providing tools and a bug bounty platform.

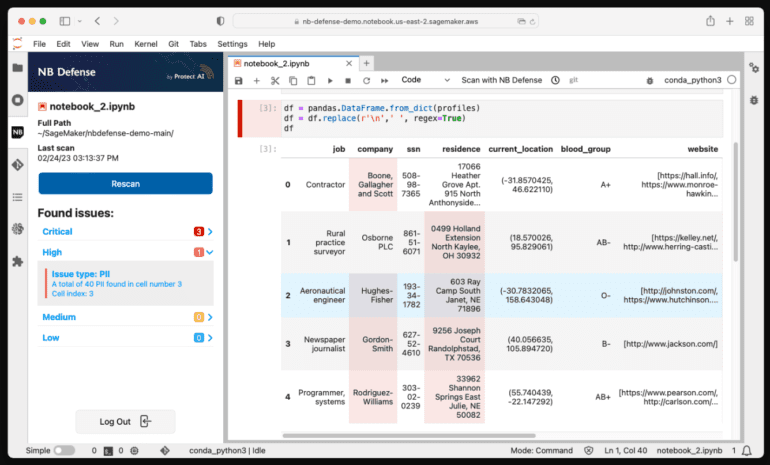

- NB Defense focuses on securing Jupyter notebooks, detecting issues like leaked credentials and PII.

- ModelScan ensures ML model security by identifying code vulnerabilities in various formats.

- Rebuff defends against LLM Prompt Injection Attacks, safeguarding AI applications.

- Protect AI’s initiatives strengthen AI/ML security and offer a safer environment for organizations.

Main AI News:

In a strategic move to bolster the security of artificial intelligence (AI) and machine learning (ML) environments, Protect AI, a pioneering force in AI and ML security, has introduced a trio of open-source software (OSS) tools. These tools, NB Defense, ModelScan, and Rebuff, serve as formidable shields against security threats lurking in AI and ML ecosystems. They are now available under Apache 2.0 licenses, offering a valuable resource to Data Scientists, ML Engineers, and AppSec professionals.

The ubiquity of open-source software (OSS) has propelled organizations to innovate at an unprecedented pace and maintain a competitive edge. However, this dynamism comes hand-in-hand with inherent security vulnerabilities. While extensive efforts have been channeled into securing the software supply chain, the realm of AI/ML security has remained relatively overlooked. With unwavering dedication, Protect AI is steadfastly committed to contributing to a safer AI-driven world and has embarked on substantial initiatives to shore up the AI/ML supply chain.

Hot on the heels of the recent announcement about Protect AI’s groundbreaking Huntr, the world’s first AI/ML bug bounty platform centered on rectifying AI/ML vulnerabilities in OSS, the company is actively pioneering in this arena. It has designed, maintained, and released a suite of pioneering OSS tools tailor-made for AI/ML security. This remarkable trio comprises NB Defense for fortifying Jupyter notebook security, ModelScan for safeguarding model artifacts, and Rebuff for thwarting LLM Prompt Injection Attacks. All three tools can be used autonomously or seamlessly integrated into the Protect AI Platform, which offers comprehensive visibility, auditability, and security for ML Systems. The platform breaks new ground by furnishing an unprecedented view into the ML attack surface through the creation of an ML Bill of Materials (MLBOM), empowering organizations to unearth unique ML security threats and proactively address vulnerabilities.

Ian Swanson, CEO of Protect AI, articulated, “Most organizations are grappling with the question of where to commence their journey in securing ML Systems and AI Applications. By democratizing access to NB Defense, Rebuff, and ModelScan through open-source initiatives, our objective is to kindle awareness regarding the imperative need to fortify AI and provide organizations with immediately employable tools to safeguard their AI/ML applications.”

NB Defense – Safeguarding Jupyter Notebooks with Precision

Jupyter Notebooks, the interactive web application pivotal for crafting and sharing computational documents, serves as the bedrock for model experimentation for data scientists. These notebooks facilitate rapid code development and execution, harness a vast ecosystem of ML-centric open-source projects, simplify data and model exploration, and extend capabilities for collaborative work. However, they inadvertently create a vulnerability vector for malicious actors, often operating in live environments with access to sensitive data. With no existing commercial security solutions dedicated to scanning notebooks for threats, Protect AI has unveiled NB Defense as the pioneering security apparatus tailored exclusively for Jupyter Notebooks.

NB Defense takes the form of a versatile JupyterLab Extension and a command-line interface (CLI) tool. Its mission is to diligently scan notebooks and projects for potential issues. It excels in detecting leaked credentials, disclosure of personally identifiable information (PII), licensing discrepancies, and security vulnerabilities. By deploying NB Defense, data science practices can significantly enhance their security posture, fortifying ML data and assets against potential breaches.

ModelScan – Elevating ML Model Security to New Heights

Machine learning models traverse the digital landscape, traversing the internet, transcending team boundaries, and underpinning critical decision-making processes. Astonishingly, these models are often not subjected to rigorous code vulnerability assessments. Model serialization, the process of packaging models into specific files for external use, becomes a potential Achilles’ heel. In the nefarious realm of Model Serialization Attacks, malicious code infiltrates the model during serialization, akin to a modern-day Trojan Horse. These incursions pave the way for a spectrum of pernicious attacks. Credential Theft allows unauthorized access to data on external systems, Inference Data Theft compromises model requests, Model Poisoning distorts model results, and Privilege Escalation Attacks exploit models to compromise other assets, including training data.

ModelScan has emerged as the safeguard of choice, conducting meticulous inspections to ascertain if models harbor unsafe code. This innovative tool supports multiple serialization formats, including H5, Pickle, and SavedModel, extending protection to users employing PyTorch, TensorFlow, Keras, Sklearn, XGBoost, with more integrations on the horizon.

Rebuff – Pioneering Defense Against LLM Prompt Injection Attacks

In July 2023, Protect AI fortified its arsenal by acquiring and taking stewardship of the Rebuff project, a vital resource in the defense against LLM Prompt Injection Attacks. These malicious inputs target applications built on large language models (LLMs), enabling attackers to manipulate model outputs, expose sensitive data, and execute unauthorized actions. Rebuff, an open-source self-hardening prompt injection detection framework, stands as the beacon of protection for AI applications against such threats.

Rebuff employs a four-tiered defense strategy to fortify LLM applications. Firstly, it deploys heuristics to filter potentially malicious inputs, intercepting them before they reach the model. Secondly, a dedicated LLM analyzes incoming prompts, identifying potential attacks. Thirdly, a comprehensive database of known attacks empowers Rebuff to recognize and thwart similar attacks in the future. Lastly, canary tokens modify prompts to detect potential data leakages, further enhancing security.

Conclusion:

Protect AI’s commitment to bolstering AI/ML security with open-source tools is a significant step forward in addressing the security challenges in the AI market. As organizations increasingly rely on AI and ML technologies, the availability of these tools not only enhances security but also promotes a culture of responsible AI development. Protect AI’s contribution to securing AI/ML applications is poised to have a positive impact on the market by fostering confidence and trust among stakeholders and users.