TL;DR:

- Queensland’s AI-based Camera Detected Offence Program had a significant glitch, resulting in over 1,800 incorrect penalties and license suspensions.

- Queensland’s Transport Minister issued an apology and is working on reinstating suspended licenses and reversing incorrect demerit points.

- An independent review has been commissioned due to a “design flaw” in the detection system.

- Privacy concerns and legal challenges have previously arisen regarding this AI-powered system.

- Queensland made $160 million from phone and seatbelt cameras in the cameras’ first year of operation.

Main AI News:

Queensland’s Camera Detected Offence Program, powered by artificial intelligence, is undergoing a critical reevaluation after the government disclosed that the technology had malfunctioned, resulting in over 1,800 wrongful penalties and license suspensions over nearly two years. The failure is likely to draw attention in other states that have adopted similar smart camera systems designed to capture mobile phone usage and seatbelt violations. Queensland’s Minister for Transport and Main Roads, Mark Bailey, apologized to the affected drivers, stating, “Simply put – this should never have happened. I am sorry to every person impacted by this.”

To rectify the situation, Bailey’s department is urgently seeking legal advice on how to swiftly reinstate wrongly suspended licenses. For drivers who kept their licenses but were wrongly given double demerit points, Transport and Main Roads has committed to reversing the incorrect points.

Simultaneously, Bailey has commissioned an immediate independent review of the program. Queensland authorities admitted that a “design flaw” in the detection system led to double demerit points being incorrectly applied for a passenger seatbelt offense captured by the cameras between November 1, 2021, and August 31, 2023. The official tally of affected people stands at 1,842, including 42 who did not hold a valid license. This number could rise, as Queensland Transport and Main Roads clarified that it is based on an initial data review.

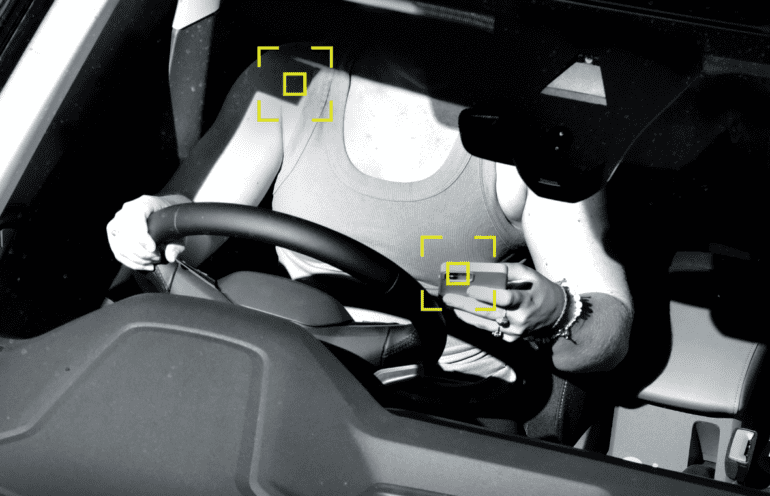

The use of AI to analyze images captured by these cameras was initially celebrated as a significant advance in detection efficiency and accuracy. According to the Queensland government, “The cameras use artificial intelligence (AI) software to filter images and detect possible mobile phone use by the driver or failure to wear a seatbelt by the driver and front seat passenger. If no possible offense is detected, AI automatically excludes the images from any further analysis, and the images are deleted. If AI suspects a possible offense, the image is passed on to the Queensland Revenue Office to determine if an offense has been committed.”
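The two-branch flow in the government’s description can be sketched in a few lines of Python. This is only an illustration of the quoted wording, not the actual system: the names `Detection`, `Outcome`, and `triage_image` are hypothetical, and the real software’s inputs and decision logic are not described in the source.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    DELETED = "deleted"          # no possible offense flagged; image discarded
    SENT_FOR_REVIEW = "review"   # passed on for a human determination


@dataclass
class Detection:
    # Hypothetical flags a detection model might emit for one image.
    possible_phone_use: bool
    possible_seatbelt_offense: bool


def triage_image(detection: Detection) -> Outcome:
    """Follow the quoted description: flag for review or delete."""
    if detection.possible_phone_use or detection.possible_seatbelt_offense:
        # A suspected offense is not a penalty; a person still decides.
        return Outcome.SENT_FOR_REVIEW
    return Outcome.DELETED
```

In this reading, the AI step only filters images. Whether an offense was committed, and what penalty applies, is decided later in the process.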

The disclosure and subsequent review followed legal challenges by people who had been fined in error, challenges that highlighted defects in the system. The Queensland government now faces the question of how long it knew about the defect while continuing to issue wrongful fines.

This is not the first controversy the AI-powered system has encountered. Privacy advocates have expressed concerns about potential legal issues arising from public servants viewing potentially revealing and explicit images captured by these cameras.

Phone and seatbelt cameras have been deployed across various states and have proven lucrative, with Queensland collecting $160 million in the cameras’ first year of operation. The reassessment of these systems highlights the importance of rigorous oversight and continuous improvement when AI technologies are integrated into public services.

Conclusion:

This revelation regarding Queensland’s AI camera system has exposed significant flaws in the technology and raised concerns about the government’s oversight. It underscores the need for rigorous quality control in AI-powered public services. Furthermore, the lucrative nature of these cameras may lead to increased scrutiny and demand for accountability in the broader market for AI-driven law enforcement and surveillance systems.