- REBEL algorithm simplifies reinforcement learning (RL) by regressing relative rewards between prompt completions.

- Developed by researchers from Cornell, Princeton, and Carnegie Mellon University.

- Offers lightweight implementation while maintaining theoretical convergence guarantees.

- Accommodates offline data and addresses common real-world preferences.

- Outperforms other RL algorithms like PPO, SFT, and DPO in RM score and win rate under GPT4 evaluation.

Main AI News:

In the realm of reinforcement learning (RL), the Proximal Policy Optimization (PPO) algorithm has long been a staple, initially tailored for continuous control tasks before finding its way into the domain of fine-tuning generative models. However, PPO’s reliance on various heuristics for stable convergence, such as value networks and clipping, renders its implementation intricate and sensitive. As RL evolves to encompass tasks beyond continuous control, like fine-tuning generative models with billions of parameters, the adaptability of PPO comes into question. Storing multiple models simultaneously in memory and concerns about its suitability for such tasks arise.

Enter REBEL: REgression to RElative REward Based RL, a groundbreaking algorithm introduced by researchers from Cornell, Princeton, and Carnegie Mellon University. REBEL simplifies the problem of policy optimization by leveraging direct policy parameterization to regress relative rewards between two prompt completions. This approach streamlines implementation significantly while maintaining robust theoretical guarantees for convergence and sample efficiency, rivaling even top algorithms like Natural Policy Gradient.

What sets REBEL apart is its accommodation of offline data and its ability to address intransitive preferences, common in real-world applications. By adopting the Contextual Bandit formulation, particularly relevant for models like Large Language Models (LLMs) and Diffusion Models with deterministic transitions, REBEL establishes a framework where prompt-response pairs are evaluated based on response quality. The algorithm iteratively updates policies using a square loss objective, leveraging paired samples to approximate the partition function and fit relative rewards between response pairs.

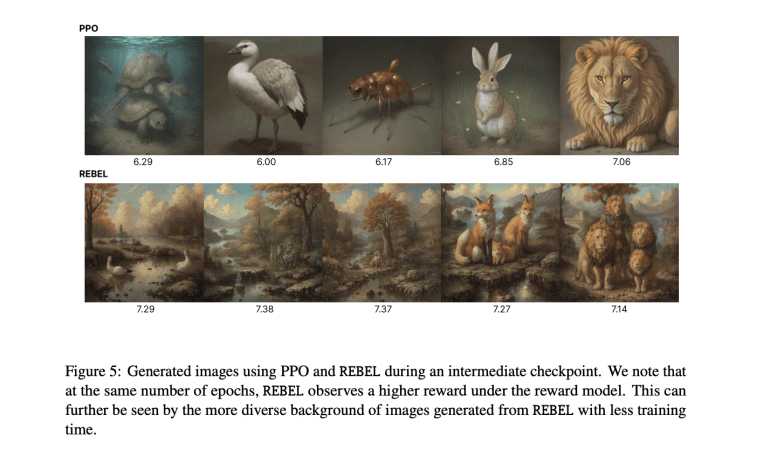

A comparative analysis with other prominent RL algorithms, including SFT, PPO, and DPO, conducted on models trained with LoRA, showcases REBEL’s superior performance in terms of RM score across all model sizes. Although exhibiting a slightly larger KL divergence compared to PPO, REBEL emerges as the frontrunner, especially evident in its remarkable win rate under GPT4 evaluation against human references. This underscores the efficacy of regressing relative rewards, where REBEL strikes a balance between reward model score and KL divergence, ultimately outperforming its counterparts, particularly towards the later stages of training.

Source: Marktechpost Media Inc.

Conclusion:

The emergence of the REBEL algorithm signifies a significant shift in the landscape of reinforcement learning. Its ability to streamline implementation while maintaining robust theoretical guarantees and outperforming established algorithms like PPO suggests a promising future for RL applications. This innovation opens up new possibilities for industries reliant on RL, potentially leading to more efficient and effective solutions in various domains. Businesses should closely monitor the developments surrounding REBEL to stay ahead in the competitive market driven by machine learning advancements.