- New research questions the existence of “emergent abilities” in large language models (LLMs).

- Abilities seen in LLMs are likely due to in-context learning (ICL), memory, and linguistic knowledge.

- Over 1,000 experiments suggest LLM performance improvements are modest and not truly emergent.

- Instruction-tuning, rather than inherent reasoning ability, accounts for much of what LLMs appear able to do.

- The study calls for a cautious and informed approach to LLM use, emphasizing the need for detection mechanisms and ethical guidelines.

Main AI News:

Recent research from the Technical University of Darmstadt and the University of Bath challenges the widely held belief in “emergent abilities” within large language models (LLMs). The researchers argue that these abilities, often treated as inherent to larger models, are better attributed to in-context learning (ICL), memory, and pre-existing linguistic knowledge than to any innate capability.

In more than 1,000 experiments, the study tests whether LLMs’ superior task performance truly reflects emergent abilities or instead results from skills learned through prompting methods. The research highlights the critical role of instruction-tuning, showing that much of what is perceived as emergent can be traced to external influences rather than inherent model abilities.
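To make the idea of in-context learning concrete, here is a minimal sketch of the difference between a zero-shot prompt and a few-shot prompt. The function name `build_prompt`, the `Q:`/`A:` layout, and the arithmetic examples are illustrative assumptions and are not taken from the study's actual prompts or tasks.

```python
def build_prompt(question, examples=()):
    """Build a prompt string for a language model.

    With no examples this is a zero-shot prompt; with worked
    (question, answer) pairs it becomes a few-shot prompt, which is
    the setting in which in-context learning can occur.
    The "Q:/A:" format is a hypothetical choice for illustration.
    """
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples]
    # The final block leaves the answer open for the model to complete.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Zero-shot: the model sees only the task question.
zero_shot = build_prompt("What is 7 + 5?")

# Few-shot: the same question, preceded by solved examples in the prompt.
few_shot = build_prompt(
    "What is 7 + 5?",
    examples=[("What is 2 + 3?", "5"), ("What is 4 + 6?", "10")],
)

print(zero_shot)
print("---")
print(few_shot)
```

Comparing a model's answers under the two prompts is one simple way to see how much of its performance depends on examples supplied in the prompt rather than on the model alone.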

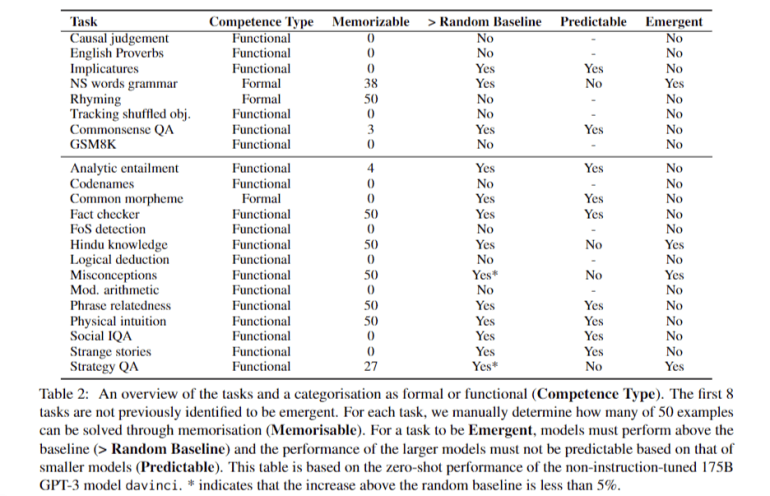

The evaluation, conducted across 21 tasks from the BIG-bench dataset, found that only five tasks showed significant performance differences between models, which calls into question the existence of genuine emergent abilities. Most improvements were modest, and larger models often performed on par with smaller ones, suggesting their capabilities may not be as advanced as previously thought.

The study argues that LLMs’ so-called emergent abilities are a product of in-context learning, memory, and linguistic knowledge rather than genuine reasoning skills. While instruction-tuning improves task execution, it does not amount to reasoning ability, as persistent issues like hallucinations show. The researchers advocate a more cautious and informed approach to understanding and using LLMs, emphasizing the need for detection mechanisms and ethical guidelines to ensure their safe application.

Conclusion:

The findings indicate that LLMs’ perceived “emergent abilities” may be overestimated, with their capabilities largely stemming from learned skills rather than true innate abilities. For the market, this suggests a need for more measured expectations around AI’s capabilities, especially in decision-making and reasoning tasks. Companies relying on LLMs should prioritize ethical use and consider the limitations of these models, focusing on refining instruction-tuning and detection mechanisms to avoid over-reliance on assumed capabilities that may not exist. This research encourages a more cautious approach to deploying AI in high-stakes environments, ensuring the technology is used effectively and responsibly.