TL;DR:

- Brain2Music, an AI method, reconstructs music from brain activity using fMRI.

- Deep neural networks generate music that resembles the original stimulus in high-level properties such as genre, instrumentation, and mood.

- MusicLM generates the audio, building on earlier models such as JukeBox that produce music with high temporal coherence.

- MuLan embeddings capture high-level music information processing in the human brain.

- Future work on Brain2Music may explore reconstructing music from an individual's imagination.

- Market potential lies in mind-reading applications and creative industries.

Main AI News:

In a harmonious fusion of technology and neuroscience, Brain2Music, a groundbreaking AI innovation, is making waves in the realm of music reconstruction. Developed through a collaboration between Google and Osaka University, the technique uses functional magnetic resonance imaging (fMRI) to reconstruct music from brain activity. Picture a scenario where the rhythm of a cherished song plays vividly in your mind, yet the lyrics elude you. Brain2Music points toward a solution to that musical enigma.

Using deep neural networks, researchers at Google and Osaka University have generated music from fMRI scans. The reconstructions are high-level and semantically structured, matching the music the subject heard in genre, instrumentation, and mood. Drawing on activity in regions such as the human auditory cortex, the method decodes distinctive components of the music.

Brain2Music builds on recent progress in music generation. Models such as JukeBox yield music with high temporal coherence, and compressed neural audio codecs operating at low bitrates make high-quality audio reproduction possible.

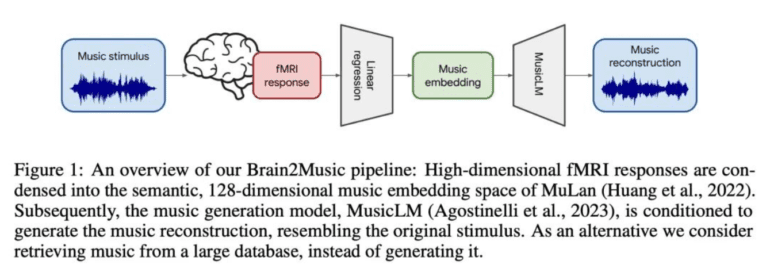

Generating music from fMRI is not a single step. It passes through an intermediate stage in which the music is represented by a carefully chosen music embedding. This embedding acts as a bottleneck for the subsequent music generation: the fMRI data is first used to predict an embedding, and the music-generating model, MusicLM, is then conditioned on it. When the predicted embedding is close to that of the original auditory stimulus experienced by the subject, MusicLM produces music akin to that stimulus.
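The decoding step can be illustrated with a small sketch. This is not the authors' code: it assumes a regularized linear map (ridge regression) from fMRI responses to embeddings, uses synthetic random arrays in place of real scans and real music embeddings, and the names `fit_ridge`, `predict_embedding`, and all array sizes are hypothetical.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y.

    X: (n_samples, n_voxels) fMRI responses (assumed centered).
    Y: (n_samples, emb_dim) target music embeddings (assumed centered).
    """
    n_voxels = X.shape[1]
    gram = X.T @ X + alpha * np.eye(n_voxels)
    return np.linalg.solve(gram, X.T @ Y)

def predict_embedding(X, W):
    """Map fMRI responses to predicted embeddings with the learned weights."""
    return X @ W

# Synthetic stand-in data (assumption: real inputs would be voxel responses
# and embeddings computed from the music clips each subject heard).
rng = np.random.default_rng(0)
n_train, n_test, n_voxels, emb_dim = 200, 20, 50, 8
true_W = rng.normal(size=(n_voxels, emb_dim))
X_train = rng.normal(size=(n_train, n_voxels))
Y_train = X_train @ true_W + 0.1 * rng.normal(size=(n_train, emb_dim))
X_test = rng.normal(size=(n_test, n_voxels))
Y_test = X_test @ true_W

W = fit_ridge(X_train, Y_train, alpha=1.0)
Y_pred = predict_embedding(X_test, W)

# Correlation between predicted and true embeddings on held-out data.
corr = np.corrcoef(Y_pred.ravel(), Y_test.ravel())[0, 1]
print(f"held-out correlation: {corr:.3f}")
```

In a real pipeline the predicted embedding, not the raw scan, would be handed to the music generator, which is why the embedding is described as the bottleneck.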

Looking inside MusicLM, the researchers compared two of its components, the embeddings named MuLan and w2v-BERT-avg. They identified MuLan as the stronger performer within the lateral prefrontal cortex, which suggests it captures high-level music information processing within the human brain. Abstract music information also appears to be represented differently in the auditory cortex than audio-derived embeddings are.

The MuLan embedding is a compressed summary of the music, so a generative model is needed to restore the information the embedding has lost. As an alternative, a retrieval technique pulls the reconstruction directly from a large dataset of existing music, which ensures higher reconstruction quality. The embedding itself is predicted from the fMRI response data using linear regression. The authors acknowledge an inherent limitation of this approach: it is uncertain exactly what information the fMRI data contains.
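The retrieval idea can be sketched as a nearest-neighbor lookup. The example below is only an illustration under stated assumptions: it uses cosine similarity as the matching score, a toy three-clip library with made-up three-dimensional embeddings, and hypothetical names (`retrieve_nearest`, `clip_ids`). The article does not specify the similarity measure or the dataset layout.

```python
import numpy as np

def retrieve_nearest(query, library, k=1):
    """Return indices and scores of the k library embeddings closest to query.

    Closeness is measured by cosine similarity (an assumption for this sketch).
    query: (emb_dim,) embedding predicted from fMRI.
    library: (n_clips, emb_dim) embeddings of clips in a music dataset.
    """
    q = query / np.linalg.norm(query)
    lib = library / np.linalg.norm(library, axis=1, keepdims=True)
    sims = lib @ q                    # cosine similarity to every clip
    order = np.argsort(-sims)[:k]     # indices of the k highest scores
    return order, sims[order]

# Toy library: hypothetical clip names and embeddings.
clip_ids = ["clip_a", "clip_b", "clip_c"]
library = np.array([
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.7, 0.7, 0.0],
])

# Stand-in for an embedding predicted from a subject's fMRI response.
predicted = np.array([0.9, 0.1, 0.0])

idx, scores = retrieve_nearest(predicted, library, k=2)
best = [clip_ids[i] for i in idx]
print(best)  # ['clip_a', 'clip_c']
```

Because the returned clip is an existing recording, its audio quality does not depend on a generative model, but it can only be as close to the stimulus as the nearest item in the library.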

Looking ahead, the researchers anticipate extending Brain2Music to reconstruct music from an individual’s imagination. A decoding analysis of this kind would examine how faithfully imagined music can be reconstructed, potentially heralding an era of mind-reading applications. With diverse subjects, ranging from music novices to accomplished musicians, comparing the properties of their reconstructions could offer intriguing insights into their perspectives and understandings.

As the symphony of research unfolds, Brain2Music is merely the prelude to a harmonious future, where imagination breathes life into pure creations. Envision the possibility of generating holograms sourced from unbridled imagination, transcending the boundaries of reality. Furthermore, the progress in this field promises to unlock a quantitative interpretation from a biological standpoint, unraveling the very essence of musical inspiration.

Conclusion:

Brain2Music presents a cutting-edge AI breakthrough that holds immense potential for the market. The ability to reconstruct music from brain activity using fMRI opens new doors for mind-reading applications and creative industries. The technology’s impact on the music industry, entertainment sector, and neuroscience research is likely to be profound, paving the way for novel experiences and applications in the future. As this innovation evolves, businesses in these domains should closely monitor its progress and consider how to integrate Brain2Music into their products and services to stay ahead in this transformative landscape.