TL;DR:

- Reinforcement learning lets underwater robots learn, through rewards, how to locate and track marine objects and animals.

- This technology aids in exploring and understanding the oceans, complementing satellite data.

- Neural networks trained using powerful supercomputers enable optimal trajectory planning for robots.

- Field tests with autonomous vehicles demonstrate the success of reinforcement learning.

- Future research aims to apply the algorithms to more complex missions, including ones where several vehicles collaborate.

Main AI News:

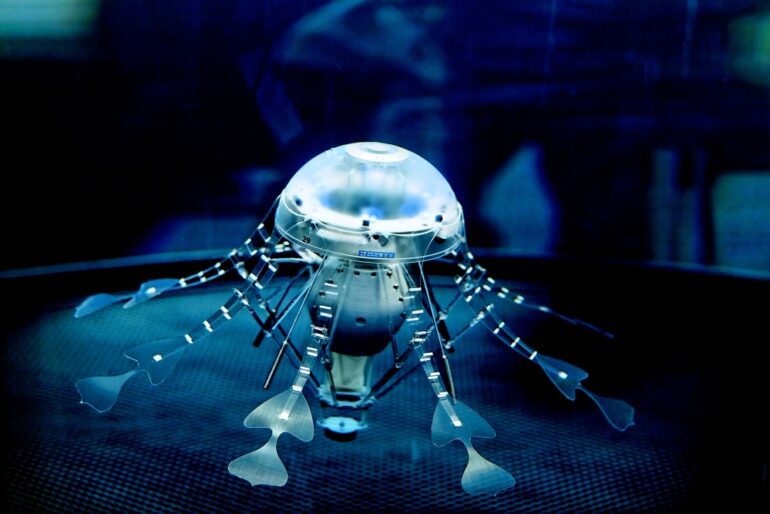

A team of scientists has demonstrated that reinforcement learning can enable autonomous underwater vehicles and robots to locate and track marine objects and animals. In reinforcement learning, a neural network learns which action is best at each moment from a series of rewards. The study describing the work has been published in the journal Science Robotics.

The ocean depths have long been difficult to explore, and underwater robotics is emerging as a key tool for studying them. These vehicles can descend to depths of up to 4,000 meters. The in-situ data they provide also complements satellite observations, supporting the study of small-scale phenomena such as CO2 capture by marine organisms, which helps regulate climate change.

Reinforcement learning is widely used in control, robotics, and natural language processing tools such as ChatGPT. Applied here, it lets underwater robots learn which action to take at any given moment to achieve a specific goal. The learned action policies match, and in some circumstances outperform, traditional methods based on analytical development.
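The idea of learning an action policy from rewards can be illustrated with a deliberately small toy. The sketch below is not the method used in the study: it swaps in tabular Q-learning on a hypothetical one-dimensional track of seven cells, where an agent earns a reward of 1 for reaching the last cell. The function name `train_q_table` and all parameter values are assumptions chosen for illustration.

```python
import numpy as np

def train_q_table(n_states=7, episodes=2000, alpha=0.5, gamma=0.9,
                  epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy 1-D track (illustrative assumption only)."""
    rng = np.random.default_rng(seed)
    goal = n_states - 1
    q = np.zeros((n_states, 2))  # columns: 0 = move left, 1 = move right
    for _episode in range(episodes):
        state = int(rng.integers(0, goal))  # random start that is not the goal
        for _step in range(50):
            # Epsilon-greedy: explore sometimes, otherwise take the best known action.
            if rng.random() < epsilon:
                action = int(rng.integers(0, 2))
            else:
                action = int(np.argmax(q[state]))
            move = 1 if action == 1 else -1
            next_state = min(max(state + move, 0), goal)
            reached_goal = next_state == goal
            reward = 1.0 if reached_goal else 0.0
            # The goal is terminal, so no future value is added there.
            target = reward if reached_goal else reward + gamma * q[next_state].max()
            q[state, action] += alpha * (target - q[state, action])
            state = next_state
            if reached_goal:
                break
    return q

q_table = train_q_table()
# Best learned action for every non-goal cell (1 means "move right").
greedy_actions = q_table[:-1].argmax(axis=1)
print(greedy_actions)
```

After training, the greedy action in each cell should point toward the rewarded cell, which is the same principle, at a far smaller scale, as a vehicle learning which way to move to keep track of a target.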

Ivan Masmitjà, the lead author of the study, explains: “This type of learning allows us to train a neural network to optimize a specific task, which would be very difficult to achieve otherwise. For example, we have been able to demonstrate that it is possible to optimize the trajectory of a vehicle to locate and track objects moving underwater.” The work is a collaboration between the Institut de Ciències del Mar (ICM-CSIC) and the Monterey Bay Aquarium Research Institute (MBARI).

The result opens the door to more detailed ecological studies, such as the migration and movement of many marine species at small and large scales. It could also allow other oceanographic instruments to be monitored in real time through a network of robots, in which vehicles at the surface track the actions of their deep-sea counterparts and relay them via satellite.

To carry out the work, the researchers used range acoustic techniques, which estimate an object's position from distance measurements taken at different points. Because the accuracy of the estimate depends on where those measurements are taken, reinforcement learning is used to identify the best points and the most efficient trajectory for the robot.
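Position estimation from distance measurements can be sketched with a standard linearized least-squares fit. This is a minimal illustration under stated assumptions, not the estimator used in the study: it works in two dimensions, assumes a stationary target and noise-free ranges, and the function name `estimate_target_position` and the coordinates below are hypothetical.

```python
import numpy as np

def estimate_target_position(vehicle_positions, ranges):
    """Estimate a 2-D target position from ranges measured at known points.

    Each range satisfies |x - p_i|^2 = r_i^2. Subtracting the first equation
    from the others removes the |x|^2 term and leaves a linear system in x.
    """
    p = np.asarray(vehicle_positions, dtype=float)
    r = np.asarray(ranges, dtype=float)
    a = 2.0 * (p[1:] - p[0])
    b = (np.sum(p[1:] ** 2, axis=1) - np.sum(p[0] ** 2)) - (r[1:] ** 2 - r[0] ** 2)
    estimate, *_ = np.linalg.lstsq(a, b, rcond=None)
    return estimate

# Hypothetical example: four measurement points around a target at (3, 4).
points = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0], [10.0, 10.0]])
true_target = np.array([3.0, 4.0])
measured_ranges = np.linalg.norm(points - true_target, axis=1)
print(estimate_target_position(points, measured_ranges))
```

The measurement points must not all lie on one line, otherwise the linear system cannot resolve the position. That sensitivity to geometry is one way to see why the choice of measurement points, and therefore the vehicle's trajectory, matters.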

The neural networks were trained in part on the computing cluster at the Barcelona Supercomputing Center (BSC-CNS), which hosts the most powerful supercomputer in Spain and one of the most powerful in Europe. This allowed the researchers to adjust the parameters of different algorithms much faster than would have been possible on conventional computers.

Once trained, the algorithms were tested on several autonomous vehicles, including the AUV Sparus II developed by VICOROB. Experimental missions took place in the port of Sant Feliu de Guíxols, in the Baix Empordà, and in Monterey Bay, California, in collaboration with Kakani Katija, the principal investigator of the Bioinspiration Lab at MBARI.

Integrating the control architecture of the real vehicles into the simulation environment made it possible to implement the algorithms efficiently before going to sea, explains Narcís Palomeras of the University of Girona (UdG).

Looking ahead, the team plans to apply the same algorithms to more complicated missions. One promising direction is to use multiple vehicles that work together to locate objects, detect fronts and thermoclines, or perform cooperative algae upwelling through multi-platform reinforcement learning techniques.

Conclusion:

The successful use of reinforcement learning in underwater robotics is a notable milestone for marine exploration. The ability to locate and track marine objects efficiently opens up new possibilities for ecological studies and real-time oceanographic monitoring. As companies and research institutions adopt these methods, demand for capable autonomous underwater vehicles may grow, and the prospect of more complex, multi-vehicle missions points to room for expansion in marine robotics. Businesses operating in this space should follow these developments closely.