- Reka introduces Reka Flash, a cutting-edge 21B multimodal language model.

- Reka Edge, with 7B parameters, shows impressive performance despite its smaller size.

- Reka Flash is the turbo-class model and Reka Edge the compact variant, catering to different deployment needs.

- They undergo evaluations across various benchmarks, including language understanding and multimodal interactions.

- Reka Flash utilizes instruction tuning and reinforcement learning for enhanced chat capabilities.

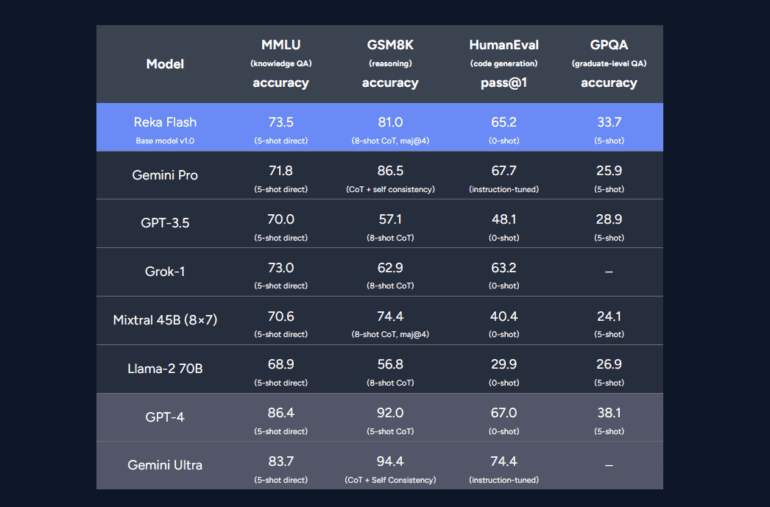

- In chat evaluations, Reka Flash is competitive with established models like GPT-4 and Gemini Pro.

- Reka Edge outperforms comparable models such as Llama 2 7B and Mistral 7B on standard language benchmarks, making it suited to resource-constrained environments.

Main AI News:

In response to growing demand for capable language and vision models, Reka has unveiled Reka Flash, a multimodal and multilingual language model. Alongside it comes Reka Edge, a smaller model with 7B trainable parameters that performs strongly across various language and vision benchmarks. Reka Edge addresses the challenge of achieving high efficiency across diverse tasks and languages with limited computational resources.

Established models such as Gemini Pro, GPT-3.5, and Llama 2 70B serve as the key reference points for comparison. Reka Flash is the turbo-class model and Reka Edge the compact variant. Both are pretrained on text from over 32 languages and evaluated on benchmarks covering language understanding, reasoning, multilingual tasks, and multimodal interactions. Notably, Reka Flash rivals leading models on language benchmarks and vision tasks, while Reka Edge targets local deployments with resource constraints.

Reka Flash is further tuned for chat through instruction tuning and reinforcement learning via Proximal Policy Optimization (PPO). Evaluated in both text-only and multimodal chat settings, it is competitive with models such as GPT-4, Claude, and Gemini Pro. Meanwhile, Reka Edge, tailored for local deployments, outperforms comparable models like Llama 2 7B and Mistral 7B on standard language benchmarks, suggesting better efficiency in resource-constrained environments.
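
For readers unfamiliar with PPO, the clipped surrogate objective at its core can be sketched in a few lines. This is the generic textbook form of the objective, not Reka's training code, which the announcement does not describe. The function name `ppo_clipped_objective`, the `clip_eps` default of 0.2, and the toy inputs are assumptions made for illustration.

```python
import math


def ppo_clipped_objective(new_logps, old_logps, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate objective over a batch of sampled actions.

    new_logps / old_logps: log-probabilities of the same sampled actions
    (for a chat model, tokens) under the updated and the previous policy.
    advantages: advantage estimates for those actions.
    All names and defaults here are illustrative assumptions.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_logps, old_logps, advantages):
        # Probability ratio pi_new(a|s) / pi_old(a|s), computed in log space.
        ratio = math.exp(new_lp - old_lp)
        # Keep the ratio inside [1 - eps, 1 + eps].
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


if __name__ == "__main__":
    # Toy batch: the new policy raises the probability of a good action
    # and lowers the probability of a bad one.
    print(ppo_clipped_objective([-0.5, -2.0], [-1.0, -1.5], [1.0, -1.0]))
```

The clipping removes any incentive to move the updated policy far from the one that generated the samples, which is one reason PPO is a common choice for tuning chat models against a reward signal.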

Conclusion:

The unveiling of Reka Flash by Reka AI marks a notable advance in multimodal language models, offering solutions for diverse language and vision tasks. Given the performance and efficiency reported for both Reka Flash and Reka Edge, Reka AI is well positioned to capture market share and influence standards in natural language processing and computer vision. Competitors will need to innovate rapidly to keep pace with Reka AI’s technological advancements and cater to the evolving needs of the market.