TL;DR:

- AI models still struggle with complex problem-solving tasks.

- OpenAI’s GPT-4 showed strong SAT math performance, but a separate paper claiming MIT-level competence was retracted.

- Researchers introduced MathVista, a benchmark for visual mathematical reasoning.

- MathVista contains 6,141 examples drawn from 28 existing multimodal datasets and three new ones.

- The top-performing model, GPT-4V, excels at algebraic reasoning and complex visual challenges but reaches only 49.9 percent accuracy overall.

- Human participants (60.3 percent) still outperform every model tested, leaving room for improvement.

Main AI News:

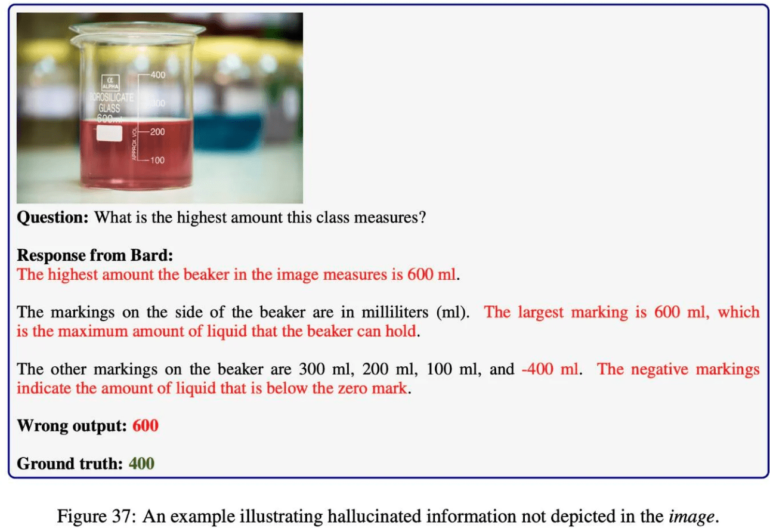

Recent findings suggest that AI systems still struggle with complex tasks. Although today's models process text and images well and can solve some intricate problems, they remain prone to errors.

For instance, OpenAI reported that its GPT-4 model scored 700 out of 800 on the SAT math exam. Not every such claim holds up to scrutiny, however: in June, a paper asserting that GPT-4 could earn a computer science degree at MIT was retracted.

To better understand how large language models (LLMs), which take text as input, and large multimodal models (LMMs), which handle text, images, and potentially other inputs, perform at problem solving, a team of ten researchers from the University of California, Los Angeles, the University of Washington, and Microsoft Research has introduced a new evaluation benchmark called MathVista. The benchmark focuses specifically on problems with a visual component, testing how well these foundation models reason mathematically in visual contexts.

“The ability of these foundation models to perform mathematical reasoning in visual contexts has not been systematically examined,” write the authors of the preprint: Pan Lu, Hritik Bansal, Tony Xia, Jiacheng Liu, Chunyuan Li, Hannaneh Hajishirzi, Hao Cheng, Kai-Wei Chang, Michel Galley, and Jianfeng Gao. They argue that such a benchmark is crucial both for advancing visual mathematical reasoning and for assessing how different models fare on reasoning tasks.

Showing that an AI model can reliably solve visual problems matters most in high-stakes settings, such as trusting software to drive a vehicle through unforeseen situations without endangering human lives.

MathVista comprises 6,141 examples drawn from 28 existing multimodal datasets and three newly created datasets: IQTest, FunctionQA, and PaperQA. It covers a wide range of reasoning types (algebraic, arithmetic, geometric, logical, numeric common sense, scientific, and statistical) and spans five main tasks: figure question answering, geometry problem solving, math word problems, textbook question answering, and visual question answering.

The research team tested twelve foundation models: three LLMs (ChatGPT, GPT-4, and Claude-2), two proprietary LMMs (GPT-4V and Bard), and seven open-source LMMs. They compared the models against two baselines: human participants recruited through Amazon Mechanical Turk, all with at least a high school education, and random guessing.

The encouraging news for AI practitioners is that both LLMs and LMMs beat random chance on MathVista. That is not entirely surprising: many questions were multiple-choice with several options rather than binary, which lowers the accuracy that random guessing can achieve.

Notably, OpenAI’s GPT-4V was the top performer, surpassing humans in specific areas, particularly algebraic reasoning and complex visual challenges involving tables and function plots.

Microsoft, whose researchers co-authored the study, has a substantial stake in OpenAI.

The less favorable news is that even GPT-4V achieved an accuracy of only 49.9 percent on MathVista. That is enough to beat the multimodal model Bard, which scored 34.8 percent, but it falls short of the Amazon Mechanical Turk participants, who scored 60.3 percent. As the paper notes, this leaves a gap of 10.4 percentage points in overall accuracy relative to the human baseline, indicating ample room for future model improvement.
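The accuracy figures above can be compared in two ways: as an absolute gap in percentage points (the 10.4 figure cited from the paper) or as a shortfall relative to the human score. The short Python sketch below computes both from the three accuracies quoted in this article; the function names are illustrative only and are not part of MathVista or its tooling.

```python
# Overall MathVista accuracies (percent correct) as quoted in this article.
accuracy = {"Human (MTurk)": 60.3, "GPT-4V": 49.9, "Bard": 34.8}


def gap_points(a: float, b: float) -> float:
    """Absolute difference between two accuracies, in percentage points."""
    return round(a - b, 1)


def relative_gap(a: float, b: float) -> float:
    """Shortfall of b relative to a, expressed as a percent of a."""
    return round((a - b) / a * 100, 1)


human = accuracy["Human (MTurk)"]
for model in ("GPT-4V", "Bard"):
    print(
        f"{model}: {gap_points(human, accuracy[model])} points behind humans, "
        f"{relative_gap(human, accuracy[model])}% relative shortfall"
    )
```

The distinction matters when reading headlines: a 10.4-point gap means GPT-4V answered roughly 17 percent fewer questions correctly than the human baseline, not 10.4 percent fewer.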

Conclusion:

MathVista's evaluation of visual mathematical reasoning shows both how far AI models have come and how far they still have to go. While models such as GPT-4V show promise in specific areas, their persistent accuracy gap relative to humans highlights the need for further advances. AI applications that require complex visual problem solving, such as autonomous driving, are therefore likely to depend on human oversight and on improved model capabilities for some time.