TL;DR:

- SliceGPT, developed by ETH Zurich and Microsoft, tackles challenges in Large Language Model (LLM) deployment.

- Sparsification methods can optimize LLMs, but often introduce complexities.

- SliceGPT introduces a novel approach, reducing network embedding dimensions for faster inference.

- It leverages computational invariance in transformer networks, particularly focusing on RMSNorm operations.

- Empirical evidence shows SliceGPT outperforms SparseGPT, offering significant speed improvements.

- SliceGPT can compress LLMs, like LLAMA-2 70B, OPT 66B, and Phi-2, by up to 25% while maintaining high task performance.

- This innovation enables LLMs to run on fewer GPUs, reducing compute requirements during inference.

- SliceGPT’s potential impact on the market lies in improving the efficiency of deep learning models and inspiring new research insights.

Main AI News:

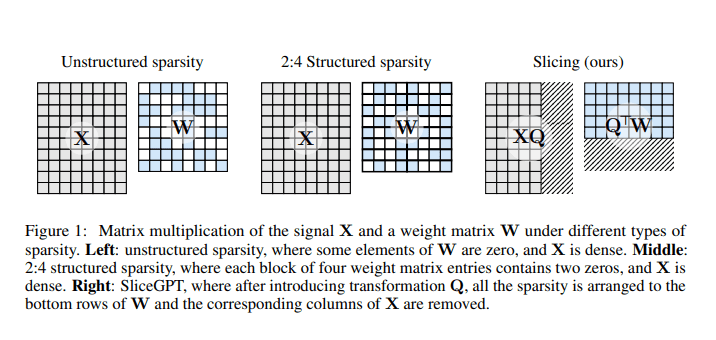

Researchers at ETH Zurich and Microsoft have unveiled SliceGPT, a pioneering solution designed for the efficient compression of Large Language Models (LLMs). These LLMs, exemplified by GPT-4, demand substantial computational power and memory resources, making their deployment a formidable challenge. While various sparsification techniques have emerged to alleviate these demands, they often introduce complexities that hinder practical implementation. These complications stem from the need for additional data structures to support sparse representations, which in turn can complicate the overall system architecture. Moreover, the potential performance enhancements from sparsification remain unrealized due to the constraints of current hardware architectures, which are primarily optimized for dense computations.

Among the methods for LLM compression are sparsification, low-rank approximation, and structured pruning. However, approaches like Optimal Brain Surgeon (OBS) are hindered by their exorbitant computational requirements. In contrast, GPTQ and SparseGPT concentrate on quantization and pruning techniques. Low-rank approximation simplifies weight matrices, while other methods propose the elimination of specific rows and columns. Techniques such as ThiNet and LLM-pruner leverage linear operations and fine-tuning strategies.

The novel contribution by researchers from ETH Zurich and Microsoft Research is SliceGPT, an ingenious post-training sparsification scheme that effectively reduces the embedding dimension of the network by substituting each weight matrix with a smaller, denser matrix. These sliced models under the SliceGPT framework operate with fewer GPUs and achieve swifter inference times without the need for additional code optimization. The approach capitalizes on computational invariance within transformer networks.

This research approach places particular emphasis on RMSNorm operations, which uphold transformation invariance, enabling the application of orthogonal transformations without altering the model’s core function. Networks utilizing LayerNorm can be transformed into RMSNorm by integrating LayerNorm’s linear components into adjacent blocks. Principal Component Analysis (PCA) plays a pivotal role in this process, identifying and projecting signals onto their principal components at each layer. Subsequently, minor components are sliced off, reducing the network’s size while maintaining performance integrity. Empirical validation has demonstrated that this technique outperforms SparseGPT, delivering substantial speed enhancements across diverse models and tasks.

SliceGPT represents a major breakthrough in the compression of LLMs such as LLAMA-2 70B, OPT 66B, and Phi-2. It adeptly trims up to 25% of model parameters, including embeddings, while preserving high task performance. This efficiency boost allows these models to operate on fewer GPUs, delivering quicker inference times without the need for additional code optimization. On both consumer and high-end GPUs, SliceGPT achieves a remarkable reduction in compute requirements during inference, reaching 64% and 66%, respectively. The research underscores that OPT models exhibit greater compressibility than LLAMA-2 models, with larger models experiencing less accuracy reduction. SliceGPT emerges as a promising avenue for mitigating the resource demands of LLMs, all without compromising their effectiveness.

SliceGPT introduces structured pruning as a means of reducing the inference cost of LLMs while maintaining superior performance compared to SparseGPT. Opportunities for further enhancements include exploring combined methodologies with SparseGPT, refining Q computation, and integrating complementary techniques like quantization and structural pruning. The identification of computational invariance within SliceGPT can potentially drive future research efforts aimed at enhancing the efficiency of deep learning models and inspiring novel theoretical insights.

Conclusion:

SliceGPT’s innovative approach to LLM compression promises to reshape the market by enabling more efficient deployment of large language models. This breakthrough technology not only addresses the resource demands of these models but also paves the way for improved performance and accessibility, potentially accelerating the adoption of advanced language models across various industries.