TL;DR:

- Researchers at UC Berkeley use AI to reproduce music from brain activity.

- Electrodes capture auditory processing signals as patients listen to a song.

- Machine learning algorithms analyze neural patterns for pitch, tempo, vocals, and instruments.

- AI generates audio resembling the song the patients heard.

- Neural decoding highlights connections between brain regions and musical elements.

- Potential applications include aiding paralyzed patients in speech rehabilitation.

Main AI News:

Artificial intelligence (AI) has already reshaped everyday technology, and it is now moving into a new domain: decoding music directly from the brain. A recent study shows that key features of a song can be reconstructed from recorded neural activity alone.

Researchers from the University of California at Berkeley have conducted a pioneering study that uses AI to translate brain activity into recognizable music. Working from neural recordings made while patients listened to a song, the scientists reconstructed audio that resembles the original. The work is documented in a paper published in PLOS Biology and points to new possibilities at the intersection of neuroscience and AI technology.

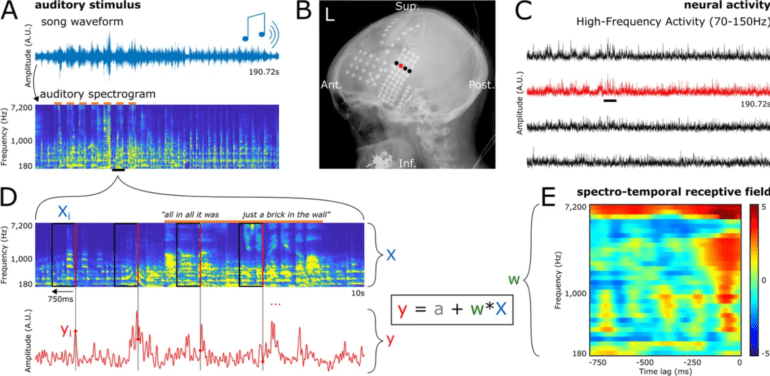

The study drew on recordings from epilepsy patients undergoing monitoring for seizure treatment. As these individuals passively listened to a classic rock anthem, electrodes placed on the cortical surface captured the auditory processing activity in their brains. By combining AI algorithms with this brain data, the research team built a bridge between cerebral activity and musical output.

Diving deeper into the mechanics, the AI models picked out distinct neural responses within the auditory cortex to elements like pitch, tempo, vocals, and instrumental interplay. From these responses, the models produced spectrograms (time-frequency representations of sound) using only the neural signals. Converted into audio waveforms, these spectrograms bore a recognizable resemblance to “Another Brick in the Wall, Part 1” by Pink Floyd.
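To make the decoding idea concrete, here is a minimal toy sketch of mapping electrode activity to spectrogram values with a regression model. It is not the researchers' pipeline: the data are synthetic, ridge regression is used here as a simple stand-in for whatever models the study employed, and every name (`simulate`, `fit_ridge`, `decode_demo`, the electrode and frequency-bin counts) is a hypothetical choice for illustration. The final step of turning a spectrogram back into an audio waveform is left out.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: weights W such that X @ W approximates Y."""
    n_features = X.shape[1]
    gram = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(gram, X.T @ Y)

def column_correlations(A, B):
    """Pearson correlation between matching columns of A and B."""
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    denom = np.linalg.norm(A, axis=0) * np.linalg.norm(B, axis=0)
    return (A * B).sum(axis=0) / denom

def simulate(n_samples=2000, n_electrodes=40, n_freq_bins=16, noise=0.5, seed=0):
    """Synthetic stand-in data (an assumption, not real recordings):
    each electrode is a noisy linear mix of the spectrogram's frequency bins."""
    rng = np.random.default_rng(seed)
    spectrogram = rng.standard_normal((n_samples, n_freq_bins))
    mixing = rng.standard_normal((n_freq_bins, n_electrodes))
    neural = spectrogram @ mixing
    neural += noise * rng.standard_normal((n_samples, n_electrodes))
    return neural, spectrogram

def decode_demo():
    """Fit on the first part of the 'song', evaluate on the held-out remainder."""
    neural, spectrogram = simulate()
    split = 1500
    weights = fit_ridge(neural[:split], spectrogram[:split], alpha=10.0)
    predicted = neural[split:] @ weights
    # One correlation per frequency bin between decoded and true spectrogram.
    return column_correlations(predicted, spectrogram[split:])

if __name__ == "__main__":
    r = decode_demo()
    print(f"mean held-out correlation: {r.mean():.2f}")
```

Scoring the decoder on a held-out stretch of the signal, rather than on the data it was fitted to, is what lets a correlation like this count as evidence that the neural activity actually carries information about the sound.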

Beyond mere resemblance, the technique revealed associations between neural responses and specific musical components. Activity in the superior temporal gyrus tracked vocal syllables, while other regions of the brain followed the rhythm of the song’s guitar motifs. This makes it possible to break complex musical stimuli down into their component parts using brain recordings alone.

The implications are staggering, transcending the realm of music and igniting profound possibilities for the future. Dr. Robert Knight, the visionary neuroscientist from UC Berkeley, noted that the selection of Pink Floyd’s composition was deliberate, owing to its intricate orchestration. However, this innovation’s canvas stretches far beyond a single piece; it extends to encompass music of diverse genres and even the melodic nuances nestled within natural speech.

Looking ahead, the potential ramifications are significant. Once refined and validated through further research, this technology holds the promise of helping individuals who have lost the ability to speak due to paralysis or stroke. The researchers envision a future in which thoughts are translated into speech directly from neural activity. Brain-computer interfaces, already a growing field, could become conduits for thought-driven communication that carries melody and emotional prosody as well as words.

Conclusion:

The fusion of AI and neural decoding unveils a groundbreaking avenue for music reproduction directly from brain activity. This innovation has far-reaching implications for the market, potentially revolutionizing speech rehabilitation and brain-computer interfaces, opening new horizons for assistive technologies. The convergence of neuroscience and AI stands poised to redefine how we interact with music and decode cognitive processes.