TL;DR:

- Researchers from ByteDance and the King Abdullah University of Science and Technology introduced a groundbreaking framework for animating hair in still portrait photos.

- Dynamic elements, like hair in motion, enhance the appeal of images, particularly for social media sharing.

- Cinemagraphs, a favored format among professionals, seamlessly blend still images and motion, captivating viewers.

- Existing methods excel at animating fluids such as water, smoke, and fire but fall short in capturing the intricacies of human hair.

- Animating hair in static photos is challenging due to the complexity of hair structures and dynamics.

- The innovative AI method automates hair animation by focusing on “hair wisps” instead of individual strands.

- Framing hair wisp extraction as an instance segmentation problem simplifies the task, and the researchers built a dedicated dataset to train the networks.

- The paper presents sample results that outperform existing techniques.

Main AI News:

In the realm of visual storytelling, the mesmerizing allure of dynamic elements has always held a special place. In today’s digital age, where social media platforms like TikTok and Instagram serve as canvases for self-expression, the quest for creating visually captivating content knows no bounds. Researchers from ByteDance and the King Abdullah University of Science and Technology have embarked on a pioneering journey to breathe life into still portrait photos by animating the most intricate of human features – hair.

The dynamic nature of hair has long been a source of fascination, as it possesses the power to transform static images into living, breathing works of art. Recent advancements in the world of visual effects have seen the successful animation of various fluid substances like water, smoke, and fire within static frames. However, the intricate dance of human hair in real-life photographs has remained overlooked terrain until now.

The research explores the artistic metamorphosis of human hair within the realm of portrait photography. The primary objective is to turn a single photograph into a captivating cinemagraph, where subtle hair motions infuse life into the image while the rest of the frame stays still. The result is a visual masterpiece that beckons the viewer to linger, to explore, and to engage.

Cinemagraphs, an innovative short video format, have gained immense popularity among professional photographers, advertisers, and artists alike. They seamlessly blend the best of both worlds – the tranquility of still images with the allure of motion. Certain elements within a cinemagraph gracefully sway in a perpetual dance, while the rest remains tranquil, creating a mesmerizing juxtaposition that holds the viewer’s gaze.

Yet, the challenge lies in the transformation of a static portrait into a cinemagraph, where the hair becomes the protagonist of the story. Conventional techniques and commercial software excel at generating cinemagraphs from video sources, but they falter when faced with still images. The animation of human hair in portrait photos, with its fibrous intricacies, remains uncharted territory.

The complexity of animating hair within a static portrait photo becomes evident when we confront the intricate nature of hair structures and dynamics. Unlike the smooth surfaces of the human body or face, hair comprises a multitude of individual strands, creating complex and non-uniform structures. This intricacy gives rise to complex motion patterns within the hair, including interactions with the subject’s head. While techniques like dense camera arrays and high-speed cameras have been employed for hair modeling, their cost and time requirements render them impractical for real-world applications.

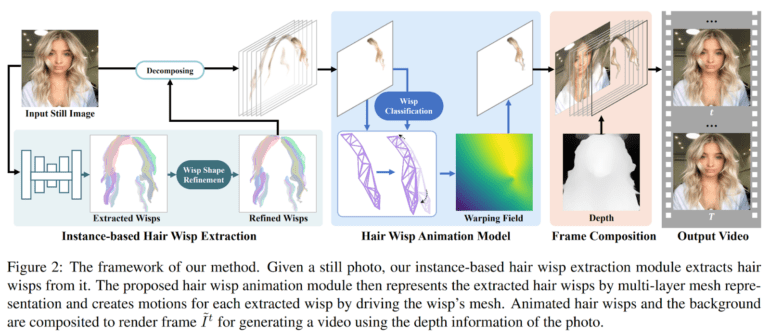

Enter the AI method presented in the paper, a beacon of hope for those seeking to infuse life into still portraits effortlessly. The approach hinges on an observation about perception: the human visual system is less sensitive to individual hair strands and their movements in real portrait videos than to their digitized counterparts in virtual environments. The solution is to animate “hair wisps” instead of individual strands, which is enough to create a visually pleasing and immersive experience. To that end, the researchers introduce a dedicated hair wisp animation module, offering an efficient and automated solution.
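To make the wisp-level idea concrete, here is a minimal Python sketch of what driving a few wisps, rather than thousands of strands, might look like. It is not the paper's animation module: the sinusoidal motion, the `wisp_offset` and `loop_offsets` helpers, and the amplitude and phase values are all assumptions chosen for illustration. Each wisp gets one horizontal offset per frame, and the motion is periodic so the clip can loop like a cinemagraph.

```python
import math
from typing import Dict, List, Tuple


def wisp_offset(t: int, num_frames: int, amplitude: float, phase: float) -> float:
    """Horizontal offset (in pixels) of one wisp at frame t.

    Assumption: a sine wave with period num_frames stands in for real
    hair motion, so frame num_frames matches frame 0 and the clip loops.
    """
    return amplitude * math.sin(2 * math.pi * t / num_frames + phase)


def loop_offsets(
    num_frames: int, wisps: Dict[int, Tuple[float, float]]
) -> List[Dict[int, float]]:
    """Per-frame offsets for every wisp; wisps maps id -> (amplitude, phase)."""
    return [
        {wid: wisp_offset(t, num_frames, amp, ph) for wid, (amp, ph) in wisps.items()}
        for t in range(num_frames)
    ]


# Hypothetical wisps: id -> (amplitude in pixels, phase in radians).
WISPS = {1: (2.0, 0.0), 2: (1.5, 0.8), 3: (1.0, 1.6)}
frames = loop_offsets(24, WISPS)

for t in (0, 6, 12):
    print(t, {wid: round(dx, 2) for wid, dx in frames[t].items()})
```

Only three values change per frame here, one per wisp, while every pixel outside the wisps would stay untouched. That is the practical appeal of working at wisp granularity.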

The pivotal challenge in this endeavor is the extraction of these elusive hair wisps. While previous work in hair modeling has concentrated on hair segmentation, it often missed the mark, focusing on the entire hair region rather than the distinct wisps. Here, the researchers take a novel approach by framing hair wisp extraction as an instance segmentation problem, where each segment within a still image represents a unique hair wisp. This ingenious shift in perspective not only simplifies the extraction process but also empowers the use of advanced networks for precise results.
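The difference between segmenting the whole hair region and segmenting individual wisps can be illustrated with a toy example. The Python sketch below uses a small hand-written label map as a stand-in for a network's output; the `LABELS` grid and both helper functions are hypothetical and do not reproduce the researchers' model. Semantic hair segmentation yields a single mask, while instance segmentation yields one pixel set per wisp.

```python
from typing import Dict, List, Set, Tuple

Pixel = Tuple[int, int]

# Toy 4x6 label map (hypothetical): 0 = background, 1..3 = individual wisps.
# In practice this would come from an instance segmentation network.
LABELS: List[List[int]] = [
    [0, 1, 1, 2, 2, 0],
    [0, 1, 1, 2, 3, 3],
    [0, 1, 2, 2, 3, 3],
    [0, 0, 2, 0, 3, 0],
]


def semantic_hair_mask(labels: List[List[int]]) -> List[List[int]]:
    """Single binary mask covering the whole hair region."""
    return [[1 if v != 0 else 0 for v in row] for row in labels]


def wisp_instances(labels: List[List[int]]) -> Dict[int, Set[Pixel]]:
    """One set of (row, col) pixels per wisp id."""
    instances: Dict[int, Set[Pixel]] = {}
    for r, row in enumerate(labels):
        for c, v in enumerate(row):
            if v != 0:
                instances.setdefault(v, set()).add((r, c))
    return instances


hair = semantic_hair_mask(LABELS)
wisps = wisp_instances(LABELS)

print("hair pixels:", sum(map(sum, hair)))
print("pixels per wisp:", {wid: len(px) for wid, px in sorted(wisps.items())})
```

With the per-wisp sets in hand, each wisp can be moved independently, which the single hair mask does not allow.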

Moreover, the paper describes the development of a hair wisp dataset, comprising real portrait photos, to train the networks effectively. A semi-annotation scheme is introduced to produce ground-truth annotations for the hair wisps, supporting the accuracy and reliability of the model’s output. The paper also presents sample results that outperform state-of-the-art techniques.

Conclusion:

The collaboration between ByteDance and the King Abdullah University of Science and Technology brings forth a revolutionary framework for animating hair in still portrait photos. This breakthrough not only redefines the possibilities of visual storytelling but also paves the way for a new era of captivating and engaging content creation. As we bear witness to the fusion of art and technology, the future of dynamic portraiture looks brighter than ever.