TL;DR:

- Researchers from Google and John Hopkins University introduced an innovative method for faster text-to-image generation.

- Diffusion models have been dominant but slow, requiring 20-200 sample steps for high-quality images.

- Distillation techniques speed up sampling to 4-8 steps without compromising quality.

- A streamlined single-stage distillation process outperforms traditional two-stage methods.

- The approach eliminates the need for original data and enhances visual quality.

- Parameter-efficient distillation techniques are introduced, making conditional generation more efficient.

Main AI News:

In the realm of AI-driven generative tasks, the fusion of Google’s expertise and John Hopkins University’s research acumen has unveiled a game-changing breakthrough. Researchers have devised an innovative method to expedite and enhance text-to-image generation, surmounting the limitations of diffusion models. In the following pages, we delve into their pioneering approach, which promises to revolutionize the landscape of AI-driven image synthesis.

Overcoming Diffusion Model Limitations

Text-to-image diffusion models, powered by extensive datasets, have consistently delivered high-quality and diverse outputs. These models have asserted their dominance across a spectrum of generative tasks. A recent development, however, has ushered in a new era. Image-to-image transformations, encompassing tasks like image alteration, enhancement, and super-resolution, now leverage the power of diffusion models with external image conditions. This novel approach has significantly boosted the visual quality of generated images during conditional picture production. Yet, it comes at a cost – the iterative refinement process inherent in diffusion models can be time-consuming, particularly for high-resolution image synthesis.

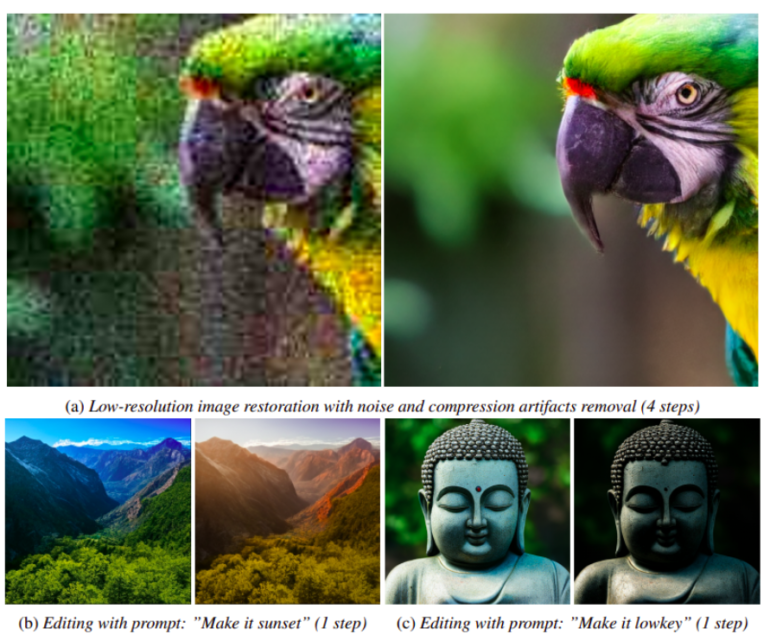

Even with advanced sampling techniques, state-of-the-art text-to-image latent diffusion models often demand 20 to 200 sample steps to achieve optimal visual quality. This prolonged sampling period limits the practical applicability of conditional diffusion models. Recognizing this bottleneck, recent efforts have harnessed distillation techniques to expedite sampling, reducing it to a mere 4 to 8 steps without compromising generative performance. Furthermore, these techniques have been applied to distill large-scale text-to-image diffusion models that were previously trained.

The Power of Distillation

The distilled models produced through these techniques exhibit remarkable prowess in various conditional tasks, showcasing the potential of this approach to replicate diffusion priors within a condensed sampling timeframe. Building upon these distillation methods, a two-stage distillation process—comprising distillation-first or conditional finetuning-first—has emerged. When subjected to the same sampling period, both techniques consistently outperform undistilled conditional diffusion models. However, they offer distinct advantages in terms of cross-task flexibility and learning complexity.

This work introduces an innovative distillation method for extracting a conditional diffusion model from an already-trained unconditional diffusion model. Departing from the traditional two-stage approach, this streamlined process begins with unconditional pretraining and culminates in the creation of a distilled conditional diffusion model.

Unveiling Efficiency: A Single-Stage Triumph

This streamlined learning approach eliminates the need for the original text-to-image data, a requirement in earlier distillation processes. Additionally, it sidesteps the common pitfall of compromising the diffusion prior in the pre-trained model, a drawback often associated with the finetuning-first method’s initial stage. Extensive experimental data confirms that this distilled model outperforms earlier distillation techniques in both visual quality and quantitative performance when given the same sample time.

The Path to Parameter Efficiency

Another frontier that beckons further exploration is parameter-efficient distillation techniques for conditional generation. The researchers present a novel distillation mechanism that excels in this regard, requiring only a modest increase in learnable parameters. This mechanism can swiftly convert an unconditional diffusion model into a powerful tool for conditional tasks. Its unique formulation seamlessly integrates with established parameter-efficient tuning techniques, such as T2I-Adapter and ControlNet. By harnessing the newly added learnable parameters of the conditional adaptor alongside the frozen parameters of the original diffusion model, this distillation technique excels at reproducing diffusion priors for dependent tasks with minimal iterative revisions. This innovative paradigm has significantly elevated the utility of numerous conditional tasks.

Conclusion:

The collaborative efforts of Google and John Hopkins University have birthed a paradigm-shifting advancement in the realm of text-to-image generation. This groundbreaking distillation technique not only accelerates the process but also preserves and enhances the quality of generative outcomes. As we delve deeper into the intricacies of this innovation, it becomes evident that the future of AI-driven image synthesis is set to be more efficient and versatile than ever before.