TL;DR:

- The collaboration of experts from Google, Carnegie Mellon University, and Bosch Center for AI introduces a pioneering method for enhancing adversarial robustness in deep learning models.

- Key takeaways include effortless robustness using pretrained models, breakthrough results with denoised smoothing, practicality without complex fine-tuning, and open access for research replication.

- The Diffusion Denoised Smoothing (DDS) approach combines pretrained denoising diffusion models with high-accuracy classifiers, bridging image generation and adversarial robustness.

- Notable performance on ImageNet: 71% certified accuracy under 2-norm bounded adversarial perturbations, a 14 percentage point improvement over prior certified methods.

- Applications span autonomous vehicles, cybersecurity, healthcare, and financial services, enhancing safety, threat detection, diagnostic accuracy, and fraud detection.

Main AI News:

In a remarkable collaboration, experts from Carnegie Mellon University, Google, and Bosch Center for AI have joined forces to revolutionize AI security. Their pioneering approach focuses on simplifying the process of enhancing the adversarial robustness of deep learning models, bringing about significant advancements with profound practical implications. Here, we delve into the key takeaways from this groundbreaking research:

- Effortless Robustness with Pretrained Models: This research introduces a streamlined method that achieves top-tier adversarial robustness against 2-norm bounded perturbations, all while utilizing readily available pretrained models. This innovation dramatically simplifies the task of fortifying models against adversarial threats.

- Breakthrough with Denoised Smoothing: By combining a pretrained denoising diffusion probabilistic model with a high-accuracy classifier, the research team achieved a remarkable 71% certified accuracy on ImageNet under 2-norm bounded adversarial perturbations. This result represents a substantial 14 percentage point improvement over prior certified methods.

- Practicality and Accessibility: What sets this approach apart is its practicality. It does not require complex fine-tuning or retraining, making it highly accessible for various applications, especially those necessitating defense against adversarial attacks.

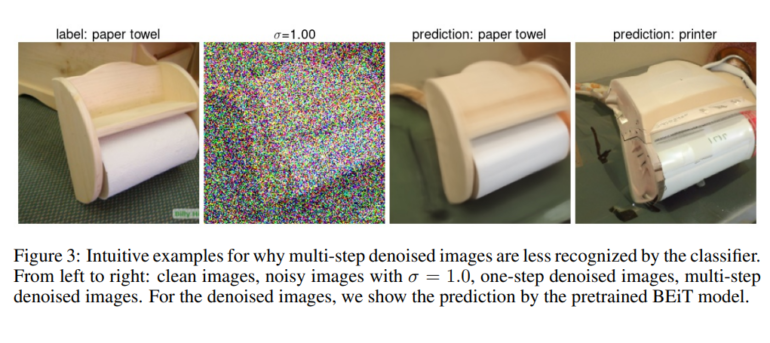

- Denoised Smoothing Technique Explained: Randomized smoothing classifies copies of the input to which Gaussian noise has been added. Denoised smoothing handles each noisy copy in two steps: first, a denoiser model removes the added noise; then, a classifier determines the label for the denoised input. This makes it possible to apply randomized smoothing to pretrained classifiers that were never trained on noisy inputs.

- Leveraging Denoising Diffusion Models: The research underscores the suitability of denoising diffusion probabilistic models, renowned in image generation, for the denoising step in defense mechanisms. These models excel in recovering high-quality denoised inputs from noisy data distributions.

- Proven Efficacy on Major Datasets: This method showcases impressive results on ImageNet and CIFAR-10, surpassing earlier custom-trained denoisers even under stringent perturbation bounds.

- Open Access and Reproducibility: Emphasizing transparency and further research, the researchers provide a link to a GitHub repository containing all necessary code for experiment replication.
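The two-step procedure described above (denoise, then classify) can be illustrated with a short sketch of a smoothed prediction. This is a minimal illustration, not the researchers' released code: `smoothed_predict`, `toy_denoise`, and `toy_classify` are hypothetical names, and the toy denoiser and classifier merely stand in for a pretrained diffusion model and a pretrained image classifier.

```python
import numpy as np

def smoothed_predict(x, denoise, classify, sigma, n_samples, num_classes, rng):
    """Majority vote of classify(denoise(x + Gaussian noise)) over n_samples draws."""
    counts = np.zeros(num_classes, dtype=int)
    for _ in range(n_samples):
        noisy = x + sigma * rng.standard_normal(x.shape)  # smoothing noise
        label = classify(denoise(noisy))                  # step 1: denoise, step 2: classify
        counts[label] += 1
    return int(np.argmax(counts)), counts

# Hypothetical stand-ins (assumptions for illustration only).
def toy_denoise(x_noisy):
    # Crude "denoiser": replace every pixel by the image mean.
    return np.full_like(x_noisy, x_noisy.mean())

def toy_classify(x):
    # Two-class toy rule: bright images are class 1, dark images are class 0.
    return int(x.mean() > 0.5)

rng = np.random.default_rng(0)
x = np.full((8, 8), 0.9)  # a bright toy "image"
label, counts = smoothed_predict(
    x, toy_denoise, toy_classify, sigma=0.25, n_samples=200, num_classes=2, rng=rng
)
print(label, counts)
```

A full certification procedure would additionally turn the vote counts into a statistical lower bound on the top-class probability and derive a certified radius from it; that part is omitted here.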

Now, let’s take a closer look at the detailed analysis of this research and explore its potential real-world applications. In the rapidly evolving landscape of deep learning models, ensuring adversarial robustness is paramount to guarantee the reliability of AI systems in the face of deceptive inputs. This aspect of AI research carries significant weight across various domains, ranging from autonomous vehicles to data security, where the integrity of AI interpretations holds utmost importance.

One pressing challenge is the vulnerability of deep learning models to adversarial attacks, subtle manipulations of input data that often go unnoticed by human observers but can lead to erroneous model outputs. These vulnerabilities pose substantial threats, especially in contexts where both security and accuracy are critical. The objective is to develop models capable of maintaining accuracy and reliability even when confronted with meticulously crafted perturbations.

Earlier certified defenses against adversarial attacks, such as bound propagation and randomized smoothing, are effective but often demand intricate, resource-intensive training procedures, which has limited their broader application.

The current research introduces a game-changing approach known as Diffusion Denoised Smoothing (DDS), representing a significant shift in the realm of adversarial robustness. This method ingeniously combines pretrained denoising diffusion probabilistic models with standard high-accuracy classifiers. The innovation lies in harnessing existing, high-performance models, bypassing the need for extensive retraining or fine-tuning. This strategy not only enhances efficiency but also broadens the accessibility of robust adversarial defense mechanisms.

The DDS approach combats adversarial attacks by adding Gaussian noise to the input, as randomized smoothing requires, and then applying a sophisticated denoising process. This process entails reversing a diffusion process, typically used in state-of-the-art image generation techniques, to recover a close estimate of the original, clean data. By removing the added noise, it prepares the input for accurate classification by a standard classifier. The application of diffusion techniques, traditionally confined to image generation, to the realm of adversarial robustness is a notable innovation that bridges two distinct areas of AI research.
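To make the link between smoothing noise and a diffusion model concrete, here is a hedged sketch. It assumes the common diffusion convention x_t = sqrt(alpha_bar_t) * x + sqrt(1 - alpha_bar_t) * noise, under which an input carrying Gaussian noise of standard deviation sigma matches the timestep where sigma^2 = (1 - alpha_bar_t) / alpha_bar_t. The linear beta schedule, the function names, and the `predict_noise` callable are all assumptions for illustration; a real setup would use a pretrained model and its own schedule.

```python
import numpy as np

def linear_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal fraction alpha_bar[t] for an assumed linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def timestep_for_sigma(sigma, alpha_bar):
    """Index t whose ratio (1 - alpha_bar[t]) / alpha_bar[t] is closest to sigma**2."""
    ratio = (1.0 - alpha_bar) / alpha_bar
    return int(np.argmin(np.abs(ratio - sigma ** 2)))

def one_shot_denoise(x_noisy, sigma, alpha_bar, predict_noise):
    """Estimate the clean input from x_noisy = x + sigma * noise in one reverse step."""
    t = timestep_for_sigma(sigma, alpha_bar)
    a = alpha_bar[t]
    x_t = np.sqrt(a) * x_noisy        # rescale to the diffusion model's convention
    eps_hat = predict_noise(x_t, t)   # hypothetical noise-prediction network
    return (x_t - np.sqrt(1.0 - a) * eps_hat) / np.sqrt(a)

# Demo with an oracle noise predictor that returns the true noise,
# standing in for a pretrained diffusion model (an assumption).
alpha_bar = linear_alpha_bar()
t0 = 300
sigma = float(np.sqrt((1.0 - alpha_bar[t0]) / alpha_bar[t0]))
rng = np.random.default_rng(0)
x_clean = rng.uniform(-1.0, 1.0, size=(4, 4))
noise = rng.standard_normal(x_clean.shape)
x_noisy = x_clean + sigma * noise
x_hat = one_shot_denoise(x_noisy, sigma, alpha_bar, lambda x_t, t: noise)
print(timestep_for_sigma(sigma, alpha_bar), np.allclose(x_hat, x_clean))
```

With a perfect noise predictor the clean input is recovered exactly; a real diffusion model only approximates the noise, so the output is an estimate of the clean image rather than an exact reconstruction.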

The performance on the ImageNet dataset stands out, with the DDS method achieving a remarkable 71% certified accuracy under 2-norm bounded adversarial perturbations. This achievement signifies a 14 percentage point improvement over previous state-of-the-art certified methods. Such a leap in performance underscores the method’s ability to maintain high accuracy even in the face of adversarial perturbations.
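A gain of 14 percentage points is not the same as a 14% relative gain. The short calculation below uses only the two figures reported in the article; the implied prior accuracy is derived from them by subtraction and is not a separately reported number.

```python
dds_accuracy = 71.0  # percent, as reported in the article
gain_points = 14.0   # percentage points, as reported in the article

implied_baseline = dds_accuracy - gain_points            # prior accuracy implied by the two figures
relative_gain = 100.0 * gain_points / implied_baseline   # gain relative to that baseline

print(f"implied prior accuracy: {implied_baseline:.0f}%")  # prints 57%
print(f"relative improvement: {relative_gain:.1f}%")       # prints 24.6%
```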

Overall, this research represents a significant advancement in adversarial robustness by cleverly combining existing denoising and classification techniques. The DDS method offers a more efficient and accessible approach to achieving robustness against adversarial attacks, setting a new benchmark in the field and paving the way for streamlined and effective adversarial defense strategies.

The applications of this innovative approach to adversarial robustness in deep learning models span various sectors:

- Autonomous Vehicle Systems: Enhancing safety and decision-making reliability by improving resistance to adversarial attacks that could mislead navigation systems.

- Cybersecurity: Strengthening AI-based threat detection and response systems, making them more effective against sophisticated cyber attacks designed to deceive AI security measures.

- Healthcare Diagnostic Imaging: Increasing the accuracy and reliability of AI tools used in medical diagnostics and patient data analysis, ensuring robustness against adversarial perturbations.

- Financial Services: Bolstering fraud detection, market analysis, and risk assessment models in finance, maintaining integrity and effectiveness against adversarial manipulation in financial predictions and analyses.

These applications demonstrate the potential of leveraging advanced robustness techniques to enhance the security and reliability of AI systems in critical and high-stakes environments.

Conclusion:

This groundbreaking approach to adversarial robustness holds immense promise for the AI market. By simplifying the process and achieving remarkable results without extensive retraining, it paves the way for more efficient and accessible AI defense mechanisms. The potential applications in autonomous vehicles, cybersecurity, healthcare, and financial services represent a significant market opportunity, as businesses seek to enhance the security and reliability of AI systems in critical domains.