TL;DR:

- Researchers propose the “Chiplet Cloud” architecture for AI supercomputing.

- Purpose-built ASIC chips and a 2D torus structure form the backbone of the architecture.

- Chiplet Cloud achieves a 94x improvement in total cost of ownership (TCO) per generated token compared to a cloud of general-purpose GPUs.

- Compared with Google’s TPUv4, the improvement in TCO per token is 15x.

- Cost savings are significant, with an estimated $35 million price tag compared to $40 billion for an equivalent GPU cluster.

- Customized chips with large on-chip SRAM improve efficiency and relieve the memory bottleneck that limits GPUs.

- The chiplet cloud offers flexibility and can be tailored to meet specific software and hardware requirements.

- Its cost-effectiveness, adaptability, and potential for significant efficiency gains make it an attractive option for AI-first companies.

- Microsoft’s research in chiplet cloud architecture could position them favorably against competitors like AWS and GCP.

- If implemented in Azure, it could enhance the capabilities of OpenAI’s APIs and Azure OpenAI service.

Main AI News:

The field of AI compute is experiencing a remarkable resurgence, fueled by the explosive growth of generative AI. Concurrently, researchers are actively exploring alternative methods to accelerate AI workloads. In a collaborative effort between the University of Washington and Microsoft, a groundbreaking solution has emerged, promising a more efficient approach to serving LLM workloads.

The outcome of their efforts is a paper titled “Chiplet Cloud,” which outlines a plan to construct an AI supercomputer from chiplets. The reported gains are large: a 94x improvement in total cost of ownership (TCO) per generated token over general-purpose GPUs. Even when compared to Google’s TPUv4, which is purpose-built for AI, the new architecture shows a 15x improvement.

As previously reported by AIM, the industry as a whole is gravitating toward specialized chip design. Companies like Cerebras, SambaNova, and Graphcore have paved the way, and now the chiplet cloud emerges as a potential frontrunner for the future of AI compute in the enterprise.

Understanding the Chiplet Cloud

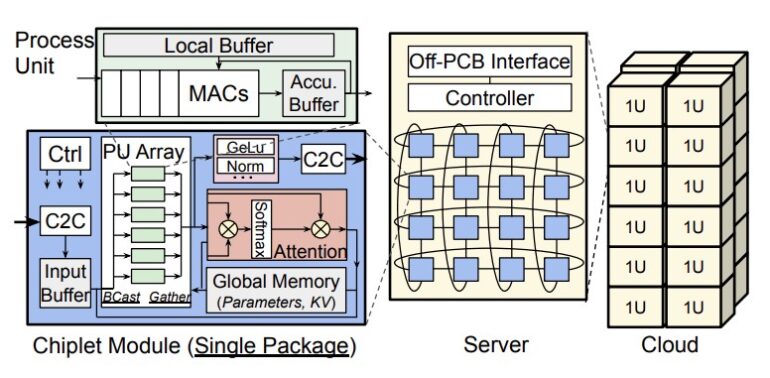

The architecture described in the paper is built around purpose-built ASIC (application-specific integrated circuit) chips, which supply its computing power. These ASICs are manufactured as chiplets, a packaging approach already used in Intel’s Meteor Lake and AMD’s Zen processors. While chipmakers typically integrate specialized accelerator blocks as a small component within their general-purpose chips, this paper proposes constructing the entire architecture from ASICs.

By developing an ASIC optimized for matrix math, which makes up the majority of AI compute workloads, the researchers reported large cost savings over GPUs. The chiplet cloud achieves a 94x improvement in total cost of ownership (TCO) per generated token compared to a cloud infrastructure relying on NVIDIA’s previous-generation A100 GPUs.
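To make the metric concrete, here is a minimal sketch of how TCO per generated token can be computed. The function names, the split into capital and operating cost, and every number in the usage note are illustrative assumptions, not figures or formulas taken from the paper.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def tco_per_token(capex_usd, opex_usd_per_year, years, tokens_per_second):
    """Total cost of ownership divided by tokens generated over the period.

    All inputs are hypothetical; the paper's own cost model may differ.
    """
    total_cost = capex_usd + opex_usd_per_year * years
    total_tokens = tokens_per_second * SECONDS_PER_YEAR * years
    return total_cost / total_tokens


def improvement(baseline_cost, candidate_cost):
    """How many times cheaper the candidate is than the baseline."""
    return baseline_cost / candidate_cost
```

For example, `improvement(tco_per_token(2e6, 5e5, 3, 1e4), tco_per_token(1e6, 2e5, 3, 5e4))` compares two made-up systems on this metric. A 94x result would mean the candidate generates each token at 1/94 of the baseline's cost.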

These cost savings primarily stem from silicon-level optimizations inherent in creating customized chips. In addition to the matrix-math units, the chip carries a large amount of SRAM (static random-access memory), providing substantial on-chip memory capacity. This attribute is particularly crucial for LLM workloads, as it allows the models to be held in fast memory.

Traditionally, GPUs have struggled with this aspect: even the fastest external memory available today fails to keep up with the demands of LLMs, creating a memory bottleneck. The chiplet cloud addresses this challenge by placing low-latency memory directly adjacent to the processing units. Consequently, the memory bottleneck is largely removed, and the chip can operate near maximum efficiency.
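A rough back-of-the-envelope sketch shows why memory bandwidth limits LLM serving. It assumes single-stream decoding in which every model weight is read once per generated token, and it ignores caches, batching, and compute time. The function name and the example figures are assumptions for illustration only.

```python
def max_tokens_per_second(param_count, bytes_per_param, bandwidth_bytes_per_s):
    """Upper bound on decode speed when weight reads are the only limit.

    Assumes each token requires streaming all weights from memory once.
    """
    model_bytes = param_count * bytes_per_param
    return bandwidth_bytes_per_s / model_bytes


# Hypothetical example: a 175-billion-parameter model stored in 2-byte
# weights, served from memory that delivers 2 TB/s.
bound = max_tokens_per_second(175e9, 2, 2e12)
print(f"bandwidth-bound decode rate: {bound:.2f} tokens/s")
```

Under these assumptions, raising memory bandwidth raises the bound proportionally, which is the motivation for keeping weights in fast on-chip SRAM.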

The chips within the chiplet cloud are interconnected in a 2D torus, which offers the flexibility needed to support various AI workloads. These features are only half of the story, however, as the primary advantage of the chiplet cloud lies in its ability to significantly reduce costs.
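For readers unfamiliar with the topology, a 2D torus is a grid whose edges wrap around, so every node has exactly four neighbors and no node sits on a boundary. The sketch below illustrates the general idea. The grid dimensions and function names are hypothetical and do not describe the paper's actual network.

```python
def torus_neighbors(x, y, width, height):
    """Return the four neighbors of node (x, y) in a width x height 2D torus."""
    return [
        ((x - 1) % width, y),   # left, wrapping past column 0
        ((x + 1) % width, y),   # right, wrapping past the last column
        (x, (y - 1) % height),  # up, wrapping past row 0
        (x, (y + 1) % height),  # down, wrapping past the last row
    ]


def torus_hops(a, b, width, height):
    """Minimum number of hops between nodes a and b, using wraparound links."""
    dx = abs(a[0] - b[0])
    dy = abs(a[1] - b[1])
    return min(dx, width - dx) + min(dy, height - dy)
```

In a 4x4 torus, opposite corners `(0, 0)` and `(3, 3)` are only two hops apart, whereas a plain 4x4 mesh without wraparound links would need six.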

The Future of AI Compute

As previously highlighted, cloud service providers have been investing substantial resources in the development of specialized AI chips. AWS introduced Inferentia and Trainium, while Google pioneered TPUs. Until recently, Microsoft seemed to lag behind in this race. However, this research effort has the potential to reshape how enterprises approach AI cloud compute.

To begin with, the estimated cost of building chiplet cloud nodes is considerably lower than that of competing hardware. The researchers estimate that building an equivalent GPU cluster would cost $40 billion, before counting the ongoing operational expenses of such powerful machines. By contrast, the chiplet cloud’s estimated cost is $35 million, making it highly competitive, especially when considering its efficiency gains. Moreover, breaking a large silicon die into chiplets improves manufacturing yield, further reducing the cost of ownership.
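The yield argument can be illustrated with the simple Poisson yield model, in which the fraction of defect-free dies falls exponentially with die area. This is a textbook approximation rather than the model used in the paper, and the defect density and die areas below are hypothetical. Packaging and inter-chiplet link costs are ignored.

```python
import math


def poisson_yield(die_area_mm2, defects_per_cm2):
    """Fraction of dies with zero defects under the Poisson yield model."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-defects_per_cm2 * area_cm2)


def silicon_per_good_system(total_area_mm2, n_chiplets, defects_per_cm2):
    """Wafer area (mm^2) consumed per working system split into n equal chiplets.

    Each chiplet is tested separately, so a defect scraps only one small die.
    """
    die_area = total_area_mm2 / n_chiplets
    return total_area_mm2 / poisson_yield(die_area, defects_per_cm2)


# Hypothetical comparison: 800 mm^2 of logic as one die vs. four chiplets,
# assuming 0.1 defects per cm^2.
monolithic = silicon_per_good_system(800, 1, 0.1)
chiplets = silicon_per_good_system(800, 4, 0.1)
print(f"monolithic: {monolithic:.0f} mm^2, four chiplets: {chiplets:.0f} mm^2")
```

Under these assumptions the four-chiplet design consumes noticeably less wafer area per working system, because a single defect discards a 200 mm² die instead of the full 800 mm².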

Furthermore, these ASICs can operate at full capacity due to the 2D torus architecture, in contrast to TPUs and GPUs, which typically achieve only 40% and 50% utilization, respectively, for LLM workloads. Additionally, the chiplets can be deployed according to the specific software and hardware requirements of each company, making it an ideal choice for cloud deployment.

The chiplet cloud’s compute type and memory capacity can be adjusted to accommodate different models. This adaptability is likely to attract AI-first companies, as tailored cloud infrastructure enables significant cost savings when optimizing for narrow use cases. Additionally, the cloud can be configured to prioritize either low latency or low total cost of ownership per token, letting companies trade speed against cost for their models.

Conclusion:

The Chiplet Cloud architecture represents a significant breakthrough in enterprise AI compute. Its performance improvements, cost savings, and flexibility make it an appealing choice for AI workloads. The potential market impact is substantial, with AI-first companies likely to embrace this technology for cost optimization and tailored cloud infrastructure. Furthermore, Microsoft’s involvement in chiplet cloud research positions them strategically to compete with other cloud service providers and gain an advantage in the AI market.