TL;DR:

- Generative AI models could help emergency physicians interpret diagnostic images when no radiologist is available.

- Researchers tested an AI model on 500 chest radiographs and found that its reports were rated on par with those of in-house radiologists for quality and clinical accuracy.

- AI-generated reports could be delivered in real time, aiding triage and flagging critical findings, which could streamline emergency care.

- AI reports prompted more disagreements among raters, but these disagreements mainly concerned non-critical findings, which supports the potential of AI in emergency radiology.

- Teleradiology reports more often required minor wording or style changes and therefore received lower ratings.

- A prospective study is needed to further validate AI’s role in emergency radiology.

Main AI News:

In the high-stakes realm of emergency medicine, time is often of the essence. When patients arrive overnight needing urgent diagnosis and treatment, the absence of an on-site radiologist can pose a serious challenge for physicians. Emergency physicians are usually adept at interpreting diagnostic images themselves, but a previous study found that “rare though significant discrepancies” can occur in their interpretations.

Enter teleradiology services, which aim to bridge the gap when in-house radiologists are unavailable. Generative AI models now offer another option, one that could not only interpret images but also draft detailed reports.

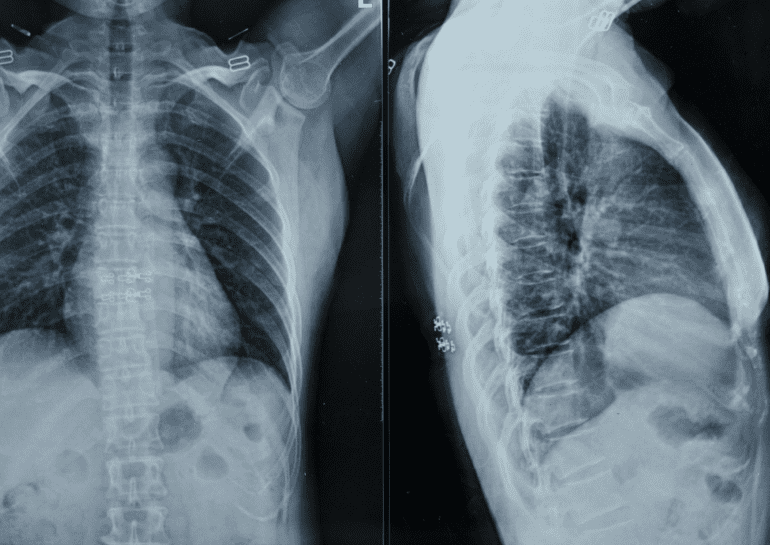

To assess this technology, a group of researchers developed an AI model and tested it on 500 chest radiographs from the Northwestern emergency department. For each radiograph, both a teleradiology report and the final in-house radiologist report were available for comparison. All images came from patients aged 18 to 89 years.

Six board-certified emergency medicine physicians reviewed the images and rated the accompanying reports for quality and clinical accuracy on a five-point scale. The AI reports scored nearly as well as those of the in-house radiologists: 3.22 versus 3.34. Most reports received the top score of five, indicating minimal need for amendments.

The real game-changer, however, may be speed. Because AI reports can be generated within seconds of acquiring a radiograph, they could enable real-time assessment: physicians could be alerted promptly to abnormalities, streamlining triage and flagging critical findings that require immediate intervention. The researchers concluded that the AI model identified clinical abnormalities with accuracy comparable to that of a radiologist.

AI-generated reports did prompt more disagreements among the raters than reports written by in-house radiologists. Crucially, however, these disagreements mostly concerned non-critical findings. Overall, the discrepancies were minor, which supports the potential of AI in emergency radiology.

The teleradiology reports, by contrast, more often needed minor changes in wording or style than either the AI or the in-house radiologist reports, and consequently received significantly lower ratings. As the researchers point out, a prospective study is needed to validate AI's role in this critical domain.

Conclusion:

The emergence of AI in emergency radiology presents an opportunity for faster assessments of quality comparable to radiologist reports, potentially reshaping the market by enhancing diagnostic capabilities and expediting critical patient care. However, further research and validation are needed to fully realize this potential.