- Convergence of 3D generation and reconstruction facilitated by advancements in generative model architectures.

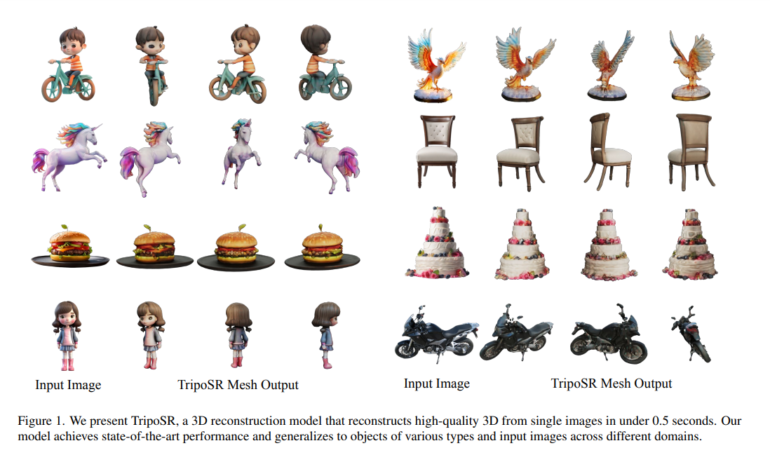

- TripoSR model by Stability AI and Tripo AI can generate 3D feedforward models from a single image in under half a second.

- Utilizes enhancements in data curation, rendering, model design, and training methodologies, expanding upon the LRM architecture.

- Incorporates transformer architecture similar to LRM, employing image encoder, NeRF based on triplanes, and image-to-triplane decoder.

- An image encoder, initialized with DINOv1, is crucial for capturing global and local properties that are essential for 3D reconstruction.

- The approach avoids explicit parameter conditioning, resulting in a robust and adaptable model.

- Two key enhancements in data collection: Data curation from the Objaverse dataset and data rendering techniques.

- Experiments demonstrate TripoSR outperforms competing open-source solutions numerically and qualitatively.

- Availability of pre-trained model, online demo, and source code under MIT license marks significant advancement in AI, CV, and CG fields.

Main AI News:

In the dynamic realm of 3D generative AI, the lines between 3D generation and reconstruction from limited views are increasingly indistinct. This merging is fueled by a string of breakthroughs, including the rise of extensive public 3D datasets and advancements in generative model architectures.

Pioneering research explores utilizing 2D diffusion models to craft 3D objects from input photos or textual cues, mitigating the scarcity of 3D training data. One standout example is DreamFusion, which spearheaded score distillation sampling (SDS) by refining 3D models with a 2D diffusion model. This methodology, leveraging 2D priors for detailed 3D object generation, marks a significant advancement. However, due to substantial computational demands and challenges in managing output models accurately, these approaches often face limitations regarding generation speed.

On the other hand, feedforward 3D reconstruction models offer superior efficiency in computational resources. Recent methodologies in this domain exhibit promising scalability in training across diverse 3D datasets. By enabling swift feedforward inference, these approaches markedly enhance the efficiency and feasibility of 3D model generation while potentially affording greater control over output quality.

A groundbreaking study conducted by Stability AI and Tripo AI introduces the TripoSR model, which is capable of generating 3D feedforward models from a single image in less than half a second utilizing an A100 GPU. The research team incorporates various enhancements to data curation, rendering, model design, and training methodologies, while building upon the foundations laid by the LRM architecture. Employing a transformer architecture akin to LRM, TripoSR employs an image encoder, a neural radiance field (NeRF) based on triplanes, and an image-to-triplane decoder.

The image encoder, initialized with the pre-trained vision transformer model DINOv1, serves as a pivotal component in TripoSR. By converting an RGB image into latent vectors, this encoder captures the global and local properties essential for reconstructing the 3D object.

A critical feature of the proposed approach is the avoidance of explicit parameter conditioning, resulting in a more robust and adaptable model capable of handling diverse real-world scenarios without precise camera data. Key design considerations encompass transformer layer count, triplane size, NeRF model intricacies, and primary training configurations.

Recognizing the paramount importance of data, two enhancements to data collection have been implemented:

- Data Curation: Selecting a subset of the Objaverse dataset distributed under the CC-BY license has enhanced the quality of training data.

- Data Rendering: Employing various data rendering techniques improves the model’s generalizability, even when trained solely on the Objaverse dataset, by better approximating the distribution of real-world photos.

Experiments demonstrate that the TripoSR model surpasses competing open-source solutions both numerically and qualitatively. The availability of the pre-trained model, an online interactive demo, and the source code under the MIT license represent a significant advancement in artificial intelligence (AI), computer vision (CV), and computer graphics (CG). The team anticipates a transformative impact on these fields by providing researchers, developers, and artists with state-of-the-art tools for 3D generative AI.

Conclusion:

The introduction of the TripoSR model represents a significant leap forward in the realm of 3D generative AI. Its ability to swiftly generate high-quality 3D models from single images opens new avenues for industries such as gaming, film production, architecture, and virtual reality. With its superior performance and accessibility through pre-trained models and open-source code, TripoSR is poised to disrupt traditional workflows and empower researchers, developers, and artists with cutting-edge tools for 3D content creation.