TL;DR:

- Three-dimensional (3D) modeling is essential in fields like architecture and engineering.

- Recent advancements in computer vision and machine learning allow for generating 3D models from a single image.

- 3D scene generation involves learning the structure, shape, and appearance of objects.

- Traditional approaches provide limited variations in 3D scenes.

- A novel approach aims to create unique scenes with interdependent geometry and appearance features.

- The exemplar-based paradigm is used to construct richer target models.

- The proposed patch-based algorithm utilizes a multi-scale generative framework and a GPNN module.

- Plenoxels, a grid-based radiance field, are employed for effective scene representation.

- The exemplar pyramid is created through a coarse-to-fine training process of Plenoxels.

- Diverse representations are utilized in the synthesis process within the generative nearest neighbor module.

- Patch matching and blending operate simultaneously to synthesize intermediate scenes.

- An exact-to-approximate patch NNF module manages computational demands.

- The proposed approach enhances the creation of natural and visually captivating 3D scenes.

Main AI News:

Three-dimensional (3D) modeling has emerged as a crucial aspect across various industries, including architecture and engineering. These computer-generated models offer the ability to manipulate, animate, and render objects and environments from multiple perspectives, resulting in a realistic visual representation of the physical world. However, the creation of complex 3D models can be both time-consuming and costly. Thankfully, recent advancements in computer vision and machine learning have paved the way for generating 3D models and scenes from a single input image.

The process of 3D scene generation involves leveraging artificial intelligence algorithms to decipher the underlying structure and geometrical properties of an object or environment based on a single image. Typically, this process unfolds in two stages. First, the object’s shape and structure are extracted, followed by the generation of its texture and appearance.

In recent years, this technology has captured the attention of the research community, sparking numerous discussions and advancements. Traditionally, 3D scene generation involved learning features or characteristics of a scene in two dimensions. Newer techniques instead leverage differentiable rendering, which makes it possible to compute gradients of rendered images with respect to the input geometry parameters.
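To make the idea of differentiable rendering concrete, the following is a minimal sketch, assuming a standard emission-absorption model along a single ray with one scalar color per sample. The function names (`render_ray`, `grad_wrt_density`) and the sample values are hypothetical and are not taken from the work described here. The sketch compares the analytic gradient of a rendered pixel with respect to the densities against a finite-difference estimate.

```python
import numpy as np

def render_ray(sigma, color, delta):
    """Emission-absorption rendering of one ray (scalar color per sample)."""
    alpha = 1.0 - np.exp(-sigma * delta)              # opacity of each sample
    # Transmittance reaching sample i: product of (1 - alpha) over earlier samples.
    trans = np.concatenate(([1.0], np.cumprod(1.0 - alpha)[:-1]))
    weights = trans * alpha
    return float(np.sum(weights * color)), weights, trans

def grad_wrt_density(sigma, color, delta):
    """Analytic derivative of the rendered value with respect to each density."""
    _, weights, trans = render_ray(sigma, color, delta)
    trans_after = trans * np.exp(-sigma * delta)      # transmittance just past sample k
    contrib = weights * color
    # Contributions of the samples strictly behind sample k.
    behind = np.cumsum(contrib[::-1])[::-1] - contrib
    return delta * (trans_after * color - behind)

# Illustrative sample values (assumptions, not data from the article).
sigma = np.array([0.5, 2.0, 1.0, 0.1])
color = np.array([0.2, 0.9, 0.4, 0.7])
delta = 0.25

analytic = grad_wrt_density(sigma, color, delta)

# Finite-difference check of the analytic gradient.
eps = 1e-6
base = render_ray(sigma, color, delta)[0]
numeric = np.zeros_like(sigma)
for k in range(len(sigma)):
    bumped = sigma.copy()
    bumped[k] += eps
    numeric[k] = (render_ray(bumped, color, delta)[0] - base) / eps

print(np.allclose(analytic, numeric, atol=1e-4))
```

Because the rendered value is a smooth function of the densities, an optimizer can follow these gradients to adjust the scene until its renderings match the input images.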

Nevertheless, while these techniques have shown promise, they often produce 3D scenes with limited variation, capturing only minor changes in terrain representations. To overcome this limitation, a novel approach to 3D scene generation has emerged, aiming to create natural scenes whose unique features result from the interdependence between their geometry and appearance. Because these features are so distinctive, it is difficult for a model to learn common characteristics from them, as it could for more typical objects.

In such cases, the exemplar-based paradigm proves useful: a suitable exemplar model is manipulated to construct a richer target model. However, because different exemplar scenes have their own specific characteristics, it is arduous to create ad hoc designs for every scene type. To address this issue, the proposed approach adopts a patch-based algorithm, a family of techniques that predates the era of deep learning.

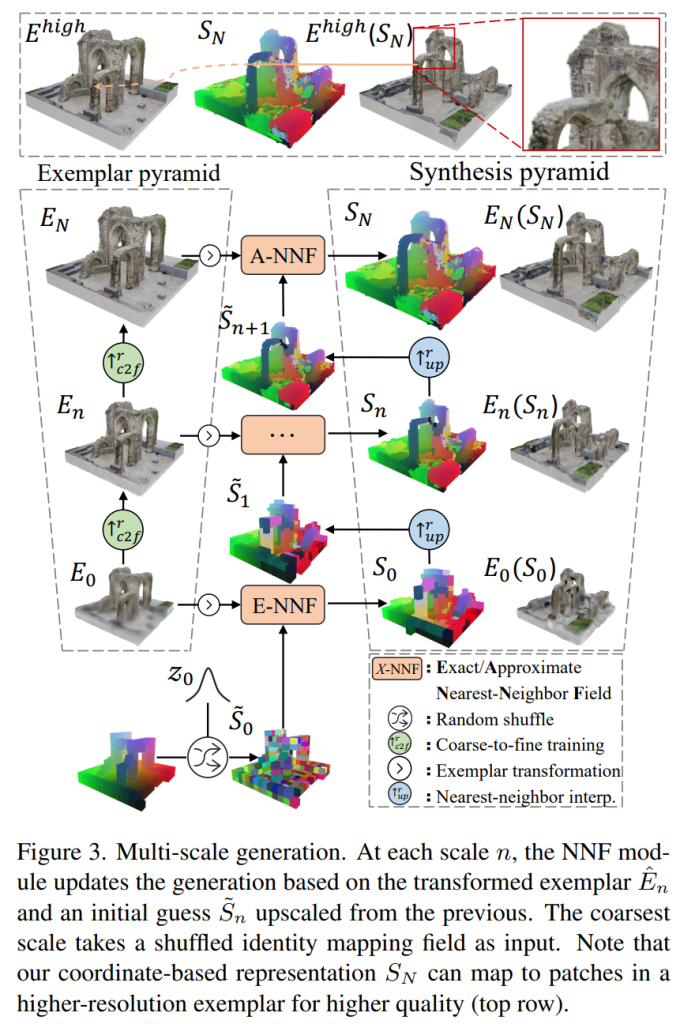

More precisely, this approach embraces a multi-scale generative patch-based framework, incorporating a Generative Patch Nearest-Neighbor (GPNN) module to maximize the bidirectional visual summary between the input and output. To represent the input scene effectively, Plenoxels, a grid-based radiance field renowned for its impressive visual effects, are utilized. While the regular structure and simplicity of Plenoxels benefit patch-based algorithms, several design choices are still needed to make the two work well together.
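As a rough illustration of what a patch nearest-neighbor step on a voxel grid might look like, here is a minimal sketch. It assumes a small dense grid of shape `(D, H, W, C)`, brute-force search, and a score normalization modeled on the published GPNN formulation (each distance is divided by `alpha` plus the best distance any query achieves for that key). The function names, the value of `alpha`, and the grid sizes are assumptions made for illustration, not details given in the article.

```python
import numpy as np

def extract_patches(grid, p):
    """Return all p*p*p patches of a (D, H, W, C) grid as rows of a 2D array."""
    d, h, w, _ = grid.shape
    patches = [
        grid[z:z + p, y:y + p, x:x + p].ravel()
        for z in range(d - p + 1)
        for y in range(h - p + 1)
        for x in range(w - p + 1)
    ]
    return np.stack(patches)

def gpnn_match(queries, keys, alpha=0.01):
    """For each query patch, pick the key patch with the lowest normalized score."""
    # Pairwise mean squared distances, shape (num_queries, num_keys).
    dist = ((queries[:, None, :] - keys[None, :, :]) ** 2).mean(axis=2)
    # Normalize each key column by the best distance any query achieves for it.
    # Keys that no query matches well get their scores lowered, which pushes
    # the output to cover the exemplar (small alpha = stronger effect).
    score = dist / (alpha + dist.min(axis=0, keepdims=True))
    return score.argmin(axis=1)

# Toy data: a random "exemplar" and a slightly perturbed initial guess.
rng = np.random.default_rng(0)
exemplar = rng.random((6, 6, 6, 2))
guess = exemplar + 0.01 * rng.standard_normal(exemplar.shape)

keys = extract_patches(exemplar, 3)
queries = extract_patches(guess, 3)
nn = gpnn_match(queries, keys)
print(keys.shape, nn[:5])
```

In this toy setup each perturbed patch should map back to its own source patch. In a generative setting the initial guess would instead be a noisy or upsampled scene, so the matches rearrange exemplar patches into a new layout.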

Specifically, the exemplar pyramid is constructed by subjecting Plenoxels to a coarse-to-fine training process based on images of the input scene rather than simply downsampling a pre-trained model with high resolution. Additionally, the high-dimensional, unbounded, and noisy features of the Plenoxels-based exemplar at each level are transformed into well-defined and compact geometric and appearance features. This transformation enhances robustness and efficiency in subsequent patch matching.
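The article does not say which transformations turn the raw Plenoxels features into compact ones, so the following sketch uses two stand-ins chosen purely for illustration: an opacity-style squashing that bounds the density, and a PCA projection that shortens the appearance vector. The function names, the `scale` parameter, and the 27-channel appearance size are assumptions.

```python
import numpy as np

def compact_geometry(density, scale=1.0):
    """Squash unbounded non-negative densities into the range [0, 1]."""
    return 1.0 - np.exp(-scale * np.maximum(density, 0.0))

def compact_appearance(features, n_components=3):
    """Project (N, C) appearance features onto their top principal components."""
    centered = features - features.mean(axis=0, keepdims=True)
    # Rows of vt are principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

# Toy inputs standing in for one pyramid level (sizes are placeholders).
rng = np.random.default_rng(0)
density = rng.exponential(scale=50.0, size=(4, 4, 4))
appearance = rng.standard_normal((64, 27))

geom = compact_geometry(density, scale=0.1)
app = compact_appearance(appearance, n_components=3)
print(geom.min(), geom.max(), app.shape)
```

Bounded, low-dimensional features of this kind keep patch distances on a comparable scale and reduce the cost of each comparison, which is the robustness and efficiency benefit the paragraph above describes.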

Moreover, this study incorporates diverse representations within the generative nearest neighbor module to facilitate the synthesis process. Patch matching and blending operate simultaneously at each level, progressively synthesizing an intermediate value-based scene that will ultimately be transformed into a coordinate-based equivalent.
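The difference between a value-based and a coordinate-based scene can be sketched as follows. This is a simplified illustration under stated assumptions: the nearest-neighbor field `nnf` stores, for every output patch origin, the origin of its matched exemplar patch; overlapping patches are blended by plain averaging; and the coordinate map is obtained by averaging and rounding the coordinate votes. That last rule, like the function names, is hypothetical and not taken from the article.

```python
import numpy as np
from itertools import product

def blend_values(exemplar, nnf, p):
    """Value-based synthesis: average the exemplar patches overlapping each voxel."""
    nz, ny, nx, _ = nnf.shape
    shape = (nz + p - 1, ny + p - 1, nx + p - 1)
    acc = np.zeros(shape + (exemplar.shape[-1],))
    count = np.zeros(shape + (1,))
    for z, y, x in product(range(nz), range(ny), range(nx)):
        sz, sy, sx = nnf[z, y, x]
        acc[z:z + p, y:y + p, x:x + p] += exemplar[sz:sz + p, sy:sy + p, sx:sx + p]
        count[z:z + p, y:y + p, x:x + p] += 1
    return acc / count

def to_coordinates(nnf, p):
    """Coordinate-based equivalent: per voxel, the exemplar coordinate it maps to."""
    nz, ny, nx, _ = nnf.shape
    shape = (nz + p - 1, ny + p - 1, nx + p - 1)
    acc = np.zeros(shape + (3,))
    count = np.zeros(shape + (1,))
    r = np.arange(p)
    offsets = np.stack(np.meshgrid(r, r, r, indexing="ij"), axis=-1)
    for z, y, x in product(range(nz), range(ny), range(nx)):
        # Each covering patch votes for "matched origin + offset inside the patch".
        acc[z:z + p, y:y + p, x:x + p] += nnf[z, y, x] + offsets
        count[z:z + p, y:y + p, x:x + p] += 1
    return np.rint(acc / count).astype(int)

# Toy check with an identity field: every patch is matched to itself.
rng = np.random.default_rng(0)
exemplar = rng.random((5, 5, 5, 2))
p = 3
n = 5 - p + 1
o = np.arange(n)
nnf = np.stack(np.meshgrid(o, o, o, indexing="ij"), axis=-1)

value_scene = blend_values(exemplar, nnf, p)
coords = to_coordinates(nnf, p)
coord_scene = exemplar[coords[..., 0], coords[..., 1], coords[..., 2]]
print(np.allclose(value_scene, exemplar), np.array_equal(coord_scene, exemplar))
```

With the identity field both routes reproduce the exemplar. With a non-trivial field, the value-based scene stores blended feature values, while the coordinate-based scene stores only pointers back into the exemplar.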

Finally, it is important to note that patch-based algorithms involving voxels can impose significant computational demands. To address this concern, an exact-to-approximate patch nearest-neighbor field (NNF) module is implemented within the pyramid. This module effectively manages the search space, keeping it within manageable bounds while minimizing compromises on visual summary optimality.
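One way to read "exact-to-approximate" is that the search is exhaustive at coarse pyramid levels, where there are few patches, and restricted at finer levels to a small window around the match propagated from the level below. The sketch below illustrates that reading on a 1D signal for brevity. The 2x scale factor between levels, the window `radius`, and all function names are assumptions rather than details from the article.

```python
import numpy as np

def patches_1d(signal, p):
    """All length-p windows of a 1D signal, one per row."""
    return np.stack([signal[i:i + p] for i in range(len(signal) - p + 1)])

def exact_nnf(queries, keys):
    """Exhaustive search: compare every query patch with every key patch."""
    dist = ((queries[:, None, :] - keys[None, :, :]) ** 2).sum(axis=2)
    return dist.argmin(axis=1)

def approximate_nnf(queries, keys, coarse_nnf, radius=2):
    """Local search around the match propagated from the coarser level."""
    nnf = np.empty(len(queries), dtype=int)
    for i, q in enumerate(queries):
        c = min(i // 2, len(coarse_nnf) - 1)          # parent patch at the coarse level
        center = 2 * coarse_nnf[c] + (i - 2 * c)      # upsampled guess, keeping the offset
        center = min(center, len(keys) - 1)
        lo = max(center - radius, 0)
        hi = min(center + radius, len(keys) - 1)
        cand = np.arange(lo, hi + 1)                  # at most 2 * radius + 1 candidates
        dist = ((keys[cand] - q) ** 2).sum(axis=1)
        nnf[i] = cand[dist.argmin()]
    return nnf

# Two-level toy pyramid: the coarse level averages neighboring samples.
rng = np.random.default_rng(0)
fine = rng.random(32)
coarse = fine.reshape(-1, 2).mean(axis=1)
p = 3

coarse_patches = patches_1d(coarse, p)
coarse_nnf = exact_nnf(coarse_patches, coarse_patches)

fine_patches = patches_1d(fine, p)
fine_nnf = approximate_nnf(fine_patches, fine_patches, coarse_nnf, radius=2)
print(fine_nnf[:8])
```

The exhaustive search compares every query with every key, while the local search compares each query with at most `2 * radius + 1` candidates. On 3D voxel grids that gap grows quickly, which is why bounding the search space matters.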

By combining artificial intelligence, generative patch-based frameworks, and Plenoxels, the proposed approach to 3D scene generation pushes the boundaries of what can be achieved in creating natural and visually captivating scenes. With its emphasis on interdependent geometry and appearance features, this technique holds tremendous promise.

Source: Marktechpost Media Inc.

Conclusion:

The advancements in 3D modeling and scene generation, driven by computer vision and machine learning, have significant implications for the market. These technologies offer tremendous opportunities for industries such as architecture and engineering, enabling more efficient and cost-effective creation of complex 3D models. The ability to generate realistic visual representations from single input images opens doors for enhanced visualization, design, and decision-making processes.

Moreover, the emergence of novel approaches that incorporate interdependent geometry and appearance features paves the way for creating unique and visually captivating scenes. Businesses operating in these industries can leverage these advancements to streamline their workflows, improve client presentations, and drive innovation. It is crucial for companies to stay updated with these technological developments and explore their potential applications to gain a competitive edge in the market.