TL;DR:

- MIT’s Liquid Neural Networks (LNNs) offer an efficient solution to AI challenges in robotics and self-driving cars.

- LNNs are compact, adaptable, and utilize dynamically adjustable differential equations.

- Their unique wiring architecture enables continuous-time learning and lets them adapt their behavior dynamically.

- LNNs require fewer neurons, which makes them suitable for small computing devices and easier to interpret.

- They are better at capturing causal relationships, which helps them generalize to unseen situations.

- LNNs are ideal for handling continuous data streams, making them valuable in safety-critical applications.

- Market implications include enhanced AI capabilities in robotics, autonomous vehicles, and other safety-critical industries.

Main AI News:

MIT’s Liquid Neural Networks (LNNs) are emerging as a potentially transformative approach in artificial intelligence (AI). While much of the field’s attention has gone to large language models (LLMs), not every application can accommodate the computational demands of these massive deep learning models. That gap has spurred research in other directions, including Liquid Neural Networks, developed by researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL).

The idea behind Liquid Neural Networks was to create compact, adaptable AI architectures that address the challenges traditional deep learning models face, especially in robotics and self-driving cars. Daniela Rus, director of MIT CSAIL, explained the motivation: “On a robot, you cannot really run a large language model because there isn’t the computation power and storage space for that.”

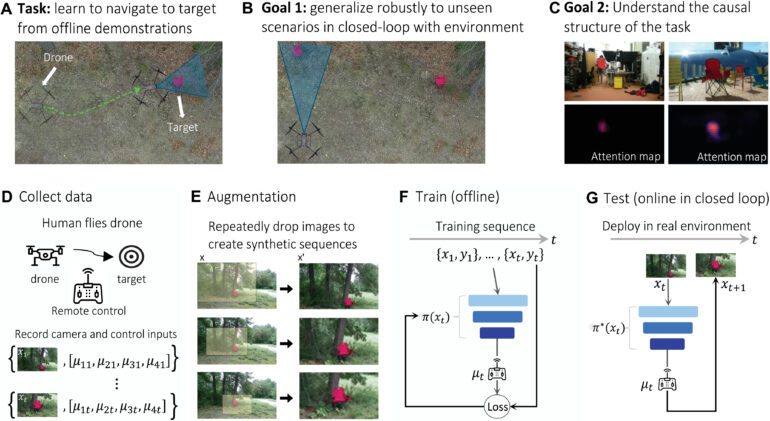

The researchers drew inspiration from the biological neurons of small organisms such as the C. elegans worm, which performs complex tasks with only 302 neurons. LNNs differ significantly from conventional deep learning models: their mathematical formulation is less computationally expensive, and it keeps neuron training stable. The key to their efficiency lies in dynamically adjustable differential equations, which let them adapt to new situations after training, a capability typical neural networks lack.
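For readers who want the math, here is one published form of such an equation: the liquid time-constant (LTC) neuron model from CSAIL researchers. Treat this as an assumption, since the article does not spell out which equations it means. Here $x(t)$ is a neuron’s state, $I(t)$ its input, $f$ a small bounded network (for example a sigmoid layer) with parameters $\theta$, $\tau$ a fixed time constant, and $A$ a learned bias that the state is pulled toward:

$$
\frac{dx(t)}{dt} = -\left[\frac{1}{\tau} + f\big(x(t), I(t), t, \theta\big)\right] x(t) + f\big(x(t), I(t), t, \theta\big)\, A
$$

The bracketed decay rate gives the neuron an effective, input-dependent time constant $\tau_{\text{sys}}$, and a fused semi-implicit Euler step of size $\Delta t$ gives a cheap update. For $f \ge 0$, $A \ge 0$ and $\tau > 0$, that update keeps a state that starts in $[0, A]$ inside that range:

$$
\tau_{\text{sys}} = \frac{\tau}{1 + \tau\, f\big(x(t), I(t), t, \theta\big)},
\qquad
x(t + \Delta t) = \frac{x(t) + \Delta t\, f\big(x(t), I(t), t, \theta\big)\, A}{1 + \Delta t \left[\frac{1}{\tau} + f\big(x(t), I(t), t, \theta\big)\right]}
$$

Because $f$ depends on the incoming data, $\tau_{\text{sys}}$ keeps changing after training. This is one way to read the claim that these networks keep adapting once deployed.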

LNNs also use a distinctive wiring architecture that allows lateral and recurrent connections within the same layer. Together, these equations and this wiring let Liquid Neural Networks learn continuous-time models and adjust their behavior dynamically.
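To make the wiring idea concrete, here is a minimal plain-Python sketch of one layer whose neurons receive sparse input synapses plus lateral (neighbor-to-neighbor) and recurrent (self-loop) synapses from the same layer. It assumes a liquid time-constant (LTC) style neuron update, which the article does not name explicitly. The class name `SparseLTCLayer`, the random masks and weights, and all parameter values are hypothetical and do not reproduce the specific circuit used in MIT’s experiments.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sparse_mask(rows: int, cols: int, density: float, rng: random.Random) -> list[list[int]]:
    """Random 0/1 mask: 1 keeps a synapse, 0 prunes it."""
    return [[1 if rng.random() < density else 0 for _ in range(cols)] for _ in range(rows)]


class SparseLTCLayer:
    """Hypothetical sparse layer of LTC-style neurons.

    rec_mask[i][k] == 1 means neuron k of this same layer feeds neuron i:
    k == i is a recurrent self-loop, k != i is a lateral connection.
    """

    def __init__(self, n_in: int, n_neurons: int, density: float = 0.5,
                 tau: float = 1.0, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.n = n_neurons
        self.tau = tau
        self.in_mask = sparse_mask(n_neurons, n_in, density, rng)
        self.rec_mask = sparse_mask(n_neurons, n_neurons, density, rng)
        self.w_in = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_neurons)]
        self.w_rec = [[rng.gauss(0.0, 1.0) for _ in range(n_neurons)] for _ in range(n_neurons)]
        self.bias = [0.0] * n_neurons
        self.target = [1.0] * n_neurons  # value each neuron is pulled toward when f is large

    def step(self, x: list[float], inp: list[float], dt: float) -> list[float]:
        """Advance all neurons by dt with a fused semi-implicit Euler step.

        Every neuron reads the old state x, so the update is synchronous.
        """
        new_x = []
        for i in range(self.n):
            z = self.bias[i]
            z += sum(m * w * u for m, w, u in zip(self.in_mask[i], self.w_in[i], inp))
            z += sum(m * w * xk for m, w, xk in zip(self.rec_mask[i], self.w_rec[i], x))
            f = sigmoid(z)  # input- and state-dependent gate in (0, 1)
            new_x.append((x[i] + dt * f * self.target[i]) / (1.0 + dt * (1.0 / self.tau + f)))
        return new_x

    def num_synapses(self) -> int:
        """Count the synapses that survive the sparsity masks."""
        return sum(map(sum, self.in_mask)) + sum(map(sum, self.rec_mask))


# Usage: drive an 8-neuron layer with a 3-channel toy signal for 50 steps.
layer = SparseLTCLayer(n_in=3, n_neurons=8, density=0.4, seed=42)
state = [0.0] * 8
for t in range(50):
    state = layer.step(state, [math.sin(0.1 * t), 0.5, -0.2], dt=0.1)
print(len(state), layer.num_synapses())
```

Keeping the masks explicit makes the sparsity, and therefore the small synapse count, easy to inspect. Training (not shown) would fit the weights, for example by backpropagation through time over the ODE steps.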

One of the standout features of LNNs is their compactness. For instance, a traditional deep neural network needs around 100,000 artificial neurons and half a million parameters to perform a task such as keeping a car in its lane, whereas an LNN can do the same with just 19 neurons. This smaller size has several advantages. It makes LNNs feasible to run on the small computers found in robots and edge devices, and with so few neurons the network is far easier to interpret, which addresses a crucial challenge in AI.

LNNs are also better at capturing causal relationships, whereas traditional deep learning systems often pick up spurious patterns that are unrelated to the task. This stronger grasp of cause and effect allows Liquid Neural Networks to generalize more effectively to unseen situations. In comparative object-detection tests on video frames recorded in different settings, LNNs maintained high accuracy across environments, while the accuracy of other neural networks dropped because they relied on analyzing the surrounding context.

Attention maps extracted from the models further showed that LNNs concentrate on the main target of the task, which helps them cope with changes in context. Other models tend to spread their attention across irrelevant parts of the input, which hurts their adaptability.

Liquid Neural Networks are especially good at handling continuous data streams, such as video, audio, or sequences of temperature measurements. These properties make them well suited to computationally constrained, safety-critical applications in robotics and autonomous vehicles, which depend on continuous data feeds.
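One reason an ODE-based neuron suits such streams is that the time step is an explicit input: readings that arrive at irregular intervals can be fed in with their actual time gaps instead of being resampled onto a fixed grid. The minimal sketch below illustrates this with a single neuron. It assumes a fused liquid time-constant (LTC) style update, since the article does not give the exact equations, and the function names `ltc_update` and `run_stream`, the temperature log, the normalization, and all parameter values are hypothetical.

```python
import math


def ltc_update(x: float, u: float, dt: float, tau: float = 2.0,
               w_in: float = 1.5, w_rec: float = -0.5, bias: float = 0.0,
               target: float = 1.0) -> float:
    """Fused semi-implicit Euler step of one LTC-style neuron over a gap of dt seconds."""
    f = 1.0 / (1.0 + math.exp(-(w_in * u + w_rec * x + bias)))  # sigmoid gate
    return (x + dt * f * target) / (1.0 + dt * (1.0 / tau + f))


def run_stream(readings: list[tuple[float, float]], x0: float = 0.0) -> list[float]:
    """Feed (timestamp, value) pairs, using each real time gap as dt.

    The first reading only sets the clock (dt = 0), so it leaves x0 unchanged.
    """
    states = []
    x = x0
    prev_t = readings[0][0] if readings else 0.0
    for t, value in readings:
        dt = t - prev_t  # irregular gaps are used as-is, no resampling
        u = (value - 20.0) / 5.0  # hypothetical normalization around 20 degrees C
        x = ltc_update(x, u, dt)
        states.append(x)
        prev_t = t
    return states


# Hypothetical temperature log: (seconds, degrees C) with uneven spacing.
log = [(0.0, 19.5), (0.4, 20.1), (0.5, 20.3), (3.0, 23.8), (3.1, 24.0), (9.0, 21.2)]
states = run_stream(log)
print([round(s, 3) for s in states])
```

A fixed-step recurrent network would typically need the data resampled or the gap supplied as an extra feature. Here the gap enters the update directly, and a longer gap moves the state further toward its input-driven equilibrium.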

MIT CSAIL’s team has already tested LNNs successfully in single-robot settings and plans to explore their potential further in multi-robot systems and with more diverse datasets. The advent of Liquid Neural Networks promises to open new frontiers in AI and to transform fields like robotics and self-driving cars with efficient, adaptive solutions to real-world challenges.

Conclusion:

MIT’s Liquid Neural Networks have the potential to reshape the AI landscape, especially in the robotics and self-driving car sectors. Their compactness, adaptability, and ability to capture causal relationships give them distinct advantages, enabling efficient and interpretable AI solutions. As LNNs are tested and deployed in more applications, businesses in the AI market should follow these developments closely and consider integrating LNNs into their products and services. Early adopters of LNN-based AI systems stand to gain a competitive edge by offering advanced, reliable solutions in safety-critical domains. The emergence of LNNs marks a significant step toward overcoming the limitations of traditional deep learning models and opening new possibilities in AI-driven industries.