TL;DR:

- Researchers introduce an “easy-to-hard” generalization approach for training AI language models.

- Conventional hard data training is costly and prone to errors, hindering model performance.

- Models trained on easy data and then tested on hard data perform surprisingly well.

- Models trained on easy data close as much as 70-100% of the performance gap to models trained on hard data.

- The scalable oversight problem becomes more manageable with this approach.

Main AI News:

Language models are used across many industries for tasks ranging from basic text generation to complex problem-solving. A major hurdle, however, is training these models to perform well on ‘hard’ data, which is typically specialized and complex. A model’s accuracy and reliability on such data depend heavily on the quality of its training, and that quality suffers because hard data is difficult to annotate precisely.

Traditionally, teaching language models to handle hard data has meant training them directly on that data. While this approach seems straightforward, labeling hard data carefully is expensive and time-consuming, which increases the likelihood of noise and errors entering the training process. The result is often suboptimal model performance.

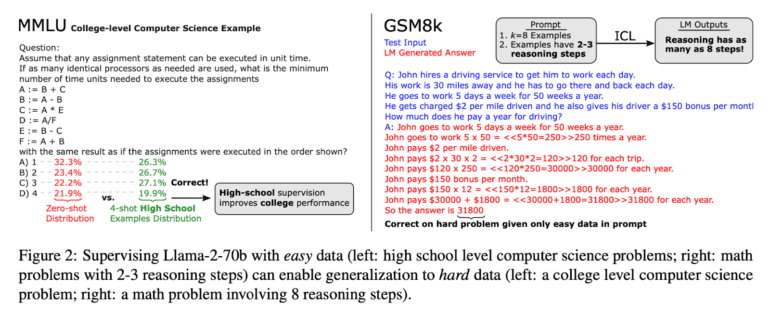

Enter a new paradigm known as ‘easy-to-hard’ generalization, recently introduced by researchers at the Allen Institute for AI and UNC Chapel Hill. The approach trains language models on ‘easy’ data, which is simple and inexpensive to label accurately, and then evaluates the models on hard data. The underlying idea is that a model proficient on easy data can carry that ability over to more difficult cases. This departs from the conventional route of training directly on hard data in favor of building a strong foundation from easier data.

Easy-to-hard generalization relies on lightweight training techniques such as in-context learning, linear classifier heads, and QLoRA. These methods use data that is easy to annotate, such as elementary-level science questions, to give the model a solid foundation. That knowledge can then be applied to harder domains, such as college-level STEM questions or advanced trivia.
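The train-on-easy, test-on-hard protocol can be illustrated with a toy linear classifier head. This is only a minimal sketch under loud assumptions: the 2-D features are synthetic stand-ins for frozen model representations, and "difficulty" is a hypothetical proxy (distance from a made-up decision boundary), not the way the researchers measured hardness. All function names are invented for illustration.

```python
import math
import random


def make_example(rng):
    """Draw a synthetic 2-D feature vector; the true label is 1 when x0 + x1 > 0."""
    x = (rng.uniform(-2.0, 2.0), rng.uniform(-2.0, 2.0))
    return x, 1 if x[0] + x[1] > 0 else 0


def split_by_difficulty(n, rng, margin=1.0):
    """Hypothetical difficulty proxy: points far from the boundary count as 'easy'."""
    easy, hard = [], []
    while len(easy) < n or len(hard) < n:
        x, y = make_example(rng)
        dist = abs(x[0] + x[1])
        if dist >= margin and len(easy) < n:
            easy.append((x, y))
        elif dist < margin and len(hard) < n:
            hard.append((x, y))
    return easy, hard


def train_linear_head(data, epochs=200, lr=0.5):
    """Fit a bias-free logistic-regression head with batch gradient descent."""
    w = [0.0, 0.0]
    for _ in range(epochs):
        grad = [0.0, 0.0]
        for x, y in data:
            p = 1.0 / (1.0 + math.exp(-(w[0] * x[0] + w[1] * x[1])))
            grad[0] += (p - y) * x[0]
            grad[1] += (p - y) * x[1]
        w[0] -= lr * grad[0] / len(data)
        w[1] -= lr * grad[1] / len(data)
    return w


def accuracy(w, data):
    """Fraction of examples whose predicted label matches the true label."""
    correct = sum(
        1 for x, y in data if (1 if w[0] * x[0] + w[1] * x[1] > 0 else 0) == y
    )
    return correct / len(data)


rng = random.Random(0)
easy_train, hard_test = split_by_difficulty(200, rng)
head = train_linear_head(easy_train)   # trained on easy examples only
hard_acc = accuracy(head, hard_test)   # evaluated on hard examples only
print(f"accuracy on hard test set: {hard_acc:.2f}")
```

The point of the sketch is the data split, not the model: the head never sees a hard example during training, yet it is scored only on hard examples.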

Empirical results show that models trained via easy-to-hard generalization perform remarkably well on hard test data, often on par with models trained directly on hard data. This surprising result suggests that the scalable oversight problem, the challenge of assessing the accuracy of a model’s outputs, may be more tractable than previously presumed. In practical terms, models trained on easy data closed as much as 70-100% of the performance gap to models trained explicitly on hard data.
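The article does not spell out how the "performance gap" is measured. One plausible reading, assumed here, is that it is the share of the improvement over an untrained baseline that easy-data training achieves, relative to what hard-data training achieves. The function name and the accuracy figures below are hypothetical and chosen only to make the arithmetic concrete.

```python
def gap_recovered(easy_acc, hard_acc, baseline_acc):
    """Share of the baseline-to-hard-trained gap closed by the easy-trained model.

    Assumed definition (not taken from the article):
        (easy_acc - baseline_acc) / (hard_acc - baseline_acc)
    """
    gap = hard_acc - baseline_acc
    if gap <= 0:
        raise ValueError("hard-trained accuracy must exceed the baseline")
    return (easy_acc - baseline_acc) / gap


# Hypothetical accuracies on the same hard test set.
baseline = 0.50      # model with no task-specific training
easy_trained = 0.68  # trained on easy data only
hard_trained = 0.70  # trained on hard data

share = gap_recovered(easy_trained, hard_trained, baseline)
print(f"gap recovered: {share:.0%}")
```

Under this reading, a value near 100% means easy-data training does about as well as hard-data training, while a value near 0% means it adds little over the baseline.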

Easy-to-hard generalization offers an efficient way to address the scalable oversight problem. By training on readily available, accurately labeled easy data, the approach reduces the time and cost of training and sidesteps the noise and inaccuracies that often plague hard data. That these models handle hard data well after training only on easy data underscores the resilience and adaptability of contemporary language models.

Conclusion:

The “easy-to-hard” generalization approach offers a promising option for industries that rely on language models. It reduces the cost, time, and labeling errors associated with hard data training. As a result, businesses may be able to achieve performance close to that of hard-data training at lower cost, along with a more manageable scalable oversight problem, improving the efficiency and reliability of AI applications in the market.