TL;DR:

- Stable Diffusion has revolutionized content generation by producing high-fidelity RGB images from text prompts.

- A research study introduces LDM3D, a Latent Diffusion Model for 3D, which generates both image and depth map data from text prompts.

- LDM3D allows the creation of full RGBD representations, providing immersive 360° perspectives.

- The model was fine-tuned on a dataset of approximately 4 million tuples, each combining an RGB image, a depth map, and a caption.

- DepthFusion, an application built on top of LDM3D, uses RGBD photos to create interactive and immersive 360° projections.

- DepthFusion showcases the potential of LDM3D in various sectors, including gaming, entertainment, design, and architecture.

- The study presents three contributions: introducing LDM3D, creating DepthFusion, and evaluating the generated RGBD images and immersive 360° videos.

- LDM3D and DepthFusion have the potential to transform how people interact with digital content.

- These advancements open up new possibilities for generative AI and computer vision research.

Main AI News:

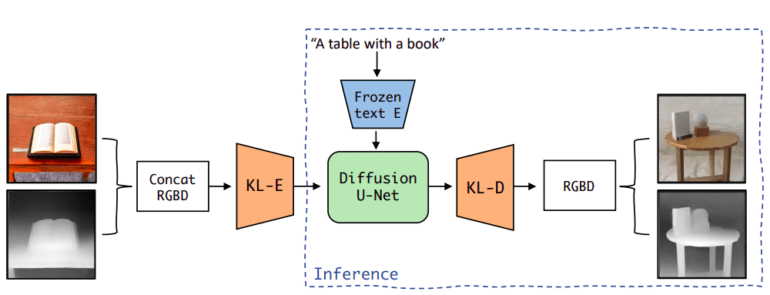

In the rapidly advancing field of generative AI, computer vision has reached new frontiers. Stable Diffusion v1.4, a prominent text-to-image model, generates high-fidelity RGB images from text prompts. Now, a new research study presents the Latent Diffusion Model for 3D (LDM3D), which builds on Stable Diffusion to produce both an image and its depth map from a given text prompt. As Figure 1 illustrates, LDM3D makes it possible to create full RGBD representations that immerse users in 360° perspectives.

The LDM3D model was fine-tuned on a dataset of approximately 4 million tuples, each consisting of an RGB image, a depth map, and a caption. The researchers built this dataset from a subset of LAION-400M, a collection of over 400 million image-caption pairs. Because LAION provides no depth information, they used the DPT-Large depth estimation model to produce a per-pixel depth estimate for each image. These depth maps are what make realistic, immersive 360° views possible.
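As a rough illustration of what one of these tuples might look like in code, the sketch below pairs an RGB image with a per-pixel depth map and a caption, min-max normalizes the depth into [0, 1], and stacks it as a fourth channel next to the scaled RGB values. The `RGBDSample`, `normalize_depth`, and `make_rgbd` names are hypothetical, the depth values merely stand in for what a monocular estimator such as DPT-Large would output, and the normalization and channel stacking are assumptions for illustration, not the paper's documented preprocessing.

```python
from dataclasses import dataclass
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit R, G, B values


@dataclass
class RGBDSample:
    """Hypothetical training tuple: RGB image, depth map, and caption."""
    rgb: List[List[Pixel]]    # H x W grid of RGB pixels
    depth: List[List[float]]  # H x W grid of raw depth estimates
    caption: str


def normalize_depth(depth: List[List[float]]) -> List[List[float]]:
    """Min-max scale a depth map into [0, 1] (assumed preprocessing step)."""
    flat = [d for row in depth for d in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a perfectly flat map
    return [[(d - lo) / span for d in row] for row in depth]


def make_rgbd(sample: RGBDSample) -> List[List[Tuple[float, float, float, float]]]:
    """Stack RGB (scaled to [0, 1]) and normalized depth into 4-channel pixels."""
    if len(sample.rgb) != len(sample.depth) or any(
        len(r) != len(d) for r, d in zip(sample.rgb, sample.depth)
    ):
        raise ValueError("RGB image and depth map must have the same shape")
    depth01 = normalize_depth(sample.depth)
    return [
        [(r / 255, g / 255, b / 255, d) for (r, g, b), d in zip(rgb_row, d_row)]
        for rgb_row, d_row in zip(sample.rgb, depth01)
    ]


# Usage: a 1 x 2 image whose second pixel is twice as far away as the first.
sample = RGBDSample(
    rgb=[[(255, 0, 0), (0, 255, 0)]],
    depth=[[2.0, 4.0]],
    caption="a red pixel next to a green pixel",
)
print(make_rgbd(sample))
# [[(1.0, 0.0, 0.0, 0.0), (0.0, 1.0, 0.0, 1.0)]]
```

Keeping the caption alongside the image and depth in one record mirrors the (image, depth, description) tuple structure described above; a real pipeline would use array libraries rather than nested lists.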

Building on LDM3D, researchers from Intel Labs and Blockade Labs developed DepthFusion, an application that combines 2D RGB images and their depth maps to create 360° projections in TouchDesigner. DepthFusion demonstrates LDM3D's capabilities in practice and could reshape how people engage with digital content. By providing interactive and immersive multimedia experiences, it opens new possibilities for sectors such as gaming, entertainment, design, and architecture.
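To make the step from an RGB image plus depth map to a 360° view more concrete, the sketch below shows one common way to turn an equirectangular panorama's depth map into a 3D point cloud: each pixel's column and row are mapped to a longitude and latitude on a sphere, and the resulting unit direction is scaled by that pixel's depth. This is a generic technique and an assumption on our part; the article does not describe DepthFusion's internals, which are built in TouchDesigner rather than Python, and the `pixel_to_direction` and `unproject` names are hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def pixel_to_direction(u: float, v: float, width: int, height: int) -> Vec3:
    """Map an equirectangular pixel centre to a unit direction (y points up)."""
    lon = ((u + 0.5) / width) * 2 * math.pi - math.pi   # longitude in [-pi, pi)
    lat = math.pi / 2 - ((v + 0.5) / height) * math.pi  # latitude in (-pi/2, pi/2)
    return (
        math.cos(lat) * math.sin(lon),
        math.sin(lat),
        math.cos(lat) * math.cos(lon),
    )


def unproject(depth: List[List[float]]) -> List[Vec3]:
    """Scale each pixel's viewing direction by its depth to get 3D points."""
    height, width = len(depth), len(depth[0])
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            x, y, z = pixel_to_direction(u, v, width, height)
            points.append((x * d, y * d, z * d))
    return points


# Usage: a tiny 2 x 4 panorama at constant depth 3.0; every point should
# land exactly 3.0 units from the viewer at the origin.
pts = unproject([[3.0] * 4, [3.0] * 4])
print(all(abs(math.dist(p, (0.0, 0.0, 0.0)) - 3.0) < 1e-9 for p in pts))  # True
```

Because each direction vector has unit length, a pixel's distance from the viewer equals its depth value, so rendering these points (or displacing a sphere mesh the same way) gives the parallax that makes a 360° scene feel three-dimensional.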

The study's contributions are threefold: (1) LDM3D, a novel diffusion model that generates RGBD images from text prompts; (2) DepthFusion, an application that uses the RGBD images produced by LDM3D to deliver immersive, interactive 360°-view experiences built with TouchDesigner; and (3) comprehensive studies evaluating the quality of the generated RGBD images and the resulting immersive 360° videos.

The implications of this study could be far-reaching, potentially transforming how people interact with digital content across a wide range of industries. From entertainment and gaming to architecture and design, LDM3D's capabilities open new opportunities for generative AI and computer vision research. As the field continues to evolve, the researchers look forward to further developments and encourage the community to build on their findings.

Conclusion:

The introduction of LDM3D and applications built on it, such as DepthFusion, presents significant market opportunities. The ability to generate both high-fidelity RGB images and their depth maps from text prompts opens new avenues for content creation, particularly in gaming, entertainment, design, and architecture.

This technology has the potential to change the way people interact with digital content by providing immersive and interactive experiences. The advances in generative AI and computer vision showcased by LDM3D and DepthFusion could drive innovation and reshape the market, offering businesses new ways to engage their audiences and deliver compelling experiences.