TL;DR:

- A study from Meta GenAI presents a quantization strategy for Latent Diffusion Models (LDMs).

- The strategy combines global and local quantization techniques, utilizing Signal-to-Quantization Noise Ratio (SQNR) as a key metric.

- LDMs, which generate data through an iterative, time-step-dependent denoising process, face deployment challenges on edge devices due to their parameter count.

- The research introduces the concept of relative quantization noise and offers efficient solutions at both global and local levels.

- Performance evaluation using FID and SQNR metrics on text-to-image generation demonstrates the strategy’s effectiveness.

- The study highlights the need for more efficient systems tailored to LDMs in the market.

Main AI News:

Deploying complex models such as Latent Diffusion Models (LDMs) on resource-constrained edge devices is difficult. These models generate data through an iterative denoising process whose behavior varies across time steps, and their size strains the memory and compute budgets of edge hardware. In response, a study from Meta GenAI introduces a quantization strategy designed for LDMs.

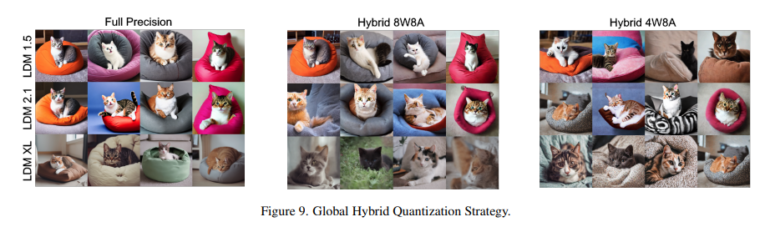

Meta GenAI’s researchers propose a quantization approach that addresses the difficulties post-training quantization (PTQ) runs into with LDMs. The method combines global and local quantization strategies and uses the Signal-to-Quantization Noise Ratio (SQNR) as its key metric. It focuses on relative quantization noise to identify sensitive blocks within an LDM. Global quantization then adjusts precision for the identified blocks, while local treatments target the specific problems found in quantization-sensitive and time-sensitive modules.
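To make the metric concrete, here is a minimal sketch of how SQNR can be computed for a tensor before and after quantization. It uses the standard textbook definition (signal power divided by quantization-noise power, in decibels) together with a simple symmetric uniform quantizer. The function names `fake_quantize` and `sqnr_db`, the quantizer design, and the synthetic data are assumptions made for illustration and are not taken from the study.

```python
import math
import random


def fake_quantize(values, num_bits=8):
    """Symmetric uniform quantization followed by dequantization.

    Illustrative quantizer only; the study's actual scheme is not
    described in this article.
    """
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level, clamp, then map back to floats.
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]


def sqnr_db(reference, quantized):
    """SQNR in decibels: 10 * log10(signal power / noise power)."""
    signal = sum(r * r for r in reference)
    noise = sum((r - q) ** 2 for r, q in zip(reference, quantized))
    if noise == 0:
        return math.inf
    if signal == 0:
        return -math.inf
    return 10.0 * math.log10(signal / noise)


# Synthetic stand-in for a layer's activations (hypothetical data).
random.seed(0)
activations = [random.gauss(0.0, 1.0) for _ in range(5000)]

sqnr_8bit = sqnr_db(activations, fake_quantize(activations, num_bits=8))
sqnr_4bit = sqnr_db(activations, fake_quantize(activations, num_bits=4))
print(f"8-bit SQNR: {sqnr_8bit:.1f} dB, 4-bit SQNR: {sqnr_4bit:.1f} dB")
```

A higher SQNR means the quantized output stays closer to the full-precision one, so on this kind of data the 8-bit setting should score noticeably higher than the 4-bit one.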

LDMs have long been hard to deploy on resource-constrained edge devices, primarily because of their substantial parameter count. Conventional PTQ, a model compression method that quantizes a trained model without retraining it, struggles with the temporal and structural complexity of LDMs. The study therefore argues for a quantization strategy built around SQNR as the evaluation signal, with the aim of providing effective quantization solutions for LDMs at both the global and the local level.

The researchers analyze LDM quantization in detail and introduce a strategy for pinpointing sensitive blocks within the models. Performance is evaluated on conditional text-to-image generation using the MS-COCO validation dataset, with FID and SQNR as metrics, and the results indicate that the proposed procedures are effective. Ablations on LDM 1.5 in the 8W8A setting (8-bit weights and 8-bit activations) examine the suggested techniques further.
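The article does not spell out how sensitive blocks are selected, so the following is only a hedged sketch of one plausible SQNR-based check: quantize each block's output, score it by SQNR, and flag the blocks that fall below a threshold as candidates for higher precision. The block names, the 30 dB threshold, the quantizer, and the synthetic data are all hypothetical and are not drawn from the study.

```python
import math
import random


def fake_quantize(values, num_bits=8):
    """Symmetric uniform quantize-dequantize (illustrative only)."""
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]


def sqnr_db(reference, quantized):
    """SQNR in decibels: 10 * log10(signal power / noise power)."""
    signal = sum(r * r for r in reference)
    noise = sum((r - q) ** 2 for r, q in zip(reference, quantized))
    if noise == 0:
        return math.inf
    if signal == 0:
        return -math.inf
    return 10.0 * math.log10(signal / noise)


def find_sensitive_blocks(block_outputs, num_bits=8, threshold_db=30.0):
    """Score each block by SQNR and list those below the threshold.

    threshold_db is a hypothetical cut-off chosen for this example.
    """
    scores = {
        name: sqnr_db(out, fake_quantize(out, num_bits))
        for name, out in block_outputs.items()
    }
    # Most sensitive (lowest SQNR) first.
    sensitive = sorted(
        (name for name, score in scores.items() if score < threshold_db),
        key=scores.get,
    )
    return scores, sensitive


# Synthetic block outputs: one well-behaved, one with a large outlier
# that stretches the quantization range and wastes resolution.
random.seed(0)
block_outputs = {
    "well_behaved_block": [random.gauss(0.0, 1.0) for _ in range(2000)],
    "block_with_outlier": [random.gauss(0.0, 1.0) for _ in range(2000)] + [100.0],
}

scores, sensitive = find_sensitive_blocks(block_outputs)
for name, score in scores.items():
    print(f"{name}: {score:.1f} dB")
print("flagged as sensitive:", sensitive)
```

In a real pipeline the scores would come from comparing full-precision and quantized activations on calibration prompts, and the flagged blocks would be the ones considered for a higher-precision setting.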

Conclusion:

Meta GenAI’s quantization strategy for Latent Diffusion Models (LDMs) is a meaningful step for edge computing. It addresses the difficulty of deploying LDMs on resource-constrained devices by combining global and local quantization techniques guided by SQNR. The market can expect growing demand for strategies of this kind as LDMs are integrated into edge devices.