TL;DR:

- Robotic learning is undergoing a transformative phase, inspired by the adaptable learning patterns of human toddlers.

- Recent breakthroughs include VRB (Vision-Robotics Bridge), which applies insights drawn from YouTube videos, and RT-2 (Robotic Transformer 2), which abstracts away the fine details of task execution.

- The research draws parallels between robotic AI agents and early-stage human learning, categorizing it into active and passive learning modes.

- RoboAgent merges active and passive learning, mirroring human cognition by observing tasks online and learning through remote control of the robot.

- The open-source dataset supports versatile robotics hardware, fostering a repository of diverse skills and data.

- The trajectory promises multipurpose robotic systems moving towards general-purpose robots, albeit with implementation challenges.

Main AI News:

The realm of robotic learning is currently undergoing a remarkable evolution, akin to the captivating development phases of human toddlers. Over the decades, organizations have meticulously curated complex datasets and explored diverse methodologies to impart new skills to robotic systems. The field now appears poised for advances toward systems that can adapt and learn in real time.

Recent times have witnessed an influx of captivating research studies. Notably, the Vision-Robotics Bridge (VRB), showcased by Carnegie Mellon University in June, has garnered significant attention. This cutting-edge system adeptly transfers insights gleaned from YouTube videos to distinct environments, liberating programmers from the exhaustive task of accounting for every conceivable variation.

DeepMind, Google’s powerhouse in robotics, seized the spotlight with its formidable creation, Robotic Transformer 2 (RT-2). This system can abstract away the minutiae of task execution. To illustrate, commanding a robot to throw away a piece of trash no longer requires explicitly programming it to recognize individual pieces of debris, pick them up, and discard them, a sequence that seems straightforward to humans but has traditionally had to be spelled out step by step for robots.

Furthermore, recent research spotlighted by Carnegie Mellon University draws intriguing parallels between robotic AI agents and the early stages of human learning. Taking inspiration from three-year-old toddlers, the researchers divide the learning process into two modes: active and passive learning.

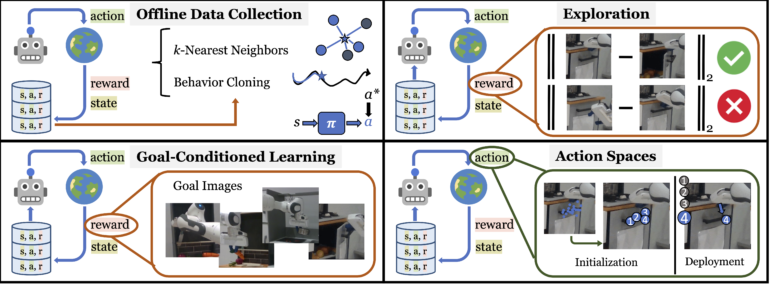

Passive learning, in this context, entails instructing a system via videos or training it with established datasets. Active learning, by contrast, is what its name suggests: the system performs tasks hands-on and adjusts iteratively until it achieves mastery.

Enter RoboAgent, a collaborative endeavor between CMU and Meta AI. This innovation combines both forms of learning, akin to human cognition. In practice, the system learns passively by observing tasks performed in online videos and actively through sessions in which the robot is remotely controlled. Notably, it can transfer what it learns from one setting to another, reminiscent of the VRB concept.

Shubham Tulsiani from CMU’s Robotics Institute envisions this milestone as a significant stride towards creating versatile robots that excel across an array of tasks in diverse, uncharted environments. Tulsiani posits, “RoboAgent’s unique approach involves swift training using domain-specific data, supplemented by an extensive array of free data sourced from the internet. This could potentially elevate robots’ utility in unstructured settings like homes, hospitals, and public spaces.”

One intriguing facet is the open-source and universally accessible nature of the dataset. This resource is tailored for compatibility with off-the-shelf robotics hardware, offering both researchers and corporations the opportunity to harness and cultivate a burgeoning repository of robot data and proficiencies.

Abhinav Gupta, a luminary from the Robotics Institute, underscores the exceptional complexity and proficiency achieved by RoboAgent. He remarks, “The versatility of skills exhibited by RoboAgents surpasses preceding accomplishments, boasting a spectrum of proficiencies unparalleled by any real-world robotic entity. Its efficiency and capacity for generalization to unfamiliar scenarios are truly remarkable.”

Conclusion:

The fusion of toddler-inspired learning principles with robotics heralds a pivotal era. Innovations like VRB and RT-2 demonstrate the integration of external insights and task abstraction. The comparison to human learning underscores the dawn of a new phase. RoboAgent’s synthesis of active and passive learning imitates human cognition. This shift towards multipurpose systems marks a significant market evolution, potentially transforming industries with adaptable and versatile robotic technologies.