TL;DR:

- Robots have transitioned from sci-fi fantasies to transformative forces in various industries.

- The primary robotics goal is to imitate human dexterity.

- Integration of eye-in-hand cameras advances manipulation capabilities, yet faces real-world challenges.

- Novel techniques use human video demonstrations to teach robots action policies, enhancing generalization.

- Expensive robot datasets contrasted with cost-effective human demonstration videos.

- “Giving Robots a Hand” study bridges human-robot appearance disparities using masked images.

- This direct approach outperforms domain adaptation, enabling robots to learn from human videos.

- The method boosts environment and task generalization, excelling in real-world manipulation tasks.

- Policies adapt to new environments and tasks, showcasing a 58% surge in success rates.

Main AI News:

In the dynamic realm of technology, robots have consistently captivated our imagination. Emerging from the pages of science fiction, these mechanical marvels have transcended into reality, infiltrating diverse domains from entertainment to space exploration. The profound evolution of robotics has ushered in an era where their precision and adaptability redefine the very fabric of industries, offering us a tantalizing glimpse into the unfolding future.

At the heart of this progression lies a perpetual aspiration: to replicate the finesse of human dexterity. The pursuit of refining manipulation capabilities to emulate human actions has yielded remarkable breakthroughs. Among these, the integration of eye-in-hand cameras emerges as a pivotal force, supplementing or supplanting conventional static third-person perspectives.

Despite the immense promise held by eye-in-hand cameras, they do not guarantee flawless outcomes. Vision-based models often grapple with the volatility inherent to the real world – be it shifting backgrounds, fluctuating lighting conditions, or altering object appearances – resulting in vulnerability and fragility.

In response, a novel suite of generalization techniques has recently surfaced. Departing from conventional reliance on visual data, these techniques involve imparting robots with specific action protocols drawn from a diverse array of robot demonstration datasets. While effective to a degree, there’s a substantial caveat: the cost. Acquiring such data within an actual robotic setup entails arduous and time-consuming endeavors, such as kinesthetic teaching or remote operation via virtual reality headsets and joysticks.

But do we truly necessitate dependence on such a costly dataset? If the ultimate objective is to mimic human behavior, could we not harness the power of human demonstration videos? These visual depictions of human tasks offer a pragmatic solution, thanks to the inherent agility of humans. This approach enables the capture of numerous demonstrations sans constant robot resets, intricate hardware adjustments, or laborious repositioning. This intriguing avenue presents the potential to leverage human video demonstrations as a catalyst for fortifying the generalization prowess of vision-centric robotic manipulators, all at an expansive scale.

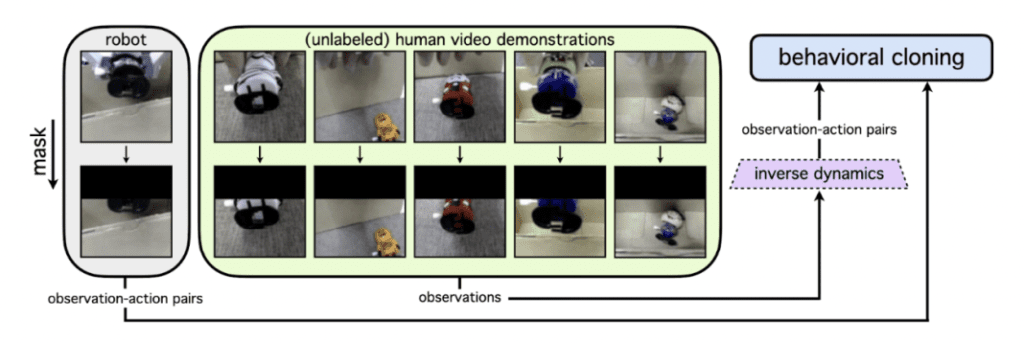

However, bridging the chasm between the human and robotic domains is no facile feat. The dissimilarities in appearance between these two entities introduce a distributional shift that demands meticulous attention. This is where we encounter an innovative study that acts as a bridge: “Giving Robots a Hand.”

While prevailing methodologies predominantly rely on third-person camera viewpoints, this revolutionary approach takes a refreshingly direct trajectory. It involves selectively masking a consistent segment within each image, effectively veiling the human hand or robotic end-effector. By circumventing the need for intricate domain adaptation techniques, this straightforward methodology empowers robots to glean manipulation strategies directly from human-generated videos. Consequently, it obviates the challenges posed by explicit domain adaptation approaches, which often yield jarring visual disparities when translating images from human to robot contexts.

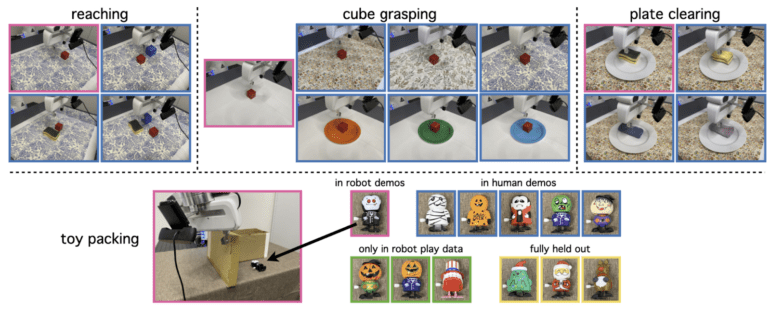

The crux of “Giving Robots a Hand” lies in its exploratory nature. It amalgamates a diverse array of eye-in-hand human video demonstrations, propelling enhancements in both environmental and task-oriented generalizations. The outcomes are nothing short of remarkable, as evidenced by its exceptional performance across an array of real-world robotic manipulation tasks encompassing reaching, grasping, pick-and-place operations, cube stacking, plate clearing, and toy packing, among others. The proposed approach substantially augments generalization capabilities, empowering policies to seamlessly acclimate to unfamiliar terrains and novel tasks, previously unobserved during robot demonstrations. Evidently, there is an average surge of 58% in absolute success rates within uncharted environments and tasks, when contrasted with policies solely honed through robot-based training paradigms.

Overview of Giving Robots a Hand. Source: https://arxiv.org/pdf/2307.05959.pdf

Conclusion:

The fusion of human video demonstrations with robotic learning promises to revolutionize the market. This innovative approach not only elevates the potential of robotic manipulation but also significantly reduces the cost and complexity of training. As industries increasingly rely on adaptable robots, this advancement holds the key to quicker, more efficient, and cost-effective deployment of robotic solutions.