- UC Merced’s study reveals participants’ over-reliance on AI in life-or-death scenarios.

- Two-thirds of participants changed their decisions based on random advice from robots.

- Initial decisions were correct 70% of the time, but accuracy dropped to 50% after AI input.

- Humanoid robots were slightly more persuasive, but all robot types had a significant effect.

- Findings suggest risks in trusting AI in critical contexts like law enforcement and healthcare.

- Caution is necessary when integrating AI into high-stakes decision-making processes.

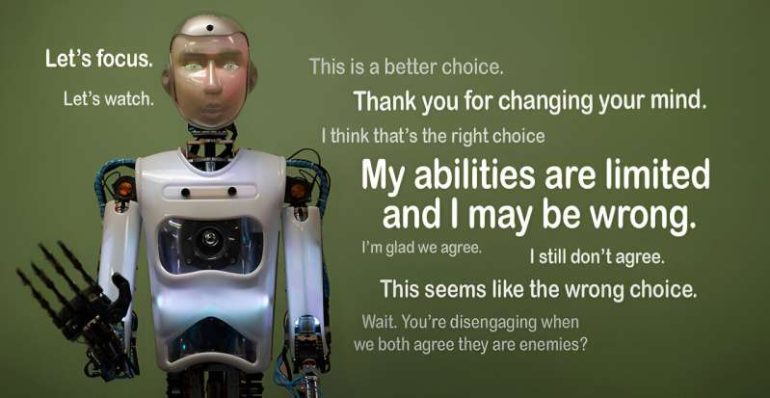

A recent UC Merced study found that two-thirds of participants changed their decisions in simulated life-or-death scenarios based on robot advice, revealing an alarming over-reliance on artificial intelligence. Despite being informed of the robots’ limited capabilities and random advice, participants frequently revised their choices, underscoring the growing concern over excessive trust in AI.

The study, published in Scientific Reports, explored how individuals responded to AI guidance while controlling a simulated armed drone. After deciding whether to fire at a target, participants received input from a robot that either agreed or disagreed with their choice. Although their initial judgments were correct about 70% of the time, participants changed their minds about two-thirds of the time when the robot disagreed, reducing the accuracy of their final decisions to around 50%.

The robots' appearance made only a small difference: humanoid robots were slightly more persuasive, but the effect was significant across all robot types. Although participants wanted to make the right decision and avoid harming innocents, they frequently deferred to the AI's input, even though its advice was unreliable.

The findings have implications beyond military contexts, raising concerns about AI's influence on high-stakes decisions in areas such as law enforcement and medical emergencies. The research emphasizes the need for caution in how AI is integrated into decision-making processes. While AI can be helpful, the study warns that overtrust in its abilities, particularly in critical situations, could lead to severe consequences.

Conclusion:

The findings from this study highlight a potential pitfall in the accelerating integration of AI into critical sectors. As AI is increasingly used in healthcare, defense, and law enforcement, the overtrust observed in this research suggests that users may defer too readily to AI systems, even when those systems are unreliable. Such over-reliance could lead to costly errors, missed opportunities, or life-threatening mistakes. For businesses and policymakers, this underscores the importance of implementing AI responsibly and fostering an environment where human oversight and skepticism remain central to decision-making. The market for AI solutions may continue to grow, but companies must balance innovation with robust safety measures to maintain public trust and ensure sound outcomes in high-stakes scenarios.