TL;DR:

- The FTC has imposed a five-year ban on Rite Aid's use of facial recognition software.

- The FTC alleges "reckless use of facial surveillance systems" that humiliated customers and put their data at risk.

- The proposed order requires Rite Aid to delete the images it collected and to strengthen its data security.

- A 2020 Reuters report revealed that Rite Aid had secretly deployed facial recognition in about 200 U.S. stores.

- According to the FTC, Rite Aid's system produced more false positives in predominantly Black and Asian communities than in predominantly White ones.

- The FTC says Rite Aid failed to tell customers it was using facial recognition and instructed employees not to disclose it.

- Rite Aid disputes the allegations, saying they concern a limited pilot program it has since discontinued.

Main AI News:

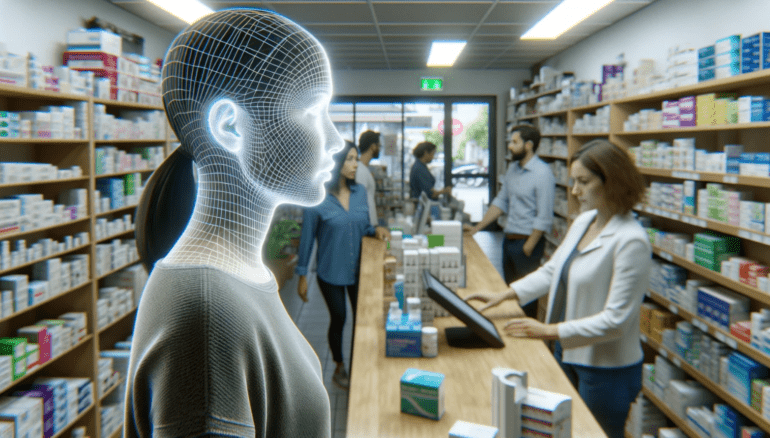

Rite Aid, the U.S. drugstore giant, finds itself at the center of a major controversy as the Federal Trade Commission (FTC) imposes a five-year ban on its use of facial recognition software. The FTC's action responds to what the agency calls Rite Aid's "reckless use of facial surveillance systems," which, it says, left customers humiliated and put their sensitive information at risk.

The FTC's proposed order requires approval from the U.S. Bankruptcy Court because Rite Aid has filed for Chapter 11 bankruptcy protection. It mandates several actions. First, Rite Aid must promptly delete all images collected by its facial recognition system, along with any products derived from those images. Second, the company must establish a robust data security program to protect the personal data it collects.

A 2020 Reuters report uncovered that Rite Aid had surreptitiously implemented facial recognition systems in approximately 200 U.S. stores over an eight-year span, starting in 2012. These systems were largely tested in lower-income, non-white neighborhoods.

As the FTC intensifies its scrutiny of biometric surveillance, Rite Aid has landed firmly in the agency's crosshairs. Among the allegations: Rite Aid, working with two contracted companies, maintained a "watchlist database" of images of people it suspected of criminal activity in its stores. These images, often of poor quality, came from CCTV cameras or employees' mobile phones.

When a customer who supposedly matched an image in the database entered a store, employees received an automatic alert instructing them to take action. According to the FTC, many of these alerts were false positives that led employees to wrongly accuse innocent customers, causing embarrassment, harassment, and other harm.

Acting on these false alerts, employees followed customers through stores, searched them, ordered them to leave, and even called the police to confront or remove them. In some cases, customers were publicly accused of shoplifting or other wrongdoing, sometimes in front of friends and family.

The FTC also alleges that Rite Aid failed to inform customers that it was using facial recognition technology and instructed employees not to disclose this to customers.

The Unmasking of Facial Recognition

Facial recognition software has become one of the most contentious forms of AI-powered surveillance in recent years. Several cities have banned or restricted its use, and lawmakers have sought to regulate how law enforcement uses the technology. Companies such as Clearview AI have faced lawsuits and fines around the world over privacy violations tied to their facial recognition technology.

The FTC's findings regarding Rite Aid also highlight the risk of bias in AI systems. Notably, the FTC says Rite Aid's technology was more likely to generate false positives in stores located in predominantly Black and Asian communities than in predominantly White communities.

The FTC also faults Rite Aid for failing to test or measure the accuracy of its facial recognition system, either before or after deployment.

Rite Aid’s Response

In response, Rite Aid issued a press release saying it was pleased to have reached an agreement with the FTC. However, the company strongly disagrees with the core allegations. Rite Aid says the allegations concern a facial recognition pilot program that it ran in a limited number of stores, and that it stopped using the technology in those stores more than three years ago, before the FTC's investigation began.

Conclusion:

The ban on Rite Aid's use of facial recognition software serves as a cautionary tale for businesses deploying biometric surveillance. It underscores the need for responsible and ethical use of the technology, with oversight and accountability to address privacy concerns and bias in AI systems. Companies in this space must prioritize transparency and accuracy to navigate regulatory challenges and maintain public trust.