- Salesforce AI introduces the SFR-Embedding-Mistral model for text retrieval and NLP tasks.

- The model enhances existing text-embedding models like E5-mistral-7b-instruct and Mistral-7B-v0.1.

- It leverages multi-task training, task-homogeneous batching, and hard negatives for improved performance.

- Techniques such as contrastive loss and teacher models are employed for fine-tuning.

- Trained on diverse datasets, the model shows remarkable generalization across various benchmarks.

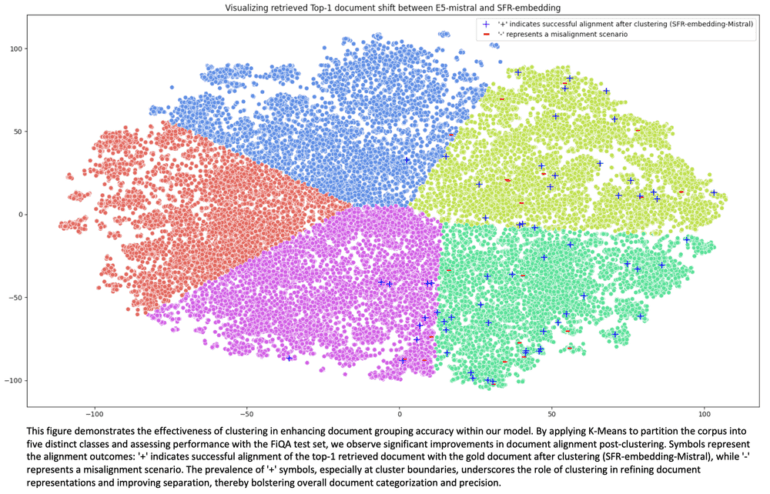

- The integration of clustering tasks with retrieval tasks boosts retrieval performance significantly.

- Task-homogeneous batching and strategic hard negative selection contribute to enhanced accuracy and generalization.

Main AI News:

In the ever-evolving landscape of natural language processing (NLP), Salesforce AI Researchers have introduced the groundbreaking SFR-Embedding-Mistral model. This innovative model aims to tackle the inherent challenges associated with text-embedding models, particularly in tasks such as retrieval, clustering, classification, and semantic textual similarity.

While current text-embedding models like E5-mistral-7b-instruct and Mistral-7B-v0.1 have demonstrated remarkable performance in specific domains, there remains ample room for advancement to achieve superior results across diverse benchmarks.

The SFR-Embedding-Mistral model builds upon the foundations laid by existing models, offering a fresh perspective on enhancing model performance. By incorporating techniques such as multi-task training, task-homogeneous batching, and hard negatives, the researchers have significantly elevated the capabilities of text-embedding models.

Through meticulous fine-tuning on the e5-mistral-7b-instruct model, employing cutting-edge methods like contrastive loss and teacher models for hard negative mining, the SFR-Embedding-Mistral model emerges as a formidable contender in the realm of NLP.

Trained on a diverse array of datasets spanning retrieval, clustering, classification, and semantic textual similarity tasks, the SFR-Embedding-Mistral model exemplifies a paradigm shift in model training methodologies. By embracing multi-task training, the model achieves remarkable generalization capabilities, thereby outperforming its predecessors across various benchmarks.

The integration of clustering tasks alongside retrieval tasks proves to be a pivotal strategy, resulting in substantial improvements in retrieval performance. Furthermore, techniques such as task-homogeneous batching and strategic selection of hard negatives further bolster model accuracy and generalization, cementing the SFR-Embedding-Mistral model’s position at the forefront of text-embedding research and development.

Conclusion:

The introduction of Salesforce AI’s SFR-Embedding-Mistral model marks a significant advancement in text retrieval and NLP capabilities. Its innovative techniques and superior performance across diverse tasks signal a transformative shift in the market, promising enhanced efficiency and accuracy in language processing applications. Organizations can leverage this breakthrough to streamline their text-based operations and gain a competitive edge in an increasingly data-driven landscape.