TL;DR:

- SAM-Med2D aims to bridge the gap between SAM’s proficiency in natural images and its application in medical 2D image analysis.

- Medical imaging’s complexity, diverse modalities, and limited annotated data have posed challenges for AI algorithms.

- SAM, a cutting-edge vision model, has shown promise in zero-shot and few-shot learning on natural images.

- SAM struggles to generalize to multi-modal and multi-object medical datasets due to significant domain differences.

- The study emphasizes the need to adapt SAM for specialized medical imaging and the challenges of acquiring quality medical data.

- SAM-Med2D offers benchmark models and evaluation frameworks, enhancing the field of medical image analysis.

Main AI News:

In the realm of medical image analysis, the quest for precision and versatility is unceasing. The accurate segmentation of tissues, organs, and regions of interest within medical images is pivotal for clinicians striving to diagnose and treat patients with pinpoint accuracy. Furthermore, delving into the intricate morphology, structure, and function of bodily components through quantitative and qualitative analysis of medical images is indispensable for advancing our understanding of diseases. Yet, the multifaceted nature of medical imaging, characterized by diverse modalities, intricate tissue and organ architecture, and a dearth of annotated data, has confined many existing approaches to specific modalities, organs, or pathologies.

This confinement poses a formidable challenge: algorithms are difficult to adapt to diverse clinical contexts, which hinders their generalization. However, a paradigm shift is underway in the AI community, driven by the emergence of large-scale models like ChatGPT, ERNIE Bot, DINO, SegGPT, and the remarkable Segment Anything Model (SAM). SAM, the latest entrant to the league of large-scale vision models, promises a single model for multiple segmentation tasks. It lets users generate masks for regions of interest interactively, whether by clicking points, drawing bounding boxes, or providing text prompts. SAM’s prowess in zero-shot and few-shot learning has garnered considerable acclaim in the realm of natural images.

Efforts are already underway to extend SAM’s zero-shot capabilities to the intricate realm of medical imaging. Nonetheless, SAM encounters hurdles in adapting to multi-modal and multi-object medical datasets, resulting in variable segmentation performance across datasets. The crux of the issue lies in the stark domain gap between natural and medical images. This divergence can be attributed to the disparate data acquisition methods employed: medical images are acquired with precise clinical protocols and dedicated scanners, and they rely on a spectrum of energy sources and physical principles, including electrons, lasers, X-rays, ultrasound, nuclear physics, and magnetic resonance. Consequently, medical images deviate significantly from their natural counterparts, bearing the imprints of physics-based features and energy sources.
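To make "segmentation performance" concrete, here is a minimal sketch of the Dice coefficient, a metric commonly used to score a predicted mask against a ground-truth mask. This article does not say which metric was used to compare SAM across datasets, so treat the choice of Dice, the `dice_score` helper, and the toy masks as illustrative assumptions rather than the study's evaluation code.

```python
from typing import Sequence

# A 2D binary mask as nested sequences: 1 = foreground, 0 = background.
Mask = Sequence[Sequence[int]]


def dice_score(pred: Mask, target: Mask) -> float:
    """Dice coefficient 2|A∩B| / (|A| + |B|) for two same-shaped binary masks."""
    if len(pred) != len(target) or any(
        len(p_row) != len(t_row) for p_row, t_row in zip(pred, target)
    ):
        raise ValueError("masks must have the same shape")
    # Count pixels that are foreground in both masks.
    intersection = sum(
        p & t
        for p_row, t_row in zip(pred, target)
        for p, t in zip(p_row, t_row)
    )
    total = sum(map(sum, pred)) + sum(map(sum, target))
    # Convention assumed here: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


# Hypothetical toy masks: the prediction is the target shifted one pixel left.
target = [[0, 1, 1],
          [0, 1, 1],
          [0, 0, 0]]
shifted = [[1, 1, 0],
           [1, 1, 0],
           [0, 0, 0]]

print(dice_score(target, target))   # identical masks
print(dice_score(shifted, target))  # partial overlap
```

A score of 1 means perfect overlap and 0 means none, so a model whose scores swing widely from one dataset to another is showing exactly the kind of variable performance described above.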

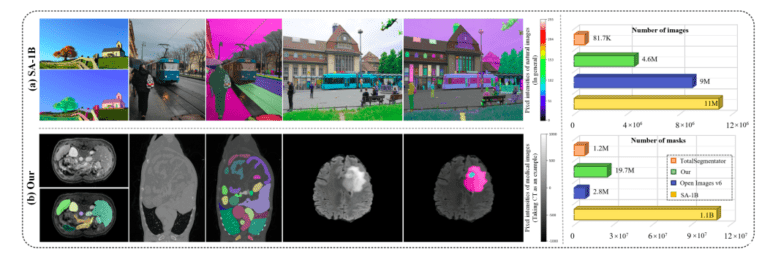

Pixel intensity, color, texture, and distribution characteristics of natural and medical images diverge starkly. SAM, having been trained only on natural images, lacks specialized knowledge of medical imaging. However, furnishing SAM with this knowledge is a formidable task, plagued by exorbitant annotation costs and inconsistent annotation quality. Preparing medical data necessitates expertise, and data quality can fluctuate widely across institutions and clinical trials. The quantity of available medical images pales in comparison to the vast trove of natural images, further complicating the situation.

Consider the data volume disparity between publicly available natural image datasets and medical image datasets, vividly exemplified by TotalSegmentator versus Open Images v6 and SA-1B. It is in this chasm that the study of SAM-Med2D emerges as a beacon of hope. Conceived by researchers from Sichuan University and Shanghai AI Laboratory, SAM-Med2D is poised to be the most exhaustive exploration of SAM’s potential in the realm of medical 2D images.

Conclusion:

SAM-Med2D represents a significant step forward in the convergence of AI and medical image analysis. As AI models like SAM become more versatile and adaptable to complex medical data, the market can anticipate accelerated advancements in diagnostics, treatment planning, and research, ultimately leading to improved healthcare outcomes. This underscores the growing synergy between AI and the healthcare industry, presenting lucrative opportunities for businesses involved in AI-driven medical solutions.