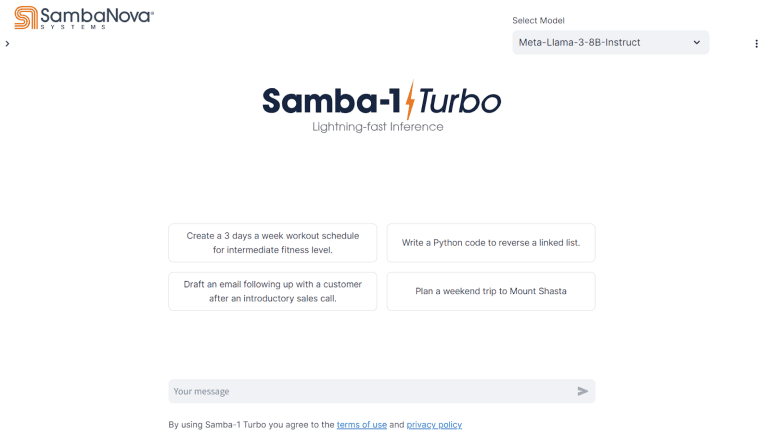

- SambaNova Systems launches Samba-1-Turbo, setting a new standard in AI model processing.

- Samba-1-Turbo processes 1,000 tokens per second at 16-bit precision.

- It runs the Llama-3 Instruct (8B) model on the SN40L chip, which is built around SambaNova's Reconfigurable Dataflow Unit (RDU) rather than a GPU.

- RDU’s Pattern Memory Units (PMUs) minimize data movement, enhancing operational efficiency.

- The SambaFlow compiler maps an entire neural network model onto the RDU as a dataflow graph, reducing latency and improving performance.

- When multiple RDUs are deployed in a system, the architecture automates data and model parallelism, removing the need to partition models by hand.

- Supported models include Meta-Llama-3-8B-Instruct and Mistral-T5-7B-v1, and the SambaLingo suite adds support for multiple languages.

Main AI News:

As demand grows for fast, efficient AI model processing, SambaNova Systems has introduced Samba-1-Turbo, which processes 1,000 tokens per second at 16-bit precision while running the Llama-3 Instruct (8B) model on the SN40L chip. At the heart of Samba-1-Turbo is the Reconfigurable Dataflow Unit (RDU), the component that distinguishes it from conventional GPU-based systems.

Conventional GPUs are often constrained by limited on-chip memory, which forces frequent data transfers between the GPU and off-chip memory. With large models that fit only partially on-chip, this constant data movement leaves much of the GPU's compute capacity underused. SambaNova's RDU takes a different approach: it provides a large pool of distributed on-chip memory through its Pattern Memory Units (PMUs). Because the PMUs sit close to the compute units, they sharply reduce data movement and improve operational efficiency.
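To see why memory placement matters at this speed, here is a back-of-envelope estimate of how many weight bytes must be moved per second to decode 1,000 tokens per second at 16-bit precision. It is a simplified sketch, not a SambaNova figure: it assumes batch size 1, that every weight is read once per generated token, that KV-cache and activation traffic can be ignored, and that "8B" means exactly 8e9 parameters. The function names are hypothetical.

```python
# Back-of-envelope estimate (assumptions, not SambaNova figures):
# - batch size 1, autoregressive decoding
# - every model weight is read once per generated token
# - KV-cache and activation traffic are ignored
# - "8B" is taken as exactly 8e9 parameters

BYTES_PER_PARAM_16BIT = 2  # 16-bit precision = 2 bytes per weight


def weight_bytes(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_16BIT) -> float:
    """Total bytes needed to hold the model weights."""
    return num_params * bytes_per_param


def required_bandwidth(num_params: float, tokens_per_second: float,
                       bytes_per_param: int = BYTES_PER_PARAM_16BIT) -> float:
    """Weight bytes that must be streamed per second to sustain the given decode rate."""
    return weight_bytes(num_params, bytes_per_param) * tokens_per_second


if __name__ == "__main__":
    params = 8e9
    print(f"Weights: {weight_bytes(params) / 1e9:.0f} GB")
    print(f"Bandwidth for 1000 tokens/s: {required_bandwidth(params, 1000) / 1e12:.0f} TB/s")
```

Under these assumptions the weights occupy about 16 GB and sustaining 1,000 tokens per second means streaming roughly 16 TB of weights every second, which is why keeping data close to compute, as the PMUs are designed to do, matters for single-stream decode speed.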

Traditional GPUs execute neural network models kernel by kernel, typically writing each kernel's intermediate results out to off-chip memory before the next kernel reads them back. The SambaFlow compiler instead maps the entire neural network model onto the RDU fabric as a dataflow graph. This enables pipelined dataflow execution, which reduces latency and memory-access overhead and improves performance.
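The contrast between kernel-by-kernel and pipelined dataflow execution can be illustrated with a toy model. This is a hypothetical sketch, not SambaFlow's implementation: each "op" is a scalar function, kernel-by-kernel execution materializes every intermediate tensor in a simulated off-chip buffer, and the dataflow version streams each element through all ops back-to-back so that only the final output is written. The functions `kernel_by_kernel` and `dataflow_pipeline` are illustrative names.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

Op = Callable[[float], float]


def kernel_by_kernel(data: List[float], ops: List[Op]) -> Tuple[List[float], int]:
    """Run each op over the whole tensor, materializing every intermediate.

    Returns the output and the number of elements written to the
    simulated off-chip buffer.
    """
    offchip_writes = 0
    current = list(data)
    for op in ops:
        current = [op(x) for x in current]  # one simulated "kernel launch"
        offchip_writes += len(current)      # intermediate spilled off-chip
    return current, offchip_writes


def dataflow_pipeline(data: Iterable[float], ops: List[Op]) -> Tuple[List[float], int]:
    """Stream each element through all ops in sequence; only the final result is written."""

    def stage(src: Iterator[float], op: Op) -> Iterator[float]:
        # Each stage consumes elements as soon as the previous stage produces them.
        for x in src:
            yield op(x)

    stream: Iterator[float] = iter(data)
    for op in ops:
        stream = stage(stream, op)  # chain stages into one pipeline
    out = list(stream)
    return out, len(out)            # only the final output leaves the pipeline


if __name__ == "__main__":
    ops: List[Op] = [lambda x: x * 2, lambda x: x + 1, lambda x: max(x, 0.0)]
    data = [-1.0, 0.5, 2.0]
    print(kernel_by_kernel(data, ops))
    print(dataflow_pipeline(data, ops))
```

Both versions compute the same result, but with three ops over three elements the kernel-by-kernel path writes nine elements off-chip while the pipelined path writes only the three final outputs. Real hardware adds tiling, buffering, and scheduling concerns that this sketch ignores.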

Running large models on GPUs often requires model parallelism, in which the model is partitioned across multiple GPUs. This partitioning is intricate and typically demands specialized frameworks and coding expertise. When multiple RDUs are deployed in a system, SambaNova's RDU architecture automates both data and model parallelism, removing the need for manual partitioning.
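To make the manual work concrete, here is a minimal sketch of one common form of model parallelism on GPUs, a column-parallel linear layer, written in plain Python with simulated devices. It is a generic illustration of the partitioning developers handle by hand, not a description of how SambaNova automates it; the helper names are hypothetical. The weight matrix is split column-wise across devices, each device multiplies the same input by its shard, and the partial outputs are concatenated (the role an all-gather plays on real hardware).

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain row-by-column matrix product a @ b."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def split_columns(w: Matrix, n_devices: int) -> List[Matrix]:
    """Split weight matrix w column-wise into n_devices contiguous shards."""
    cols = len(w[0])
    if cols % n_devices != 0:
        raise ValueError("columns must divide evenly across devices")
    step = cols // n_devices
    return [[row[d * step:(d + 1) * step] for row in w] for d in range(n_devices)]


def column_parallel_linear(x: Matrix, w: Matrix, n_devices: int) -> Matrix:
    """Each simulated device computes x @ shard; results are concatenated per row."""
    partials = [matmul(x, shard) for shard in split_columns(w, n_devices)]
    # Concatenate each row's partial outputs in device order (an all-gather).
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


if __name__ == "__main__":
    x = [[1.0, 2.0]]
    w = [[1.0, 2.0, 3.0, 4.0],
         [5.0, 6.0, 7.0, 8.0]]
    print(matmul(x, w))
    print(column_parallel_linear(x, w, n_devices=2))
```

Both calls produce the same output, which is the invariant any hand-written partitioning scheme must preserve; in practice developers must also choose split dimensions, insert communication, and balance memory across devices for every layer.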

Samba-1-Turbo runs the Meta-Llama-3-8B-Instruct model, and SambaNova's lineup also includes models such as Mistral-T5-7B-v1, v1olet_merged_dpo_7B, WestLake-7B-v2-laser-truthy-dpo, and DonutLM-v1. SambaNova's SambaLingo suite further supports a range of languages, including Arabic, Bulgarian, Hungarian, Russian, Serbian (Cyrillic), Slovenian, Thai, Turkish, and Japanese, broadening the system's multilingual reach.

The tight integration of hardware and software in Samba-1-Turbo is central to its performance. This approach aims to democratize generative AI by making it more accessible and efficient for enterprises, and it could drive significant advances in AI applications ranging from natural language processing to complex data analysis.

Conclusion:

The introduction of Samba-1-Turbo by SambaNova Systems marks a significant step forward in AI processing capabilities. By combining high token throughput with a dataflow architecture that automates model partitioning, it simplifies deployment and makes generative AI more accessible and efficient for businesses across industries. The launch sets a new performance benchmark in the market and is likely to influence the direction of AI applications.