TL;DR:

- SecFormer is introduced as an advanced optimization framework for Privacy-Preserving Inference (PPI) in large language models, addressing privacy concerns in cloud-hosted services.

- Large language models based on the Transformer architecture face performance bottlenecks when Secure Multi-Party Computing (SMPC) is used for PPI, because their many nonlinear operations are poorly suited to SMPC.

- SecFormer balances performance and efficiency through changes to the model design, replacing high-overhead operations with SMPC-friendly alternatives.

- The framework includes a privacy-preserving GeLU algorithm and efficient algorithms for LayerNorm and Softmax, and it uses knowledge distillation to adapt the Transformer model to SMPC.

- Evaluation on the GLUE benchmark shows that SecFormer outperforms existing approaches in both performance and efficiency, with average performance improvements of 5.6% for BERT-Base and 24.2% for BERT-Large.

- SecFormer achieves PPI speedups of 3.4 and 3.2 times over other frameworks while maintaining comparable performance.

Main AI News:

In the era of cloud-hosted large language models, privacy concerns loom large, especially when sensitive data is involved. Secure Multi-Party Computing (SMPC) offers a way to protect both the inference data and the model parameters. Yet applying it to Privacy-Preserving Inference (PPI) for large language models, especially those built on the Transformer architecture, is slow. For example, BERT-Base takes 71 seconds per sample under SMPC, compared with less than 1 second for plain-text inference. The bottleneck comes from the many nonlinear operations in the Transformer architecture, which are poorly suited to SMPC. To address this, researchers have introduced SecFormer, an optimization framework designed to balance performance and efficiency in PPI for Transformer models.
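To make the bottleneck concrete, here is a minimal plaintext simulation of two-party additive secret sharing, one common SMPC building block. This is an assumption for illustration: the article does not say which SMPC protocol SecFormer builds on, and the ring size and the locally simulated Beaver triples are hypothetical choices. Additions are local to each party, while every multiplication consumes a preprocessed triple and requires the parties to open masked values, which in a real deployment means a round of communication. Exponentials, maxima, and GeLU can only be assembled from many such multiplications and comparisons, which is why they dominate the cost of PPI.

```python
import secrets

MOD = 2**64  # illustrative ring size; real frameworks fix their own


def share(x, n=2):
    """Split integer x into n additive shares that sum to x modulo MOD."""
    parts = [secrets.randbelow(MOD) for _ in range(n - 1)]
    parts.append((x - sum(parts)) % MOD)
    return parts


def reconstruct(parts):
    """Open a shared value by summing every party's share."""
    return sum(parts) % MOD


def add(xs, ys):
    """Secure addition: each party adds its own shares, no communication."""
    return [(a + b) % MOD for a, b in zip(xs, ys)]


def beaver_triple(n=2):
    """Shares of random a, b and c = a*b (simulated locally in this sketch)."""
    a, b = secrets.randbelow(MOD), secrets.randbelow(MOD)
    return share(a, n), share(b, n), share(a * b % MOD, n)


def mul(xs, ys):
    """Secure multiplication with a Beaver triple.

    The parties open d = x - a and e = y - b (one communication round),
    then use x*y = c + d*b + e*a + d*e to get shares of the product.
    """
    a_sh, b_sh, c_sh = beaver_triple(len(xs))
    d = reconstruct([(x - a) % MOD for x, a in zip(xs, a_sh)])
    e = reconstruct([(y - b) % MOD for y, b in zip(ys, b_sh)])
    z = [(c + d * b + e * a) % MOD for a, b, c in zip(a_sh, b_sh, c_sh)]
    z[0] = (z[0] + d * e) % MOD  # the public term is added by one party only
    return z


x, y = share(6), share(7)
print(reconstruct(add(x, y)))  # prints 13
print(reconstruct(mul(x, y)))  # prints 42
```

Each call to `mul` opens two masked values, so a function built from dozens of multiplications costs dozens of communication rounds, while `add` costs none.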

The Dominance of Transformer-Based Large Language Models

Large language models, particularly those built on the Transformer architecture, have delivered exceptional performance across a wide range of tasks. However, the rise of the Model-as-a-Service (MaaS) paradigm has raised privacy concerns: recent studies have shown that sensitive information can potentially be extracted from models such as GPT-4 using only a handful of samples. At the same time, attempts to accelerate PPI for Transformer models by replacing nonlinear operations with SMPC-friendly alternatives have often degraded model performance.
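As an illustration of that trade-off, the sketch below compares the exact GeLU with a hypothetical quadratic replacement, 0.125x² + 0.25x + 0.5, which needs only one secure multiplication. The coefficients and the input range [-4, 4] are illustrative assumptions, not values taken from the article. The quadratic is cheap, but its largest error on this range is about 1.5 (at x = -4), and errors of this size propagate through every layer of the network.

```python
import math


def gelu(x):
    """Exact GeLU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def quad_gelu(x):
    """Hypothetical SMPC-friendly stand-in: one quadratic, one multiplication."""
    return 0.125 * x * x + 0.25 * x + 0.5


def max_abs_error(f, g, lo=-4.0, hi=4.0, steps=801):
    """Largest |f(x) - g(x)| over an evenly spaced grid on [lo, hi]."""
    xs = [lo + (hi - lo) * i / (steps - 1) for i in range(steps)]
    return max(abs(f(x) - g(x)) for x in xs)


print(round(max_abs_error(gelu, quad_gelu), 3))  # prints 1.5
```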

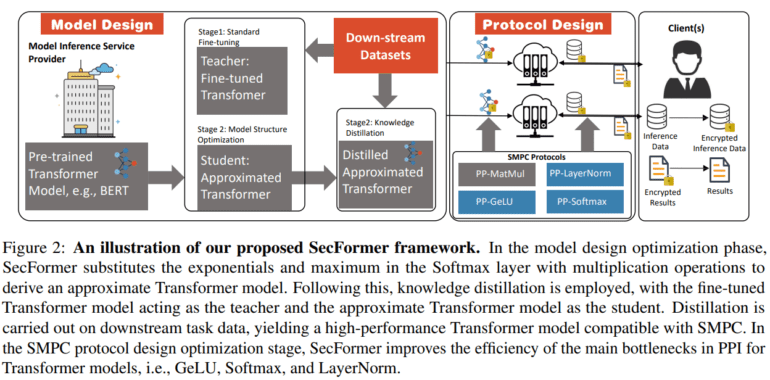

SecFormer takes a different route, balancing performance and efficiency through changes to the model design. It replaces high-overhead operations with SMPC-friendly alternatives; for example, it substitutes Softmax, whose exponential and maximum operations are costly under SMPC, with a combination of multiplication and division operations. Because such substitutions change the model's behavior, knowledge distillation is then used to refine the Transformer model so that it retains its accuracy with the SMPC-friendly operations in place. SecFormer also introduces a privacy-preserving GeLU algorithm based on segmented polynomials and efficient privacy-preserving algorithms for LayerNorm and Softmax, with the aim of preserving privacy without sacrificing model performance.
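The substitutions described above can be sketched in plain Python (no secret sharing) to show that each replacement needs only additions, multiplications, and comparisons. Several choices here are assumptions for illustration, not SecFormer's published parameters: the quadratic form (x + c)² in place of exp(x) with c = 5, a Goldschmidt-style iteration for the single division, a hypothetical public `bound` that scales the denominator into the iteration's convergence range, and a GeLU split at hypothetical breakpoints ±2 with a truncated Taylor series of erf in the middle segment. Under real SMPC, the branch on `x` would itself be a secure comparison.

```python
import math


def goldschmidt_reciprocal(s, bound, iters=8):
    """Approximate 1/s with multiplications and additions only.

    Assumes 0 < s < 2 * bound; `bound` is a hypothetical public scale that
    puts d = s / bound in (0, 2), where the iteration converges.
    """
    n = 1.0 / bound  # scaling by a public constant is cheap under SMPC
    d = s / bound
    for _ in range(iters):
        f = 2.0 - d  # correction factor
        n *= f
        d *= f  # d approaches 1, so n approaches 1 / s
    return n


def quad_softmax(xs, c=5.0, bound=256.0):
    """Softmax stand-in: (x_i + c)^2 / sum_j (x_j + c)^2, no exp and no max."""
    num = [(x + c) * (x + c) for x in xs]
    inv = goldschmidt_reciprocal(sum(num), bound)  # one division per row
    return [v * inv for v in num]


def erf_taylor(z, terms=9):
    """Truncated Maclaurin series of erf: a plain polynomial in z."""
    total, power = 0.0, z
    for n in range(terms):
        total += (-1) ** n * power / (math.factorial(n) * (2 * n + 1))
        power *= z * z
    return 2.0 / math.sqrt(math.pi) * total


def segmented_gelu(x, t=2.0):
    """GeLU from three segments split at hypothetical breakpoints -t and t."""
    if x < -t:  # under SMPC this branch would be a secure comparison
        return 0.0
    if x > t:
        return x
    return 0.5 * x * (1.0 + erf_taylor(x / math.sqrt(2.0)))


def exact_gelu(x):
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


if __name__ == "__main__":
    print(quad_softmax([1.0, 2.0, 3.0]))  # entries sum to (approximately) 1
    grid = [i / 100 for i in range(-600, 601)]
    worst = max(abs(segmented_gelu(x) - exact_gelu(x)) for x in grid)
    print(f"max GeLU error on [-6, 6]: {worst:.3f}")
```

With these assumed parameters the GeLU error on [-6, 6] stays around 0.045 at most, concentrated at the breakpoints. Note also that (x + c)² preserves the ordering of exp(x) only for x > -c, so the quadratic replacement changes the model's outputs; that is the gap the knowledge distillation step is meant to close.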

SecFormer’s Remarkable Performance

An evaluation on the GLUE benchmark with Transformer models such as BERT-Base and BERT-Large shows that SecFormer outperforms existing state-of-the-art approaches in both performance and efficiency, with average performance improvements of 5.6% for BERT-Base and 24.2% for BERT-Large. Compared with existing frameworks that rely on model design and SMPC protocol optimizations, SecFormer achieves PPI speedups of 3.4 and 3.2 times while maintaining comparable performance. Taken together, the experiments indicate that SecFormer can make privacy-preserving inference for large language models substantially faster while upholding strict privacy standards.

Conclusion:

SecFormer’s introduction marks a significant advance in the market for large language models. It makes privacy-preserving inference faster while keeping both user data and model parameters protected, which makes it valuable for industries that rely on sensitive data. As privacy concerns continue to grow, SecFormer’s balance between performance and efficiency could make it an important tool for deploying large language models where data security matters.