TL;DR:

- Security is a growing concern in AI/ML chips and tools due to the rapidly evolving nature of the industry.

- The constant changes in algorithms, EDA tools, and chip disaggregation increase the potential for malicious activities.

- Adversarial attacks, data poisoning, model inversion, model extraction, and supply chain vulnerabilities pose significant risks.

- Over-reliance and over-trust in AI systems can lead to security breaches.

- Enhancing EDA tools and limiting the role of AI, for example by using reinforcement learning as a tool rather than an autonomous system and by integrating scan insertion, help mitigate security risks.

- Developing standards and ensuring the provenance of chips and their components are crucial for maintaining security.

- Innovative approaches, such as running AI on bare metal and securing data in use, are being explored.

Main AI News:

The rapidly evolving landscape of AI and machine learning (ML) chips is giving rise to significant security challenges. As the chip industry races to introduce new devices, the task of ensuring security becomes even more complex. It is particularly difficult to secure a technology that is expected to adapt and evolve over time.

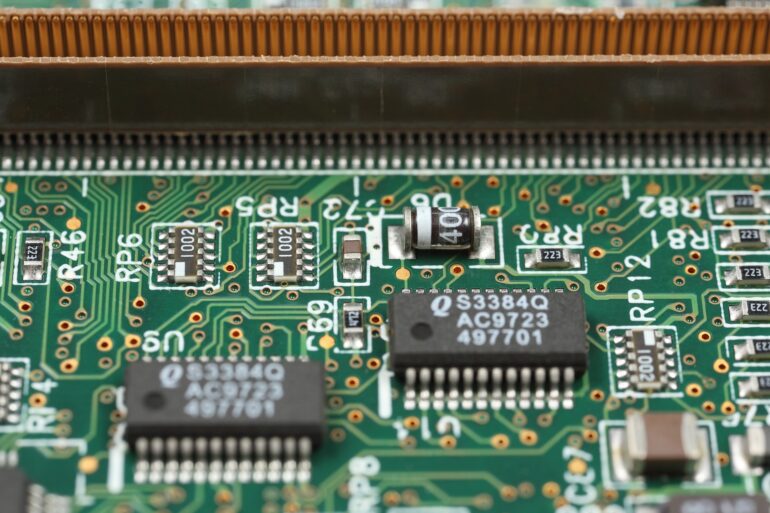

Unlike the past, when tools and methodologies were relatively static, everything is now in constant motion. Algorithms are being modified, electronic design automation (EDA) tools are incorporating new features, and chips themselves are being disaggregated into multiple components, some of which include AI/ML/DL capabilities.

This dynamic environment increases the potential for malicious activity, intellectual property (IP) theft, and attacks that can compromise data integrity or lead to ransomware incidents. Such attacks range from obvious to subtle, and the subtle ones can generate errors that are hard to spot in systems whose outputs are probabilistic. They can also skew accuracy toward an attacker's specific purpose, causing significant disruptions.

The challenge lies in defining accuracy and developing algorithms that can reliably determine the correctness of AI models. Jeff Dyck, senior director of engineering at Siemens Digital Industries Software, highlights the difficulty of proving the correctness of an AI model, explaining that gathering data and comparing it to the model’s predictions is a step in the right direction, though not foolproof.
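The "gather data and compare it with the model's predictions" approach can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the model is treated as a plain callable, reference measurements are available as (input, measured value) pairs, and a fixed absolute tolerance is taken as the pass criterion. The function name `validate_against_measurements`, the toy model, and the data are all hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def validate_against_measurements(
    model: Callable[[float], float],
    measurements: Sequence[Tuple[float, float]],
    tolerance: float,
) -> Tuple[float, List[float]]:
    """Compare model predictions with measured reference data.

    Returns the fraction of samples whose prediction error is within
    `tolerance`, plus the inputs whose error exceeded it.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    failures = [x for x, measured in measurements
                if abs(model(x) - measured) > tolerance]
    pass_rate = 1.0 - len(failures) / len(measurements)
    return pass_rate, failures


def model(x: float) -> float:
    """Hypothetical model under test: predicts y = 2x."""
    return 2.0 * x


# Hypothetical measurements; the last one disagrees with the model.
data = [(0.0, 0.05), (1.0, 2.02), (2.0, 3.97), (3.0, 6.60)]
rate, bad = validate_against_measurements(model, data, tolerance=0.1)
print(rate, bad)  # 0.75 [3.0]
```

As Dyck's caveat suggests, a high pass rate only shows agreement with the data collected so far; it says nothing about inputs outside that set.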

However, these efforts also open the door to less obvious issues, such as programming bias. Bias can infiltrate the deep-learning process at various stages, sometimes long before data collection begins, and traditional computer science practices are ill-equipped to detect and address it.

The fundamental question is whether algorithms are resilient and programmable enough to minimize inaccuracies and withstand attacks on the data they rely on. A 2019 study by Harvard's Belfer Center argued that AI attacks expand the range of entities capable of executing cyberattacks. These attacks also introduce novel ways of weaponizing data, which calls for changes in how data is collected, stored, and used.

Initially, the primary challenge for AI/ML/DL developers was to rapidly deploy functional systems and subsequently improve their accuracy. However, many of these systems rely on off-the-shelf algorithms that offer limited visibility into their inner workings, and the hardware they run on can quickly become outdated. Consequently, these systems act as black boxes, and when problems arise, companies utilizing the technology often lack the knowledge to address them effectively.

An ongoing study at the University of Virginia indicates that the odds of compromising a commercial AI system are as high as 1 in 2, compared with odds of 1 in 400 million for a commonly used digital device employing commercial encryption. The National Security Commission on AI (NSCAI) reinforced this concern in its 756-page final report in 2021, emphasizing that adversarial attacks on commercial ML systems are not theoretical but already happening.

It is important to note that the terminology used in this context can quickly become convoluted. ML and AI are increasingly utilized to develop AI chips as they can efficiently identify flaws and optimize designs for performance, power efficiency, and area utilization. However, if an AI chip malfunctions, it becomes challenging to identify the root cause due to its opaque nature, adaptability to different applications, and unexpected behaviors.

Adversarial Attacks:

A group of white-hat hackers from the Infosec.live online community, composed of cybersecurity professionals, identified five potential vulnerabilities associated with AI adoption in semiconductor development:

1. Adversarial machine learning: This branch of AI research, dating back to the early 2000s, focuses on manipulating AI algorithms to introduce subtle errors. For example, an adversary could cause an autonomous vehicle to occasionally misinterpret GPS data.

2. Data poisoning: By injecting faulty training data, an attacker can manipulate the behavior of an AI-driven device in ways that are not immediately apparent. Consequently, the device may exhibit unacceptable behaviors, even though the calculations appear to be correct. This type of attack is particularly insidious as compromised AI systems may be used to train other systems.

3. Model inversion: An attacker can infer information about a chip’s architecture by analyzing the output of an AI algorithm. This knowledge can then be used to reverse-engineer the chip, potentially leading to unauthorized replication or the introduction of vulnerabilities.

4. Model extraction: If an attacker manages to extract the model used to design a semiconductor, they can exploit this information to create unauthorized copies of the chip or modify it to introduce vulnerabilities.

5. Supply chain attacks: Modifications to the algorithms used in AI systems are difficult to detect. Determining the source and integrity of commercial algorithms, and ensuring they are not corrupted during download or updates, is a significant challenge.
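Data poisoning (item 2) can be illustrated with a deliberately tiny, hypothetical example in Python. The toy classifier below sets its decision threshold halfway between the two class means. Injecting three mislabeled points shifts that threshold enough to flip the prediction for a chosen input, even though every training calculation is still "correct." The classifier, the data, and the function names are assumptions for illustration, not a model of any real EDA or AI system.

```python
from statistics import mean
from typing import List, Tuple

Sample = Tuple[float, int]  # (feature value, label 0 or 1)


def train_threshold(data: List[Sample]) -> float:
    """Toy classifier: threshold halfway between the two class means."""
    mean0 = mean(x for x, y in data if y == 0)
    mean1 = mean(x for x, y in data if y == 1)
    return (mean0 + mean1) / 2


def predict(threshold: float, x: float) -> int:
    return 1 if x >= threshold else 0


clean = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]
# Attacker injects high-valued points that are falsely labeled as class 0.
poison = [(12.0, 0), (12.0, 0), (12.0, 0)]

t_clean = train_threshold(clean)
t_poisoned = train_threshold(clean + poison)
target = 6.0
print(t_clean, predict(t_clean, target))        # 5.0 1
print(t_poisoned, predict(t_poisoned, target))  # 7.5 0
```

The poisoned model still trains without errors; only its behavior on certain inputs changes, which is what makes this class of attack hard to notice.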

Thomas Andersen, vice president for AI and machine learning at Synopsys, emphasizes that AI systems are susceptible to various attacks, including evasion, data poisoning, and software flaw exploitation. Data security is also a growing concern, as a significant portion of an AI system’s value lies in its data.
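An evasion attack, the practical form of the adversarial machine learning described in item 1, can be sketched against a linear classifier. The Python example assumes white-box access to hypothetical weights `w` and bias `b` and applies a fast-gradient-sign-style step: because the gradient of a linear score with respect to the input is simply `w`, moving each feature by at most `eps` against the sign of its weight lowers the score as much as possible for that budget. It illustrates the general technique only.

```python
from typing import List


def score(w: List[float], b: float, x: List[float]) -> float:
    """Linear classifier score; class 1 if score >= 0, else class 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm_linear(w: List[float], x: List[float], eps: float) -> List[float]:
    """Shift each feature by eps against the score gradient (which equals w)."""
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


# Hypothetical model parameters and input.
w = [0.5, -0.25, 0.75]
b = -0.5
x = [1.0, 0.5, 0.25]

x_adv = fgsm_linear(w, x, eps=0.1)
print(score(w, b, x) >= 0, score(w, b, x_adv) >= 0)  # True False
```

No feature moves by more than 0.1, yet the predicted class flips, which is the "subtle error" the list above warns about.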

Enhancing Tools and Limiting AI:

On the EDA side, reinforcement learning forms the basis of many existing approaches. It functions as a tool rather than an autonomous system and draws on knowledge gained from previous designs, which makes it harder to compromise. No one can claim complete immunity to attacks, but there is a high probability that such attacks would be detected.

AI is primarily used in the analysis of tape-out and silicon-level designs. These materials are typically well-controlled, and the AI tools employed have extensive training sets derived from best-practice design data collected over the years. The vetting process for these training sets is rigorous, ensuring limited access and providing an effective defense against compromises in design.

Additionally, integrating scan insertion into the design process contributes to security. Scan chains, which are inserted into digital chips to make internal state controllable and observable during production testing, can also help detect potential trojans or other suspicious elements that could compromise security.
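How a scan chain exposes internal state can be sketched as a toy Python simulation. The model is simplified and hypothetical: the flip-flops act as a shift register in test mode, a pattern is shifted in, one capture cycle loads the outputs of the combinational logic, and the response is shifted out and compared with that of a trusted ("golden") logic model. The 3-bit logic functions and the trojan trigger are invented for illustration; production scan test uses ATPG-generated patterns on far larger designs.

```python
from itertools import product
from typing import Callable, List

Bits = List[int]
Logic = Callable[[Bits], Bits]  # combinational logic: current state -> next state


def scan_test(logic: Logic, pattern: Bits) -> Bits:
    """Shift a pattern into the chain, capture once, and shift the response out."""
    n = len(pattern)
    chain = [0] * n
    # Shift-in (test mode): one bit enters per clock; feeding in reverse
    # leaves the chain holding `pattern` in order after n clocks.
    for bit in reversed(pattern):
        chain = [bit] + chain[:-1]
    # Capture (functional mode): flip-flops load the combinational outputs.
    chain = logic(chain)
    # Shift-out (test mode): the last flip-flop drives the scan-out pin.
    out = []
    for _ in range(n):
        out.append(chain[-1])
        chain = [0] + chain[:-1]
    return out[::-1]  # restore flip-flop order


def golden_logic(s: Bits) -> Bits:
    a, b, c = s
    return [a ^ b, b & c, a | c]


def trojan_logic(s: Bits) -> Bits:
    """Same as golden, but a hidden trigger (all ones) flips bit 0."""
    a, b, c = s
    trigger = a & b & c
    return [(a ^ b) ^ trigger, b & c, a | c]


mismatches = [list(p) for p in product([0, 1], repeat=3)
              if scan_test(golden_logic, list(p)) != scan_test(trojan_logic, list(p))]
print(mismatches)  # [[1, 1, 1]]
```

Only one of the eight patterns exposes the modification. Exhaustive patterns are feasible only for tiny examples like this one, and a trojan with a rarer trigger would be correspondingly harder to hit.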

While progress has been made on the hardware side, the AI systems developed using these chips present a different set of challenges. Microsoft data scientists Ram Shankar Siva Kumar and Hyrum Anderson argue that proprietary ML-powered systems are no more secure than open-source alternatives. Once users can access and replicate an ML-powered system, they gain the ability to attack it.
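The point that query access is enough to start replicating a model can be illustrated with a minimal model-extraction sketch in Python, in the spirit of item 4 above. It assumes a hypothetical black-box service that returns the raw score of a secret linear model. Querying the all-zeros input reveals the bias, and querying each unit vector reveals one weight, so d + 1 queries recover a d-feature model exactly. Real services and models are rarely this simple; the names `make_black_box` and `extract_linear` are illustrative.

```python
from typing import Callable, List, Tuple

Query = Callable[[List[float]], float]


def make_black_box(weights: List[float], bias: float) -> Query:
    """Hypothetical prediction API that hides a linear model's parameters."""
    def query(x: List[float]) -> float:
        return sum(w * xi for w, xi in zip(weights, x)) + bias
    return query


def extract_linear(query: Query, d: int) -> Tuple[List[float], float]:
    """Recover weights and bias of a linear model using d + 1 queries."""
    bias = query([0.0] * d)
    weights = []
    for i in range(d):
        unit = [0.0] * d
        unit[i] = 1.0
        weights.append(query(unit) - bias)  # f(e_i) - f(0) = w_i
    return weights, bias


secret = make_black_box([0.5, -1.25, 2.0], 0.75)
w_hat, b_hat = extract_linear(secret, 3)
print(w_hat, b_hat)  # [0.5, -1.25, 2.0] 0.75
```

Returning only class labels instead of raw scores, rate-limiting queries, and monitoring query patterns can raise the cost of this kind of attack, though they do not make extraction impossible.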

One of the difficulties stems from the fact that many AI designs are relatively new, with algorithms still undergoing significant modifications and updates. While design teams are aware of known vulnerabilities, there are numerous vulnerabilities yet to be discovered.

Overconfidence in automated systems has long been a security problem. Various studies have shown that people tend to keep trusting an automated system even after it has demonstrated vulnerabilities. The issue is over-trust and unrealistic expectations, and addressing it requires standards that encompass both hardware and software.

Raj Jammy, the chief technologist at MITRE Engenuity and executive director of the Semiconductor Alliance, stresses the importance of quantifiable security measures and provenance for chips and their constituent components. Carefully considering the origin, version, and maintenance of chip design components, including IP blocks, is vital. Implementing a standard of care helps ensure security throughout the entire semiconductor design process.
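One way to make provenance checks concrete is a manifest that records each IP block's origin, version, and a cryptographic digest of the vetted files, checked again before integration. The Python sketch below assumes a hypothetical record format, hypothetical supplier names, and SHA-256 digests; it is not MITRE Engenuity's or any standard's actual scheme.

```python
import hashlib
from dataclasses import dataclass
from typing import List

# Hypothetical set of suppliers approved by the design team.
APPROVED_ORIGINS = {"vendor-a", "in-house"}


@dataclass(frozen=True)
class ProvenanceRecord:
    """What was recorded when an IP block was vetted (hypothetical format)."""
    name: str
    origin: str
    version: str
    sha256: str


def check_block(record: ProvenanceRecord, origin: str, version: str,
                content: bytes) -> List[str]:
    """Return provenance problems; an empty list means the block passed."""
    problems = []
    if record.origin not in APPROVED_ORIGINS:
        problems.append("origin not approved")
    if origin != record.origin:
        problems.append("origin mismatch")
    if version != record.version:
        problems.append("version mismatch")
    if hashlib.sha256(content).hexdigest() != record.sha256:
        problems.append("digest mismatch")
    return problems


rtl = b"module uart_tx(input clk); endmodule"
record = ProvenanceRecord("uart_tx", "vendor-a", "2.1",
                          hashlib.sha256(rtl).hexdigest())
print(check_block(record, "vendor-a", "2.1", rtl))              # []
print(check_block(record, "vendor-a", "2.1", rtl + b"\n// x"))  # ['digest mismatch']
```

A matching digest only proves the files are the ones that were vetted; it says nothing about whether the vetted version was trustworthy, which is why origin, version, and maintenance history are tracked alongside it.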

Diverse Approaches:

As weaknesses in AI systems become apparent, innovative approaches are emerging to tackle these challenges. Two notable examples are Axiado and Vaultree.

Axiado, a startup, adopts a unique approach by running AI on bare metal, which inherently enhances security. By storing models on secure bare-metal systems rather than conventional higher-level systems like Linux, vulnerabilities arising from the higher levels of the system are significantly reduced. Axiado continuously builds data lakes consisting of various vulnerability and attack data sets, further reinforcing its security measures.

Vaultree, an Irish cybersecurity startup, addresses the vulnerability of data in use. Its tool encrypts sensitive information while it is being processed by generative AI services, even when those services are used through public accounts. Data shared on public servers can effectively become public, but using the Vaultree tool with a registered business account keeps sensitive information encrypted and processed securely.
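The general idea of computing on data that stays encrypted can be illustrated with a toy additively homomorphic scheme. The Python sketch below implements textbook Paillier encryption with tiny, hard-coded primes, so it is insecure and only demonstrates the principle: a party holding only ciphertexts can add the underlying values without decrypting them. It is not a description of Vaultree's technology. It relies on the three-argument `pow` with exponent -1 for modular inverses (Python 3.8 or later).

```python
import math
import secrets

# Toy parameters: far too small for any real use.
P, Q = 293, 433
N = P * Q
N2 = N * N
G = N + 1                                           # standard simple generator choice
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)                                # valid because G = N + 1


def encrypt(m: int) -> int:
    """Encrypt 0 <= m < N with fresh randomness r coprime to N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N2) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    u = pow(c, LAM, N2)
    return ((u - 1) // N * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the plaintexts (mod N)."""
    return (c1 * c2) % N2


a, b = 1200, 345
c_sum = add_encrypted(encrypt(a), encrypt(b))  # computed without decrypting
print(decrypt(c_sum))  # 1545
```

Paillier supports only addition on ciphertexts; fully homomorphic schemes extend this to arbitrary computation at a much higher computational cost.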

These are just a few examples of the diverse strategies being employed to enhance security in AI systems. With the constant evolution of AI and ML chips, comprehensive security measures must be implemented at various levels, including algorithms, data, hardware, and standards. By leveraging multiple AI algorithms, securing data access, and emphasizing thorough testing and verification, the semiconductor industry can better safeguard against potential attacks and vulnerabilities.

Conclusion:

The escalating security challenges in AI/ML chips and tools necessitate a comprehensive approach to safeguarding the market. Industry stakeholders must prioritize the development of secure tools and algorithms while ensuring transparency and explainability. Establishing rigorous standards, verifying the provenance of components, and adopting secure practices throughout the supply chain are essential. Furthermore, innovative solutions like running AI on secure bare metal and encrypting data in use showcase the market's commitment to addressing security concerns. By proactively addressing these challenges, the market can build trust, enhance resilience, and foster the widespread adoption of AI/ML technologies.