- Security experts uncover a sophisticated Russian disinformation campaign dubbed “CopyCop” that leverages AI to manipulate public opinion.

- CopyCop utilizes Generative AI (GenAI) to plagiarize and distort content from reputable news outlets, tailoring narratives to sow discord and influence Western voters.

- Concerns escalate over the potential impact of AI-driven disinformation on upcoming elections in the UK and US.

- Recorded Future emphasizes the need for proactive measures by media outlets and governments to counter such influence networks.

- Collaboration between stakeholders, including tech companies and civil society, is deemed essential to combat the proliferation of disinformation online.

Main AI News:

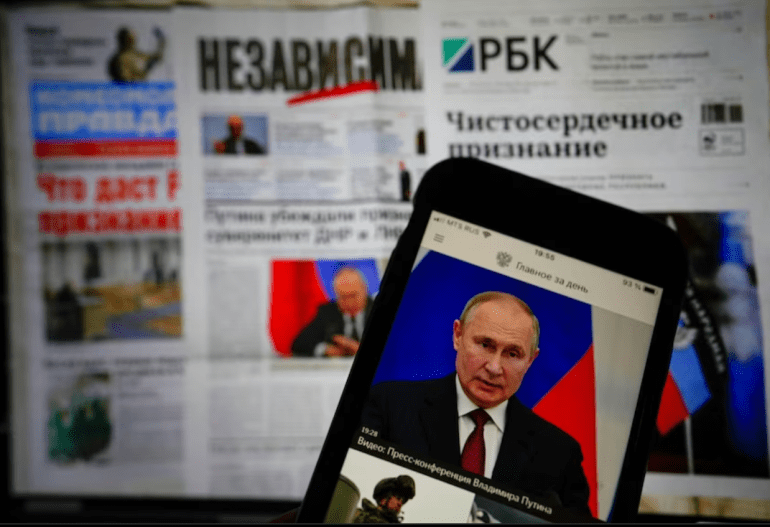

Election disinformation is drawing heightened scrutiny as security experts raise alarms about the proliferation of deceptive narratives. The emergence of sophisticated AI-driven networks, such as the recently uncovered Russian disinformation campaign named “CopyCop,” has raised the stakes further.

Recorded Future, a prominent player in threat intelligence, has sounded the alarm about the insidious tactics employed by CopyCop. Leveraging advanced Generative AI (GenAI), this network strategically appropriates and distorts content sourced from reputable news outlets. The objective? To manipulate public sentiment, especially within Western democracies, by injecting tailored political biases into the narrative.

Under the guise of legitimate news sources, CopyCop disseminates its concocted stories across a spectrum of platforms. From mimicking established media outlets like Al Jazeera and Fox News to fabricating entirely fictitious news domains like London Crier, the network’s reach is extensive. Its narratives aim to exacerbate existing societal fault lines, from inflaming tensions over the Israel-Hamas conflict to casting doubt on government policies and fostering discord between nations.

The implications are dire. With pivotal elections approaching in both the UK and the US, AI-fueled manipulation casts a long shadow over the democratic process. Clément Briens, a threat intelligence analyst at Recorded Future, underscores the urgency of the situation, warning of CopyCop’s potential to wield unprecedented influence over public opinion.

Addressing this menace requires a concerted effort on all fronts. Briens advocates for proactive measures by media outlets and governmental bodies to identify and dismantle such influence networks. Collaboration with internet governance bodies and hosting providers is paramount to curb the proliferation of infringing domains. Moreover, transparency in exposing these operations and accurately gauging their impact is crucial in mitigating their sway.

The ramifications extend beyond the immediate threat posed by CopyCop. As AI technology evolves, similar disinformation campaigns are likely to become more common. Trust in mainstream media and the integrity of democratic processes hang in the balance.

Recorded Future underscores the imperative of collective action. Governments, tech firms, and civil society must forge stronger alliances to combat disinformation and safeguard democratic discourse in the digital age. Only through coordinated efforts can we hope to stem the tide of deception and preserve the integrity of our electoral systems.

Conclusion:

The emergence of CopyCop underscores the evolving landscape of disinformation, fueled by advanced AI technologies. As such campaigns pose a significant threat to democratic processes and public trust, businesses must invest in robust strategies to counteract these manipulative tactics. Moreover, collaboration among stakeholders, including governments, tech companies, and civil society, is imperative to safeguard the integrity of information ecosystems and protect democratic ideals.