- Large Language Models (LLMs) are evolving rapidly, showcasing human-like capabilities in text generation, question answering, and coding.

- Reinforcement Learning from Human Feedback (RLHF) offers promise in fine-tuning LLMs to align with human preferences.

- SPPO, an innovative approach, addresses RLHF challenges by framing it within two-player constant-sum games and leveraging self-play mechanisms.

- Developed by researchers from UCLA and Carnegie Mellon University, SPPO demonstrates enhanced convergence and scalability for large language models.

- Evaluation using GPT-4 on platforms like AlpacaEval 2.0 and MT-Bench showcases SPPO’s superior performance, particularly in controlling output length and avoiding over-optimization.

Main AI News:

In the realm of Large Language Models (LLMs), advancements have been awe-inspiring, showcasing capabilities akin to human text generation, question answering, and even coding. Yet, the journey towards reliability, safety, and ethical integrity remains fraught with challenges. Enter Reinforcement Learning from Human Feedback (RLHF), also known as Preference-based Reinforcement Learning (PbRL), offering a beacon of hope. This paradigm has proven its mettle in refining LLMs to resonate with human inclinations, thereby amplifying their utility.

Traditionally, RLHF methods such as InstructGPT leaned on either explicit or implicit reward frameworks, like the Bradley-Terry model. However, contemporary scholarship delves into the realm of direct preference probabilities to mirror human predilections more accurately. Some scholars conceptualize RLHF as a quest for Nash equilibriums within constant-sum games, advocating for mirror descent and Self-Play Preference Optimization (SPO) techniques. Direct Nash Optimization (DNO) also made waves by leveraging win rate differentials, although its real-world application hinges on iterative DPO frameworks.

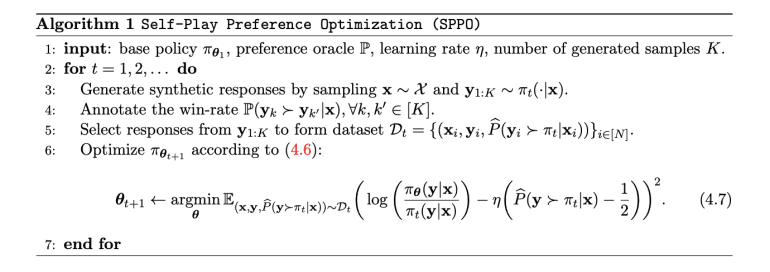

Enterprising minds from the University of California, Los Angeles, and Carnegie Mellon University have introduced an ingenious self-play paradigm, dubbed Self-Play Preference Optimization (SPPO), designed to tackle RLHF obstacles head-on. Offering concrete assurances in navigating two-player constant-sum games and scalability tailored for expansive language models, SPPO redefines the landscape. By framing RLHF within this gaming context, the aim is to pinpoint the Nash equilibrium policy, ensuring a consistent alignment with preferred responses. Their proposed adaptive methodology, rooted in multiplicative weights, leverages a self-play mechanism where the policy fine-tunes itself using synthetic data annotated by the preference model.

This self-play framework operates with remarkable efficiency, scaling adeptly for large language models while targeting two-player constant-sum games. It operates within an iterative framework, integrating multiplicative weight updates and a self-play mechanism. Through this approach, the algorithm asymptotically homes in on the optimal policy, discerning the elusive Nash equilibrium. Rigorous theoretical scrutiny underpins this endeavor, offering tangible assurances of convergence. Compared to counterparts like DPO and IPO, SPPO showcases enhanced convergence rates and adeptly handles data sparsity challenges.

In evaluating these methodologies, researchers employ GPT-4 for automated assessment, presenting findings via AlpacaEval 2.0 and MT-Bench platforms. Iteration by iteration, SPPO models exhibit consistent enhancements, with SPPO Iter3 emerging as the pinnacle, boasting the highest win rate. Notably, SPPO outshines DPO and IPO, demonstrating superior performance metrics and precise control over output length. Furthermore, test-time reranking, utilizing the PairRM reward model, consistently elevates model performance without succumbing to over-optimization. In the competitive arena of AlpacaEval 2.0 and MT-Bench, SPPO stands tall, surpassing numerous state-of-the-art chatbots and maintaining parity with GPT-4.

Conclusion:

The introduction of SPPO marks a significant advancement in refining Large Language Models, promising enhanced alignment with human preferences and superior performance metrics. This innovation has the potential to reshape the landscape of language model development and applications across various industries, from customer service chatbots to content generation platforms. Organizations invested in AI-driven language technologies should closely monitor SPPO’s developments and consider its integration to stay competitive in the evolving market.