TL;DR:

- Chinese researchers propose SyntHesIzed Prompts (SHIP) to enhance fine-tuning methods.

- SHIP synthesizes features for categories without data, overcoming data limitations.

- A VAE framework combined with CLIP enables feature synthesis from limited data.

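- SHIP outperforms existing methods in base-to-new generalization, cross-dataset transfer, and generalized zero-shot learning across 11 image classification datasets.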

- It points toward more data-efficient AI solutions.

Main AI News:

Chinese AI researchers have introduced SyntHesIzed Prompts (SHIP), an approach intended to improve existing fine-tuning methods. Fine-tuning trains a pre-trained model further on a specialized dataset, adapting its general knowledge to a specific task. A problem arises when some classes have no data at all: the model cannot be tuned for them, and its performance on those classes suffers.

The researchers propose training a generative model that synthesizes features from class names. With it, SHIP can generate features for categories that have no real data, which addresses cases where data is scarce or hard to collect.

The researchers set out to fine-tune CLIP (Contrastive Language–Image Pretraining), OpenAI's model that embeds images and text in a shared space and is widely used for zero-shot image classification, using both the original labeled data and the synthesized features. One obstacle stood in the way: generative models typically need substantial data for training, which runs contrary to the team's goal of data efficiency.

To get around this, the researchers chose a variational autoencoder (VAE) as the framework, since it is better suited to low-data scenarios than models that rely on adversarial training. This choice let them train the generator under the same data constraints the method is meant to overcome.

The work also contrasts GANs (Generative Adversarial Networks) with VAEs and the trade-offs of each approach. GANs can generate highly realistic samples, while VAEs provide a more tractable probabilistic framework that is particularly useful when data is limited.
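To make the "probabilistic framework" concrete, here is a minimal sketch of the two terms a standard VAE objective combines: a reconstruction error and a KL penalty that keeps the latent code close to a standard normal. It illustrates the generic VAE loss, not SHIP's exact objective; the mean-squared-error reconstruction term, the `beta` weight, and all function names are assumptions made for illustration.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), one draw per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, sigma^2) || N(0, I)) for a diagonal Gaussian."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))

def reconstruction_error(x, x_hat):
    """Mean squared error between a feature and its reconstruction (assumed choice)."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

def vae_loss(x, x_hat, mu, log_var, beta=1.0):
    """Reconstruction term plus beta-weighted KL term."""
    return reconstruction_error(x, x_hat) + beta * kl_to_standard_normal(mu, log_var)
```

Because the KL term has a closed form and no discriminator is involved, training reduces to minimizing a single loss, which is one reason VAEs tend to be more stable than adversarial training when few samples are available.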

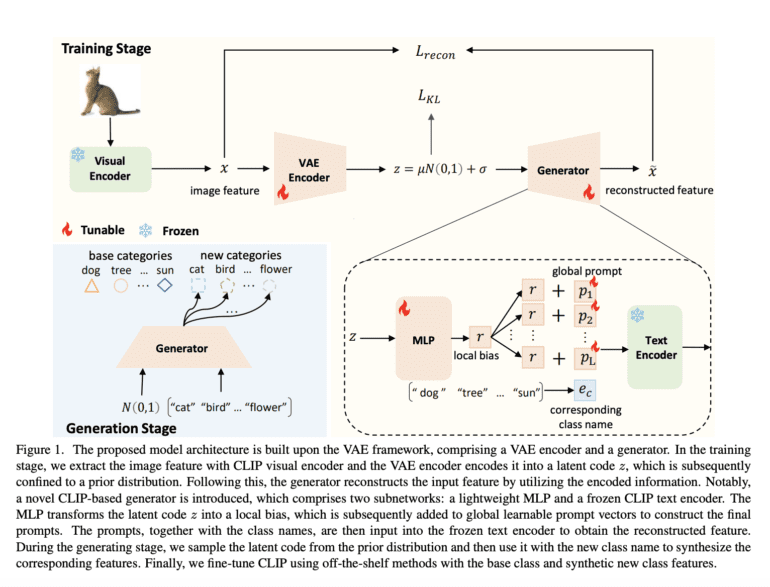

The architecture combines the VAE framework with CLIP to extract and reconstruct image features. During training, the model encodes features into a latent space and then reconstructs them, which is how it learns to synthesize features for new classes. The CLIP-based generator consists of a lightweight MLP and a frozen CLIP text encoder: the MLP transforms the latent code, the result is used to construct the final prompts, and the text encoder turns those prompts into reconstructed features.
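The generator path described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not the authors' implementation: the MLP output is assumed to be added to each learnable prompt vector, the class-name tokens are assumed to be appended at the end of the prompt, and `frozen_text_encoder` is a hypothetical placeholder (mean-pooling plus L2 normalization) standing in for the real frozen CLIP text encoder.

```python
import math
import random

def linear(x, weights, bias):
    """Dense layer; weights holds one row per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

def init_params(latent_dim, hidden_dim, embed_dim, rng):
    """Random MLP weights (hypothetical helper, for illustration only)."""
    def rows(n, m):
        return [[rng.gauss(0.0, 0.1) for _ in range(m)] for _ in range(n)]
    return {"w1": rows(hidden_dim, latent_dim), "b1": [0.0] * hidden_dim,
            "w2": rows(embed_dim, hidden_dim), "b2": [0.0] * embed_dim}

def mlp(z, params):
    """Two-layer MLP with ReLU mapping a latent code to a prompt-sized vector."""
    hidden = [max(0.0, h) for h in linear(z, params["w1"], params["b1"])]
    return linear(hidden, params["w2"], params["b2"])

def build_prompt(shift, prompt_vectors, class_tokens):
    """Shift each learnable prompt vector by the MLP output, then append class tokens."""
    shifted = [[p + s for p, s in zip(vec, shift)] for vec in prompt_vectors]
    return shifted + class_tokens

def frozen_text_encoder(tokens):
    """Placeholder for the frozen CLIP text encoder: mean-pool, then L2-normalize."""
    dim = len(tokens[0])
    pooled = [sum(t[i] for t in tokens) / len(tokens) for i in range(dim)]
    norm = math.sqrt(sum(v * v for v in pooled)) or 1.0
    return [v / norm for v in pooled]

def synthesize_feature(class_tokens, params, prompt_vectors, latent_dim, rng):
    """Sample z from the prior and decode it into a feature for the given class."""
    z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
    prompt = build_prompt(mlp(z, params), prompt_vectors, class_tokens)
    return frozen_text_encoder(prompt)
```

Only the class-name tokens change between classes, so after training the same generator can be called with the name of a class that has no images, and different samples of `z` yield different synthetic features for that class.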

The experiments support SHIP's effectiveness. The evaluations covered base-to-new generalization, cross-dataset transfer learning, and generalized zero-shot learning on 11 image classification datasets: ImageNet, Caltech101, OxfordPets, StanfordCars, Flowers102, Food101, FGVCAircraft, SUN397, DTD, EuroSAT, and UCF101.

To mirror real-world conditions, the researchers also evaluated SHIP in a generalized zero-shot setting, where base and new classes are mixed in the test set. Traditional methods showed a notable drop in performance in this setting, while SHIP continued to deliver better results on new classes.
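The generalized zero-shot setting can be illustrated with a small scoring helper: predictions are made over the union of base and new classes, and accuracy is then reported separately for each group. The harmonic mean is included because it is a common summary in the zero-shot literature; the article does not state which summary metric the authors report, so treat that part, along with the function names and the toy labels, as assumptions.

```python
def accuracy(pairs):
    """Fraction of (prediction, label) pairs that match; 0.0 for an empty list."""
    if not pairs:
        return 0.0
    return sum(1 for pred, label in pairs if pred == label) / len(pairs)

def generalized_zero_shot_report(predictions, labels, base_classes):
    """Score predictions made over the union of base and new classes."""
    pairs = list(zip(predictions, labels))
    base = [(p, y) for p, y in pairs if y in base_classes]
    new = [(p, y) for p, y in pairs if y not in base_classes]
    acc_base, acc_new = accuracy(base), accuracy(new)
    total = acc_base + acc_new
    harmonic = 2 * acc_base * acc_new / total if total > 0 else 0.0
    return {"base": acc_base, "new": acc_new, "harmonic_mean": harmonic}

# Toy example: a model biased toward base classes mislabels one "zebra" as "cat".
labels = ["cat", "cat", "dog", "zebra", "zebra"]
predictions = ["cat", "cat", "dog", "cat", "zebra"]
report = generalized_zero_shot_report(predictions, labels, base_classes={"cat", "dog"})
```

In the toy example the model is perfect on base classes but right on only half of the new-class samples, the kind of gap that appears when a model fine-tuned only on base classes must also choose among unseen ones.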

Compared with baselines such as CLIP, CoOp, CLIP-Adapter, and Tip-Adapter, SHIP consistently showed an edge across the datasets.

Conclusion:

SHIP represents a notable advance in AI fine-tuning. By using a generative model to synthesize features for classes with little or no data, it opens the way to more data-efficient AI solutions across industries. Businesses that want to optimize their models for real-world problems where labeled data is hard to obtain could benefit from this approach. If the method continues to mature, it could encourage wider development and adoption of data-efficient AI technologies, and organizations that adopt it early may gain a competitive edge.