- UC Berkeley researchers introduce a pioneering reinforcement learning approach for humanoid robot locomotion.

- The methodology focuses on organic walking behaviors adaptable to diverse real-world environments.

- Trains entirely in simulation and deploys zero-shot to the real world.

- Real-world tests show consistent performance across varied terrains, with no safety precautions required.

- The emergence of adaptive gait alterations highlights the flexibility and efficacy of the proposed approach.

Main AI News:

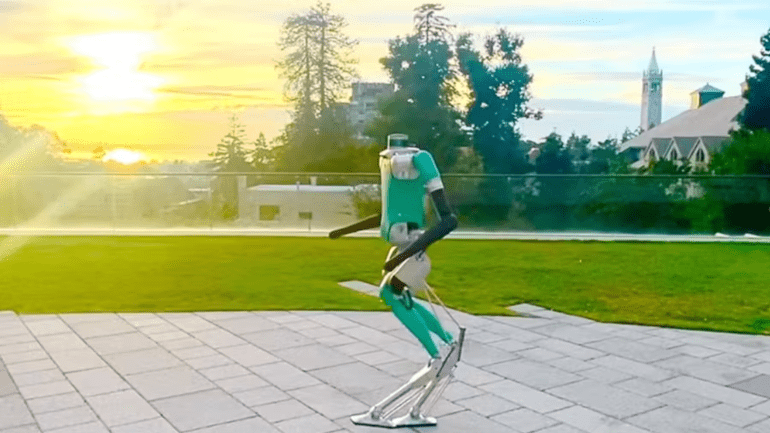

UC Berkeley researchers have developed a groundbreaking reinforcement learning method that enables bipedal humanoid robots to walk in real-world settings. Their approach produces natural walking patterns that adapt dynamically to diverse environments.

The team highlights the potential of autonomous humanoid robots across many domains, from easing labor shortages in industry to assisting the elderly at home, and even to colonizing other planets. Although classical controllers perform well in some settings, they generalize poorly and struggle to adapt to new environments, which calls for a different paradigm. The researchers' answer is a fully learning-based controller for real-world humanoid locomotion.

At the core of the method is a controller trained with reinforcement learning: a causal transformer that takes the history of the robot's proprioceptive observations and actions as input and predicts the next action. The controller is trained entirely in simulation and then deployed zero-shot, with no real-world fine-tuning, on an Agility Robotics Digit humanoid robot.
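To make the architecture described above more concrete, here is a minimal Python sketch of a causal-attention policy's forward pass. It assumes a single attention layer with one head, random (untrained) weights, and one token per time step formed by concatenating that step's observation with the action taken. The class name `TinyCausalPolicy`, the helper functions, and all dimensions are hypothetical and do not come from the researchers' code. The sketch shows only how a causal mask restricts each position to past history and how the most recent position yields the next action. The reinforcement learning that would actually train these weights is omitted.

```python
import math
import random


def matvec(W, x):
    """Multiply a matrix W (a list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rand_matrix(rng, rows, cols, scale=0.1):
    """Random Gaussian weights; stand-ins for parameters RL would learn."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class TinyCausalPolicy:
    """Hypothetical one-layer, one-head causal transformer policy (untrained)."""

    def __init__(self, obs_dim, act_dim, d_model=16, seed=0):
        rng = random.Random(seed)
        self.d_model = d_model
        self.embed = rand_matrix(rng, d_model, obs_dim + act_dim)
        self.wq = rand_matrix(rng, d_model, d_model)
        self.wk = rand_matrix(rng, d_model, d_model)
        self.wv = rand_matrix(rng, d_model, d_model)
        self.head = rand_matrix(rng, act_dim, d_model)

    def attend(self, tokens):
        """Causal self-attention: position t attends only to positions 0..t."""
        q = [matvec(self.wq, x) for x in tokens]
        k = [matvec(self.wk, x) for x in tokens]
        v = [matvec(self.wv, x) for x in tokens]
        out = []
        for t in range(len(tokens)):
            # Causal mask: only keys at s <= t are scored, so no future step leaks in.
            scores = [sum(a * b for a, b in zip(q[t], k[s])) / math.sqrt(self.d_model)
                      for s in range(t + 1)]
            weights = softmax(scores)
            out.append([sum(w * v[s][i] for s, w in enumerate(weights))
                        for i in range(self.d_model)])
        return out

    def predict_next_action(self, observations, actions):
        """Embed each (observation, action) step and predict the next action."""
        tokens = [matvec(self.embed, o + a) for o, a in zip(observations, actions)]
        hidden = self.attend(tokens)
        last = [h + x for h, x in zip(hidden[-1], tokens[-1])]  # residual connection
        return [math.tanh(y) for y in matvec(self.head, last)]  # squash to (-1, 1)


if __name__ == "__main__":
    policy = TinyCausalPolicy(obs_dim=4, act_dim=2, seed=0)
    # A made-up five-step history of observations and actions.
    obs_history = [[0.1 * t, 0.2, -0.1, 0.05 * t] for t in range(5)]
    act_history = [[0.0, 0.1 * t] for t in range(5)]
    print(policy.predict_next_action(obs_history, act_history))
```

The causal mask matters because, on the robot, the policy only ever has access to past observations and actions; masking keeps each prediction conditioned on the same kind of information during training. A production controller would typically stack several such layers with feed-forward blocks and normalization.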

The researchers tested the controller extensively in the real world on terrains of different materials and conditions, including concrete, rubber, and grass, ranging from dry surfaces in the afternoon to dewy ground in the morning. Notably, the terrain properties encountered during testing differed significantly from those in the training data. Even so, the controller performed consistently and walked reliably over every tested terrain, and no safety precautions were needed, even during prolonged outdoor trials.
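The article does not say how the simulated training was set up so that the controller could cope with terrain unlike what it saw during training. One widely used technique for sim-to-real transfer is domain randomization: each training episode samples different physical parameters so the policy cannot overfit to a single simulated world. The Python sketch below illustrates only that sampling step. The `TerrainParams` fields, the `RANGES` values, and the function names are hypothetical and are not settings reported by the UC Berkeley team.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TerrainParams:
    """Hypothetical physics settings for one simulated training episode."""
    friction: float     # ground friction coefficient
    slope_deg: float    # incline in degrees; negative values are downhill
    roughness_m: float  # maximum height of random surface bumps, in meters


# Illustrative ranges only; these are NOT values reported by the researchers.
RANGES = {
    "friction": (0.3, 1.2),
    "slope_deg": (-10.0, 10.0),
    "roughness_m": (0.0, 0.05),
}


def sample_terrain(rng):
    """Draw one terrain configuration, each field uniform within RANGES."""
    return TerrainParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


def sample_ensemble(n, seed=0):
    """Draw n randomized terrains reproducibly from a seeded generator."""
    rng = random.Random(seed)
    return [sample_terrain(rng) for _ in range(n)]


if __name__ == "__main__":
    for params in sample_ensemble(3, seed=42):
        print(params)
```

In a training loop, each new episode would draw a fresh configuration before resetting a (hypothetical) simulator, so the policy experiences a spread of surfaces rather than one fixed ground model.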

One intriguing outcome of the experiments was the emergence of adaptive gait changes: the controller adjusted the robot's walking on its own in response to the terrain it encountered. For example, when moving from level ground onto a downward slope, the robot switched from its normal gait to shorter steps that did not lift its legs as high as usual, then returned to its normal gait once back on flat ground. The researchers characterize these nuanced adaptations as emergent behavior, and they underscore the effectiveness and adaptability of the learning-based approach for real-world locomotion.

Conclusion:

The development of a learning-based approach to humanoid locomotion, trained entirely in simulation, marks a significant advancement in robotics research. Beyond promising greater adaptability and performance in real-world settings, it opens avenues for applications across industries, from manufacturing to healthcare and beyond. As the technology matures, it could transform automation, productivity, and assistance services, offering tangible benefits to businesses seeking to optimize operations and improve human-robot collaboration.