TL;DR:

- GenAI tools are on the ascent, with organizations set to increase AI investments by 10-15%.

- Early adopters report significant gains in innovation, sustainability, and retention due to AI.

- Massive general-purpose LLMs pose challenges like compute resource strain and high costs.

- Smaller, domain-specific models are gaining traction and offer easier customization.

- Large general-purpose LLMs become more error-prone as they ingest more data, which reduces accuracy.

- The concentration of AI power in a few tech giants raises concerns about centralization.

- Organizations are turning to fine-tuned models for precise, business-oriented AI applications.

Main AI News:

In the relentless pursuit of AI excellence, organizations have often equated “larger” with “better” when it comes to their language models. However, the tide is turning as discerning enterprises recognize that bigger isn’t always superior.

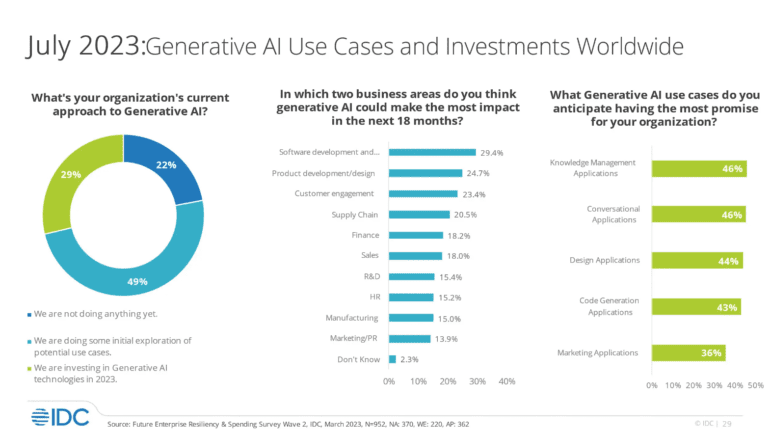

The ascent of generative artificial intelligence (genAI) tools has been nothing short of remarkable. A recent IDC survey of over 2,000 IT and business leaders reveals that organizations plan to allocate 10% to 15% more resources to AI endeavors in the next 18 months, compared to 2022. The impact of genAI is already palpable across various sectors, with early adopters reporting a 35% boost in innovation and a 33% surge in sustainability over the past three years, according to IDC.

Moreover, genAI has been a catalyst for a 32% improvement in customer and employee retention. As Ritu Jyoti, Group Vice President for AI & Automation Research at IDC, affirms, “AI will be just as crucial as the cloud in providing customers with a genuine competitive advantage over the next five to 10 years.” In essence, organizations that invest early stand to gain a significant competitive edge.

Yet, while massive general-purpose Large Language Models (LLMs) boasting hundreds of billions or even trillions of parameters may seem like formidable tools, they come with their own set of challenges. These LLMs consume enormous computational resources, strain server capacity, and stretch model training times to impractical lengths. Avivah Litan, a Distinguished Analyst at Gartner Research, succinctly points out, “So, continuing to make models bigger and bigger is not a viable option.”
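To put those parameter counts in perspective, here is a rough back-of-the-envelope sketch of how much memory the model weights alone would occupy. It assumes each parameter is stored as a 16-bit number (2 bytes), which is a common choice but not something the analysts quoted here specify. The function name and the three model sizes are illustrative, not figures for any particular product.

```python
def weight_memory_gb(num_parameters: float, bytes_per_parameter: int = 2) -> float:
    """Approximate memory needed to hold the weights alone, in GB (10**9 bytes).

    Assumption: 2 bytes per parameter (16-bit precision). Training needs
    considerably more memory than this, so treat the result as a lower bound.
    """
    return num_parameters * bytes_per_parameter / 1e9


# Illustrative model sizes only; not tied to any specific LLM.
example_sizes = [
    ("7 billion", 7e9),
    ("175 billion", 175e9),
    ("1 trillion", 1e12),
]

for label, count in example_sizes:
    print(f"{label} parameters: about {weight_memory_gb(count):,.0f} GB of weights")
```

Even under this simplified assumption, a trillion-parameter model needs on the order of 2,000 GB just to hold its weights, which is far more than a single GPU offers and helps explain the pressure on server capacity.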

Dan Diasio, Ernst & Young’s Global Artificial Intelligence Consulting Leader, corroborates this by highlighting the current backlog of GPU chip orders, which exacerbates the problem for both tech companies and user organizations. The costs of building and fine-tuning specialized corporate LLMs are soaring, which is driving the trend toward knowledge enhancement packs and prompt libraries that contain specialized expertise.

Furthermore, it’s increasingly clear that smaller domain-specific models, enriched with extensive training data, will challenge the supremacy of today’s prominent LLMs like OpenAI’s GPT-4, Meta AI’s LLaMA 2, or Google’s PaLM 2. These compact models also offer the advantage of easier training for specific use cases.

Regardless of size, all LLMs rely on prompt engineering: crafting queries, often paired with examples of correct responses, to steer the model toward the desired output. However, ingesting more data can also raise the likelihood of erroneous outputs. GenAI tools essentially predict the next word in a sequence, so flawed input tends to produce flawed results. As a result, vertical industries and specialized use cases often find large general-purpose LLMs inaccurate and non-specific, despite their astronomical parameter counts.
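The next-word idea can be illustrated with a deliberately tiny sketch. Real LLMs use large neural networks, not word-pair counts, so the bigram counter below is only a toy stand-in. The function names and both sample corpora are made up for illustration. It shows how a predictor trained on flawed text reproduces the flaw.

```python
from collections import Counter, defaultdict


def train_bigram_model(text: str) -> dict:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next(model: dict, word: str):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text: one clean sample, one with a repeated typo.
clean_corpus = "the invoice is due the invoice is due the invoice is paid"
flawed_corpus = "the invoice is dew the invoice is dew the invoice is due"

clean_model = train_bigram_model(clean_corpus)
flawed_model = train_bigram_model(flawed_corpus)

print(predict_next(clean_model, "is"))
print(predict_next(flawed_model, "is"))
```

The model trained on the clean text predicts "due" after "is", while the one trained on the flawed text predicts the typo "dew", because that is what it saw most often.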

The concentration of technological power in the hands of a few tech giants raises concerns about centralization, as articulated by Avivah Litan. This centralization lacks meaningful checks and balances, and the chip industry struggles to keep pace with the rapid expansion of model sizes.

To address these challenges, organizations are turning to domain-specific LLMs and fine-tuned models that allow them to leverage proprietary or industry-specific information for more precise, business-oriented applications. These solutions are essential in overcoming the hurdles faced by organizations seeking to harness the potential of AI.

Conclusion:

The market is shifting toward smaller, more specialized AI models that improve efficiency and precision. While large general-purpose LLMs have their advantages, they also bring substantial challenges, including resource constraints and high costs. This trend signals that organizations are keen to optimize AI for specific use cases so that its potential is harnessed effectively.