- Snap announces plan to add watermarks to AI-generated images on its platform.

- The watermark, a translucent Snap logo with a sparkle emoji, is applied automatically to AI images that are exported or saved.

- Removing watermarks violates Snap’s terms of use; the detection method is undisclosed.

- The move follows similar initiatives by tech giants like Microsoft, Meta, and Google to label AI-generated images.

- Snap’s paying subscribers can create or edit AI images; Dreams feature enhances selfies with AI.

- The company emphasizes safety and transparency in AI use; visual markers and context cards have been introduced.

- Collaboration with HackerOne for bug bounty program to stress-test AI image-generation tools.

- The aim is to ensure equitable access and minimize biased AI results.

- Measures follow controversy around Snapchat’s “My AI” chatbot discussing sensitive topics.

- Controls introduced in Family Center for monitoring children’s interactions with AI.

Main AI News:

Snap has announced that it will add watermarks to AI-generated images across its platform. The watermark, a translucent version of the Snap logo accompanied by a sparkle emoji, will be applied automatically to any AI-generated image exported from the app or saved to the camera roll. Snap says that removing these watermarks violates its terms of use, although it has not disclosed how it will detect such removals.

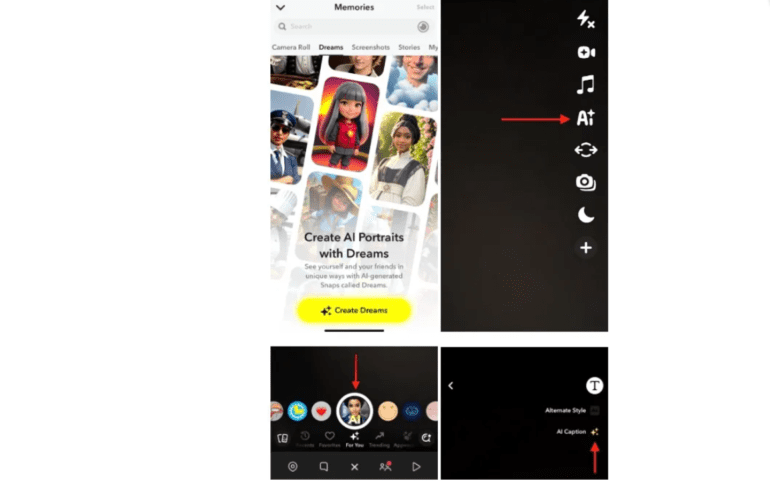

The move mirrors similar initiatives by other tech giants such as Microsoft, Meta, and Google, which have all implemented measures to label or identify images created with AI-powered tools. Snap currently allows paying subscribers to use Snap AI to create or edit AI-generated images, and its selfie-focused feature, Dreams, lets users enhance their photos with AI.

In a recent blog post outlining its commitment to safety and transparency in AI use, Snap explained that it visually marks AI-powered features, such as Lenses, with a sparkle emoji. The company has also introduced context cards for AI-generated images produced with tools like Dreams, giving users additional information about them.

Snap has also partnered with HackerOne on a bug bounty program to stress-test its AI image-generation tools. According to Snap, the initiative aims to ensure equitable access and expectations for Snapchatters across diverse demographics, particularly when they use AI-powered features in the app. In its statement on the bug bounty program, the company emphasized its commitment to minimizing potentially biased AI results.

These developments follow Snapchat’s earlier efforts to address concerns about AI use, notably after the launch of its “My AI” chatbot in March 2023. The chatbot drew controversy when users succeeded in getting it to discuss topics such as sex and alcohol, which led Snap to introduce controls within the Family Center that let parents and guardians monitor and restrict their children’s interactions with AI.

Conclusion:

Snap’s implementation of watermarks for AI-generated images reflects a commitment to enhancing transparency and safety within its platform. This move aligns with broader industry trends towards responsible AI use and underscores the company’s proactive approach to addressing user concerns. As tech companies increasingly prioritize ethical AI practices, Snap’s initiatives signal a maturing market where accountability and user protection are paramount.