- Snowflake Arctic, an open-source LLM, challenges industry leaders Llama and Mixtral.

- Arctic combines affordability and efficacy, and Snowflake positions it as the top choice for Enterprise AI.

- With a training budget under $2 million, Arctic outperforms similar models at a fraction of the cost.

- Innovative Dense-MoE Hybrid transformer architecture powers Arctic’s exceptional performance.

- Strategic partnerships ensure accessibility, with plans for integration across major cloud platforms.

- Arctic’s emergence reflects significant advancements in the LLM landscape, promising a transformative era in Enterprise AI.

Main AI News:

Snowflake Arctic, a new open-source LLM, emerges as a contender against industry stalwarts Llama and Mixtral. Options among open-source LLMs have traditionally been scarce, and Snowflake's introduction of Arctic promises to shift that landscape.

Snowflake pitches Arctic as an LLM built for Enterprise AI, distinguished by its combination of affordability and efficacy. The company positions it as the premier choice for Enterprise AI solutions.

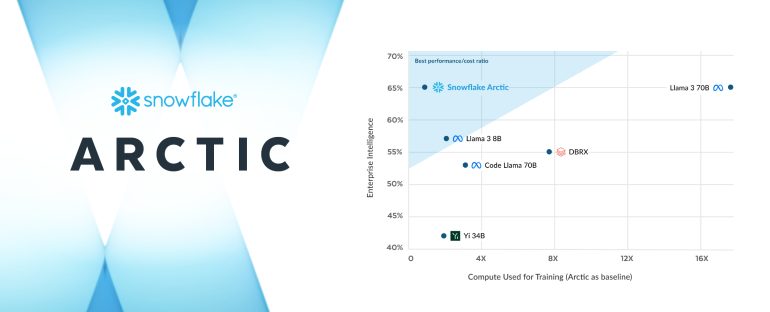

In a recent blog post, Snowflake claims that Arctic leads open-source LLMs in enterprise intelligence while using a training compute budget of under $2 million, equivalent to fewer than 3K GPU weeks. According to Snowflake, this makes Arctic more capable than other open-source models trained on a comparable budget. The company adds that this training efficiency lets its customers and the broader AI community develop custom models affordably.
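To put the budget figures in perspective, the short calculation below derives the cost per GPU-hour implied by the two quoted bounds. It is only a back-of-envelope sketch: it treats "under $2 million" and "less than 3K GPU weeks" as exact values, and the function name `implied_cost_per_gpu_hour` is ours for illustration, not something from Snowflake.

```python
HOURS_PER_WEEK = 7 * 24  # one GPU week = one GPU running for 168 hours


def implied_cost_per_gpu_hour(budget_usd: float, gpu_weeks: float) -> float:
    """Return the dollar cost per GPU-hour implied by a budget and a GPU-week count."""
    if gpu_weeks <= 0:
        raise ValueError("gpu_weeks must be positive")
    gpu_hours = gpu_weeks * HOURS_PER_WEEK
    return budget_usd / gpu_hours


# Assumption: use the article's upper bounds as if they were exact figures.
rate = implied_cost_per_gpu_hour(budget_usd=2_000_000, gpu_weeks=3_000)
print(f"Implied rate: ${rate:.2f} per GPU-hour")
```

Under these assumptions the two bounds correspond to roughly $4 per GPU-hour. Because both numbers are upper limits, the actual rate could differ.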

Snowflake’s data further supports this claim, showing stronger performance than Llama 3 and Mixtral 8x7B at lower compute and cost. Underpinning that performance is Arctic’s Dense-MoE Hybrid transformer architecture, with 480B total parameters, of which 17B are active for any given token.
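The gap between the two parameter counts is what makes a mixture-of-experts design cheap to run relative to its size. As a rough illustration, the sketch below computes the share of parameters that is active per token. It assumes the 480B figure is the model's total parameter count and the 17B figure is the per-token active count; the helper name `active_fraction` is hypothetical.

```python
def active_fraction(active_params: float, total_params: float) -> float:
    """Return the fraction of a model's parameters used for a single token."""
    if total_params <= 0:
        raise ValueError("total_params must be positive")
    return active_params / total_params


# Assumption: 480B is the total parameter count, 17B the active count per token.
fraction = active_fraction(active_params=17e9, total_params=480e9)
print(f"Active share per token: {fraction:.1%}")
```

With these figures, only about 3.5% of the parameters take part in processing each token, while the rest sit idle in experts that were not selected.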

Equally important is Arctic’s inference efficiency, which Snowflake refined in collaboration with NVIDIA. Arctic achieves a throughput of more than 70 tokens per second at a batch size of 1.

Snowflake’s partnerships extend beyond NVIDIA: the model is being made accessible through Hugging Face and GitHub, and integrations are planned with cloud platforms and model catalogs including Amazon Web Services, Lamini, Microsoft Azure, and the NVIDIA API Catalog.
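For a sense of what this throughput means in practice, the sketch below estimates how long a single response would take to generate. It assumes a constant rate equal to the quoted 70 tokens per second and ignores prompt processing and network latency; the function name and the 500-token response length are illustrative choices, not figures from Snowflake.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float = 70.0) -> float:
    """Estimate the seconds needed to generate num_tokens at a constant rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Assumption: a 500-token response, generated at exactly 70 tokens per second.
seconds = generation_seconds(num_tokens=500)
print(f"Estimated generation time: {seconds:.1f} s")
```

Since the article quotes "70+" tokens per second, the result (a little over 7 seconds for 500 tokens) is best read as an upper estimate for the generation phase alone.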

Arctic illustrates how far the LLM landscape has come. With corporations increasingly investing in AI solutions, the outlook for further innovation is promising. Snowflake presents Arctic not just as a product but as a sign of a new phase in Enterprise AI.

Conclusion:

The introduction of Snowflake Arctic is a notable development in the Enterprise AI market, pairing a low training cost with competitive performance. With its Dense-MoE Hybrid architecture and strategic partnerships, Arctic raises the bar for open-source LLMs. Companies that adopt Arctic may gain a competitive edge in applying AI to their business operations.