- Sony AI and KAUST unveil FedP3, a solution for federated learning (FL) addressing model heterogeneity and privacy concerns.

- FedP3 personalizes models for individual devices, employing dual pruning techniques to optimize efficiency.

- Privacy is paramount in FedP3, minimizing data shared with servers and exploring differential privacy variants.

- Extensive experiments confirm FedP3’s ability to reduce communication costs while maintaining performance across datasets.

- FedP3 targets heterogeneous FL settings in which clients cannot all train the same full model.

Main AI News:

Researchers at Sony AI and KAUST have introduced FedP3, a framework for federated learning (FL). In FL, a global model is trained on decentralized data that stays on individual devices. Those devices differ in compute, memory, and bandwidth, and their data distributions differ as well. When clients must therefore run models of different sizes or structures, the setting is commonly referred to as model heterogeneity. FedP3 targets this setting, aiming to improve performance while preserving privacy.

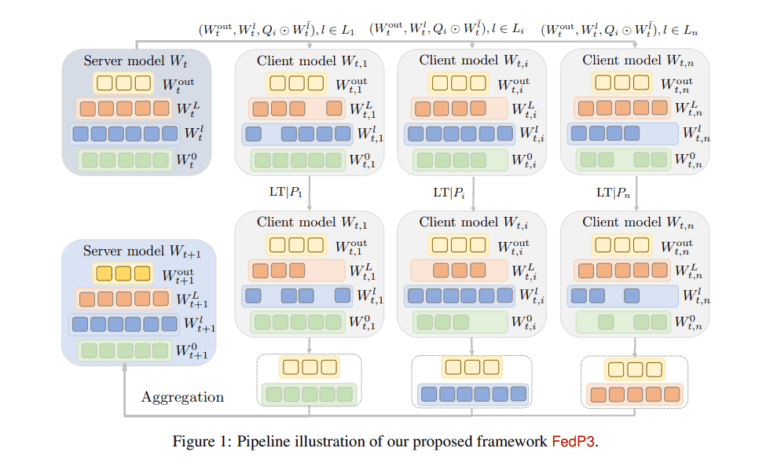

Conventional FL approaches often struggle to accommodate the differences among client devices, which can lead to suboptimal performance and compromised privacy. FedP3 instead personalizes the model for each client and applies pruning to reduce model complexity while aiming to preserve performance. The framework uses a dual pruning methodology that combines a global strategy with a local strategy, balancing model size reduction against client-specific adaptation.
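To make the dual pruning idea concrete, here is a minimal sketch rather than the authors' implementation. It assumes that global pruning means the server sends each client only a subset of layers, and that local pruning means the client zeroes its smallest-magnitude weights. The function names `global_prune` and `local_prune`, the dictionary model layout, and both ratio parameters are hypothetical.

```python
import random


def global_prune(model, keep_ratio, rng):
    """Server side (assumed): choose a subset of layers to send to one client.

    `model` maps layer names to flat lists of weights. At least one layer
    is always kept.
    """
    names = sorted(model)
    k = max(1, round(keep_ratio * len(names)))
    chosen = rng.sample(names, k)
    return {name: list(model[name]) for name in chosen}


def local_prune(weights, sparsity):
    """Client side (assumed): zero out the smallest-magnitude weights.

    `sparsity` is the fraction of weights to set to zero.
    """
    n_drop = int(sparsity * len(weights))
    if n_drop == 0:
        return list(weights)
    # Indices ordered by increasing absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:n_drop])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy model with made-up layer names and weights.
    model = {
        "conv1": [0.5, -0.1, 0.3, -0.9],
        "conv2": [0.2, 0.7, -0.4, 0.05],
        "fc1": [1.1, -0.6, 0.0, 0.8],
        "fc2": [-0.3, 0.3, 0.9, -0.2],
    }
    client_model = global_prune(model, keep_ratio=0.5, rng=rng)
    client_model = {n: local_prune(w, sparsity=0.5) for n, w in client_model.items()}
    print(client_model)
```

In this sketch the two steps are independent: the server decides which layers a client receives, and the client decides which of the received weights to keep.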

Privacy preservation is central to the FedP3 framework. FedP3 protects sensitive client data throughout the FL process by minimizing the data shared with the central server. The authors also explore differentially private variants such as DP-FedP3.
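The article does not describe how DP-FedP3 works internally. As a general illustration of differential privacy in FL, the sketch below shows the widely used clip-then-add-Gaussian-noise step applied to a client update before upload. It is not claimed to be the DP-FedP3 mechanism, and `clip_norm` and `noise_multiplier` are hypothetical parameters.

```python
import math
import random


def clip_update(update, clip_norm):
    """Scale the update so its L2 norm is at most `clip_norm`."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def privatize(update, clip_norm, noise_multiplier, rng):
    """Clip the update, then add Gaussian noise with std = noise_multiplier * clip_norm."""
    clipped = clip_update(update, clip_norm)
    sigma = noise_multiplier * clip_norm
    return [x + rng.gauss(0.0, sigma) for x in clipped]


if __name__ == "__main__":
    rng = random.Random(42)
    raw_update = [3.0, 4.0]  # toy update with L2 norm 5
    print(privatize(raw_update, clip_norm=1.0, noise_multiplier=0.5, rng=rng))
```

Clipping bounds how much any single client can influence the aggregate, and the noise scale is tied to that bound. The actual privacy guarantee depends on how the noise multiplier, the number of rounds, and client sampling are accounted for, which this sketch does not cover.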

The authors evaluate FedP3 on several datasets, including CIFAR10/100, EMNIST-L, and FashionMNIST. These experiments show that FedP3 significantly reduces communication costs while keeping performance comparable to traditional FL methods. Evaluations on more complex models such as ResNet18 support the same conclusion in heterogeneous FL environments.
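One way to see where communication savings can come from is to count the parameters a client actually transmits. The sketch below assumes a client sends only some layers instead of the full model. The layer names and parameter counts are made up for illustration and are not results from the FedP3 experiments.

```python
def upload_fraction(layer_sizes, sent_layers):
    """Return the fraction of all parameters contained in `sent_layers`."""
    total = sum(layer_sizes.values())
    sent = sum(layer_sizes[name] for name in sent_layers)
    return sent / total


if __name__ == "__main__":
    # Hypothetical parameter counts per layer.
    layer_sizes = {"conv1": 100, "conv2": 300, "fc": 600}
    fraction = upload_fraction(layer_sizes, ["conv1", "conv2"])
    print(f"client uploads {fraction:.0%} of the full model")
```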

Conclusion:

FedP3, from Sony AI and KAUST, brings efficiency and privacy together in federated learning. By addressing model heterogeneity and cutting communication costs, it could make FL more practical to adopt across industries. For organizations that want to use decentralized machine learning while safeguarding data privacy, FedP3 is a promising option for secure, efficient collaboration.