TL;DR:

- SPHINX-X represents the next evolution in Multimodal Large Language Models (MLLMs), enhancing language comprehension with vision integration.

- Developed by a collaboration of leading research institutions, SPHINX-X streamlines architecture, optimizes training efficiency, and offers multilingual capabilities.

- It addresses the limitations of existing MLLMs by improving adaptability across tasks and optimizing for efficient, large-scale multimodal training.

- Recent advancements in LLMs highlight innovations in Transformer architectures and the integration of non-textual encoders for comprehensive visual understanding.

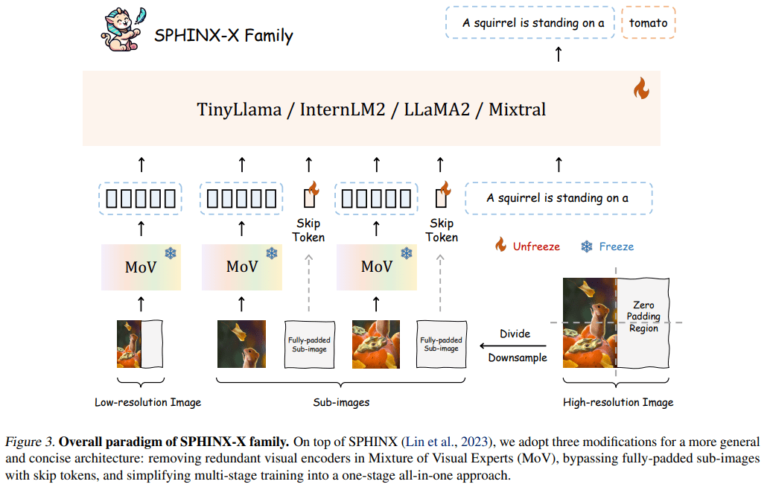

- SPHINX-X improves on SPHINX by compacting visual encoders, introducing learnable skip tokens for fully padded sub-images, and adopting simplified one-stage training.

- SPHINX-X MLLMs demonstrate state-of-the-art performance across various multimodal tasks, showing strong adaptability and resilience against visual illusions.

Main AI News:

The evolution of Multimodal Large Language Models (MLLMs), including GPT-4 and Gemini, has sparked strong interest in combining language comprehension with other modalities such as vision. This convergence opens up new possibilities, from embodied intelligence in physical systems to intuitive GUI agents. While open-source MLLMs like BLIP and LLaMA-Adapter have made strides, there’s room for improvement through more training data and more model parameters. Some models excel at natural image processing but falter on tasks that demand specialized knowledge. Moreover, current model sizes may not be suitable for mobile deployment, which motivates exploring smaller, more parameter-efficient architectures for wider adoption and better performance.

Enter SPHINX-X, an MLLM series built on the SPHINX framework and developed by researchers from Shanghai AI Laboratory; MMLab, CUHK; Rutgers University; and the University of California, Los Angeles. The series introduces several enhancements: a streamlined architecture that eliminates redundant visual encoders, improved training efficiency via skip tokens for fully padded sub-images, and a transition to a single-stage training paradigm. Trained on a rich multimodal dataset augmented with curated OCR and Set-of-Mark data, SPHINX-X is built on various base LLMs, offering a range of parameter sizes and multilingual capabilities. Benchmark assessments indicate that SPHINX-X adapts well across tasks, overcoming prior limitations of MLLMs while optimizing for efficient, large-scale multimodal training.

In the broader landscape of Large Language Models (LLMs), recent advances have centered on Transformer architectures, exemplified by GPT-3 with its 175B parameters. Models such as PaLM, OPT, BLOOM, and LLaMA followed, and later work introduced features such as Mistral’s window attention and Mixtral’s sparse MoE layers. Concurrently, bilingual LLMs like Qwen and Baichuan have emerged, while TinyLlama and Phi-2 prioritize parameter reduction for edge deployment. MLLMs continue to integrate non-textual encoders for visual understanding, with notable models such as BLIP, Flamingo, and the LLaMA-Adapter series advancing vision-language fusion. Fine-grained MLLMs like Shikra and VisionLLM perform well on specific tasks, while others extend LLMs to further modalities.

Building on SPHINX, the researchers propose three key enhancements in SPHINX-X: compacting the visual encoders, using learnable skip tokens so that fully padded sub-images do not add useless visual tokens, and adopting a simplified one-stage training approach. This work is supported by a comprehensive multimodal dataset spanning language, vision, and vision-language tasks, enriched with curated OCR-intensive and Set-of-Mark datasets. The SPHINX-X family is trained on different base LLMs, ranging from TinyLlama-1.1B to Mixtral-8×7B, yielding a spectrum of MLLMs with diverse parameter sizes and multilingual capabilities.
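To make the skip-token idea more concrete, here is a minimal Python sketch of how fully padded sub-images could be collapsed into a single placeholder token. It is an illustration under stated assumptions, not the authors' implementation: the function name `plan_visual_tokens`, the `<skip>` marker, the 448-pixel sub-image size, the 2×2 grid, the top-left image placement, and the toy count of 4 tokens per sub-image are all hypothetical choices made for this example.

```python
from typing import List

# Hypothetical marker standing in for a learnable skip-token embedding.
SKIP = "<skip>"


def plan_visual_tokens(
    img_h: int,
    img_w: int,
    sub: int = 448,           # assumed sub-image side length in pixels
    grid: int = 2,            # assumed grid of grid x grid sub-images
    tokens_per_sub: int = 4,  # toy value; real encoders emit far more tokens
) -> List[List[str]]:
    """Return the token plan for each sub-image of a padded canvas.

    The image is assumed to sit in the top-left corner of a
    (grid * sub) x (grid * sub) canvas; everything else is padding.
    """
    plan: List[List[str]] = []
    for row in range(grid):
        for col in range(grid):
            top, left = row * sub, col * sub
            # A sub-image holds no real pixels if it starts at or beyond
            # the image's bottom or right edge.
            fully_padded = top >= img_h or left >= img_w
            if fully_padded:
                plan.append([SKIP])  # one token instead of a full sequence
            else:
                plan.append([f"v{row}{col}_{i}" for i in range(tokens_per_sub)])
    return plan


# A wide 448 x 896 image fills only the top row of the 2 x 2 grid.
plan = plan_visual_tokens(img_h=448, img_w=896)
total_tokens = sum(len(tokens) for tokens in plan)
print(total_tokens)  # 10, versus 16 if every sub-image were fully encoded
```

In this toy setting, the two bottom sub-images contain only padding, so each contributes one skip token rather than a full token sequence, which is the source of the training-efficiency gain the article describes.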

SPHINX-X MLLMs achieve state-of-the-art performance across a range of multimodal tasks, including mathematical reasoning, complex scene comprehension, low-level vision tasks, visual quality evaluation, and resilience against visual illusions. Benchmarking shows a strong correlation between the models’ multimodal performance and the scale of data and parameters used during training. The study evaluates SPHINX-X on curated benchmarks such as HallusionBench, AesBench, ScreenSpot, and MMVP, which test resistance to language hallucination and visual illusion, aesthetic perception, GUI element localization, and fine-grained visual comprehension.

Source: Marktechpost Media Inc.

Conclusion:

The introduction of SPHINX-X marks a notable step forward in multimodal AI, combining language understanding with integrated vision processing. Its changes address key limitations of existing models, including redundant architecture, inefficient training, and limited adaptability across tasks, paving the way for more efficient and effective multimodal applications. The approach holds promise for a range of uses, from natural language processing to visual comprehension systems.