- Spice.ai introduces a new approach to data access: it materializes data and co-locates it with applications, reducing latency and improving efficiency.

- The platform offers a unified SQL interface built on Apache DataFusion and Apache Arrow.

- Functioning as an application-specific Database CDN, Spice.ai optimizes data delivery for applications, machine-learning models, and AI backends.

- Key benefits include accelerated application performance, enhanced dashboard responsiveness, and optimized data pipelines for machine learning.

- Supports federated SQL queries across multiple databases, data warehouses, and data lakes, enhancing flexibility and integration capabilities.

Main AI News:

In cloud applications, the demand for speed and efficiency keeps rising. These applications often draw on diverse data sources such as S3-based knowledge bases, SQL databases, and vector stores. Fetching data from these remote sources, however, introduces network latency, rising costs, and complex concurrency management.

Current solutions often optimize network infrastructure or implement caching mechanisms to mitigate these issues. While beneficial, these approaches may not fully integrate with application logic or scale effectively.

Enter Spice.ai, which takes a different approach to data accessibility: it materializes data and co-locates it with the application. Serving queries from this local copy avoids many drawbacks of querying remote sources directly, with benefits for latency, cost, and concurrency management.

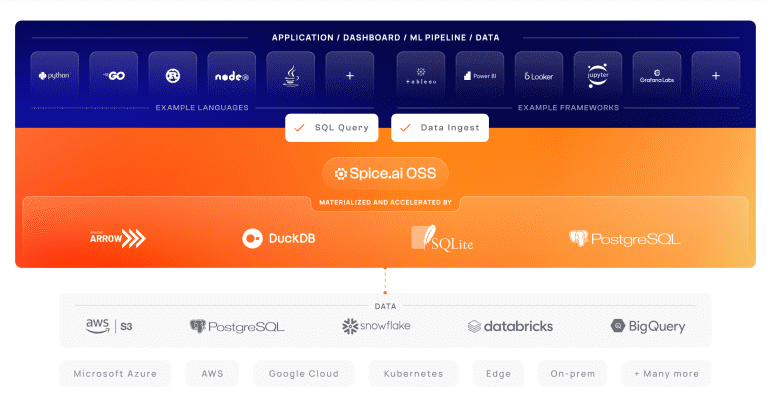

Spice.ai is an open-source, portable runtime that gives developers a unified SQL interface. It builds on Apache DataFusion (a query engine), Apache Arrow (an in-memory columnar data format), and SQLite to query data across different environments.

Functioning akin to an application-specific Database CDN, Spice.ai optimizes data delivery for applications, machine-learning models, and AI backends. By locally materializing essential datasets, Spice.ai facilitates low-latency access and high concurrency, catering to a wide range of use cases:

- Accelerated Applications and Frontends: Enhance application performance with accelerated dataset retrieval, ensuring faster page loads and real-time data updates.

- Responsive Dashboards and BI: Improve dashboard responsiveness without significant compute overhead, enhancing user experience in data visualization.

- Optimized Data Pipelines and Machine Learning: Streamline data movement and query performance by co-locating datasets within pipelines, optimizing machine learning workflows.

- Federated SQL Queries: Enable seamless SQL queries across multiple databases, data warehouses, and data lakes through integrated Data Connectors.
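Federated querying means a single SQL statement can read from several independent systems. The toy sketch below illustrates the idea in Python with SQLite's `ATTACH DATABASE`. It is an analogy, not Spice.ai's implementation or API (Spice.ai does this through its Data Connectors). The two database files, the `customers` and `orders` tables, and the helper functions are hypothetical stand-ins for separate systems such as an operational database and a warehouse.

```python
# Toy analogy for federated SQL, not Spice.ai's API: two independent SQLite
# files stand in for separate systems, and one connection joins across them.
import os
import sqlite3
import tempfile
from contextlib import closing


def create_source(path: str, schema: str, insert: str, rows: list) -> None:
    """Create a standalone database file with one table (a hypothetical source)."""
    with closing(sqlite3.connect(path)) as conn:
        conn.execute(schema)
        conn.executemany(insert, rows)
        conn.commit()


def revenue_by_region(crm_path: str, sales_path: str) -> list:
    """Answer one SQL query that joins tables living in two separate databases."""
    with closing(sqlite3.connect(":memory:")) as conn:
        conn.execute("ATTACH DATABASE ? AS crm", (crm_path,))
        conn.execute("ATTACH DATABASE ? AS sales", (sales_path,))
        return conn.execute(
            """
            SELECT c.region, SUM(o.amount) AS revenue
            FROM sales.orders AS o
            JOIN crm.customers AS c ON c.id = o.customer_id
            GROUP BY c.region
            ORDER BY c.region
            """
        ).fetchall()


def demo() -> list:
    """Build two hypothetical sources in a temp dir and run the federated query."""
    with tempfile.TemporaryDirectory() as tmp:
        crm = os.path.join(tmp, "crm.db")
        sales = os.path.join(tmp, "sales.db")
        create_source(
            crm,
            "CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT)",
            "INSERT INTO customers VALUES (?, ?)",
            [(1, "EU"), (2, "US"), (3, "EU")],
        )
        create_source(
            sales,
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)",
            "INSERT INTO orders VALUES (?, ?, ?)",
            [(10, 1, 20.0), (11, 2, 5.0), (12, 3, 7.5), (13, 2, 2.5)],
        )
        return revenue_by_region(crm, sales)


if __name__ == "__main__":
    print(demo())  # [('EU', 27.5), ('US', 7.5)]
```

`ATTACH` keeps each source in its own database file while letting one connection plan a join across them, which is the property federation relies on. A real federated engine typically also pushes filters down to each remote source so that less data crosses the network.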

Spice.ai provides data connectors for sources such as Databricks, PostgreSQL, and S3. For local materialization, it offers several options: in-memory Arrow records, embedded DuckDB, SQLite, and PostgreSQL.

Spice.ai is more than a cache: instead of storing results only after a query misses, it proactively prefetches and materializes filtered data, effectively functioning as a CDN for databases. By keeping data close to where it is frequently accessed, it speeds up retrieval and improves system responsiveness and reliability across diverse data environments.
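The difference between a lazy cache and proactive, filtered materialization can be sketched in a few lines of Python. This is a simplified illustration, not Spice.ai's code, and it assumes the following: one in-memory SQLite connection stands in for the remote source, a second one holds the local copy, and the `FilteredMaterialization` class, its `refresh()` method, and the `open_orders` dataset are all hypothetical. The filtered subset is copied before any query arrives, and `refresh()` re-pulls it so the local copy tracks the source.

```python
# Toy sketch of proactive, filtered materialization, not Spice.ai's code.
# One in-memory SQLite database stands in for the remote source; a second
# one holds the local copy that the application actually queries.
import sqlite3


class FilteredMaterialization:
    """Hypothetical helper: keeps a filtered local copy of remote data."""

    def __init__(self, source: sqlite3.Connection, name: str, fetch_sql: str):
        self.source = source        # stand-in for a remote database connection
        self.name = name            # local table name the application queries
        self.fetch_sql = fetch_sql  # trusted SQL that selects only the needed subset
        self.local = sqlite3.connect(":memory:")
        self.refresh()  # prefetch eagerly instead of waiting for a cache miss

    def refresh(self) -> int:
        """Re-pull the filtered subset and replace the local copy in one transaction."""
        cur = self.source.execute(self.fetch_sql)
        rows = cur.fetchall()
        cols = ", ".join(f'"{d[0]}"' for d in cur.description)
        marks = ", ".join("?" * len(cur.description))
        self.local.execute(f'CREATE TABLE IF NOT EXISTS "{self.name}" ({cols})')
        with self.local:  # DELETE and INSERT are committed together
            self.local.execute(f'DELETE FROM "{self.name}"')
            self.local.executemany(f'INSERT INTO "{self.name}" VALUES ({marks})', rows)
        return len(rows)

    def query(self, sql: str, params: tuple = ()) -> list:
        """Serve application queries from the local copy only."""
        return self.local.execute(sql, params).fetchall()


def demo() -> tuple:
    remote = sqlite3.connect(":memory:")
    remote.execute("CREATE TABLE orders (id INTEGER, status TEXT, amount REAL)")
    remote.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, "open", 10.0), (2, "closed", 99.0), (3, "open", 5.0)],
    )
    remote.commit()

    view = FilteredMaterialization(
        remote, "open_orders", "SELECT id, amount FROM orders WHERE status = 'open'"
    )
    stats = "SELECT COUNT(*), SUM(amount) FROM open_orders"
    before = view.query(stats)[0]

    remote.execute("INSERT INTO orders VALUES (4, 'open', 2.5)")
    remote.commit()
    stale = view.query(stats)[0]  # local copy unchanged until the next refresh

    view.refresh()
    after = view.query(stats)[0]
    return before, stale, after


if __name__ == "__main__":
    print(demo())  # ((2, 15.0), (2, 15.0), (3, 17.5))
```

Between refreshes the local copy can be stale, as the second result of `demo()` shows. How often to refresh is the trade-off between data freshness and load on the source.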

Conclusion:

Spice.ai's approach to data management is a notable step forward for cloud application efficiency. By reducing latency and improving data accessibility through local materialization, it improves system performance and streamlines data-intensive workloads. It reflects a broader shift toward data handling that is integrated more tightly with the application, promising more responsive applications and more productive development teams.