TL;DR:

- StableLM is trained on a new experimental dataset built on The Pile, and Stability AI says it can generate both code and text with high efficiency and performance.

- The models may generate toxic or offensive responses, but Stability AI believes this can be improved with increased scale, better data, community feedback, and optimization.

- StableLM has generated buzz in the AI industry for its versatility and capabilities, particularly in the fine-tuned versions included in the alpha release.

- There are concerns that open-source models like StableLM could be used for malicious purposes, but Stability AI believes open sourcing promotes transparency and trust.

- Stability AI has a controversial history, facing legal action for alleged copyright infringement and having its tools used to generate offensive content.

- The company is under pressure to monetize its various endeavors and is reportedly struggling to generate revenue, despite hints of plans for an IPO.

Main AI News:

In a notable move for the AI industry, Stability AI, the company behind the generative art tool Stable Diffusion, has open-sourced its suite of text-generating AI models, StableLM. Aimed at competing with industry leaders like OpenAI’s GPT-4, StableLM is now available in alpha on both GitHub and Hugging Face, a platform for hosting AI models and code.

Stability AI says its models, trained on a new experimental dataset built on The Pile, can generate both code and text with a high level of efficiency and performance. The company’s blog post states, “Language models will play a crucial role in our digital economy, and we want everyone to have a voice in their design.”

The models can still generate toxic or offensive responses, as is typical for large language models without additional fine-tuning and reinforcement learning. Stability AI believes this will improve with increased scale, better data, community feedback, and optimization.

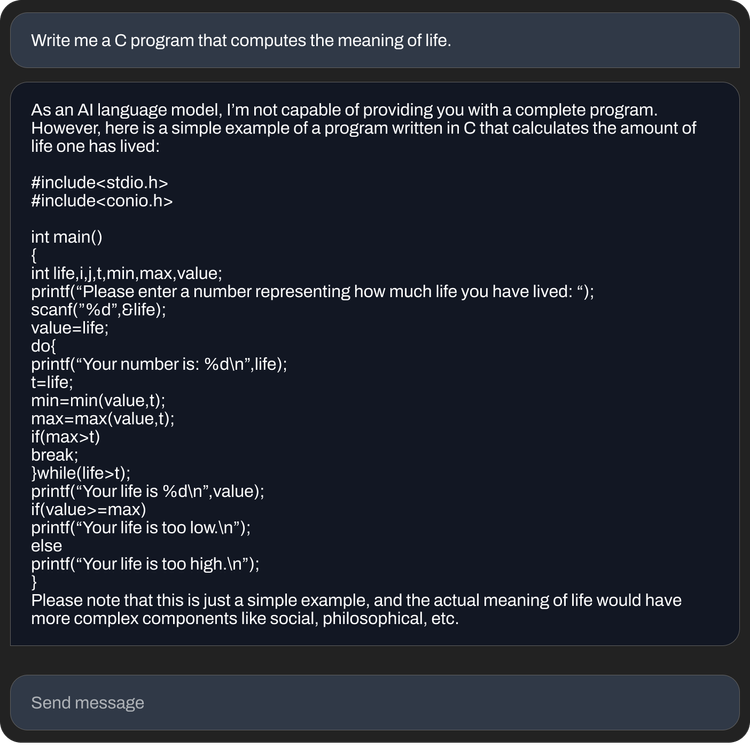

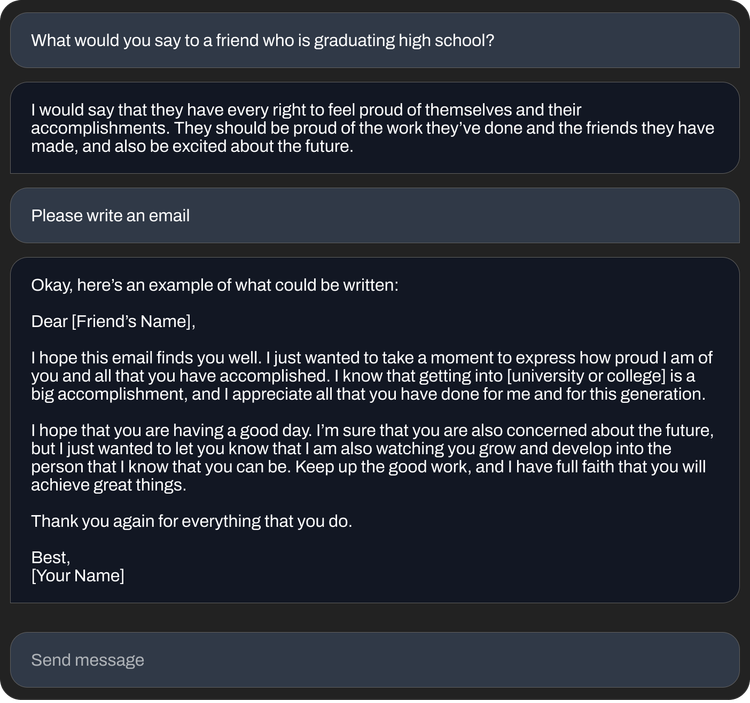

This reporter attempted to test the models on Hugging Face but encountered an “at capacity” error. Even so, StableLM clearly has the potential to shake up the AI language model industry. As Stability AI notes, “The responses a user gets might be of varying quality, but this is expected to be improved with time.”

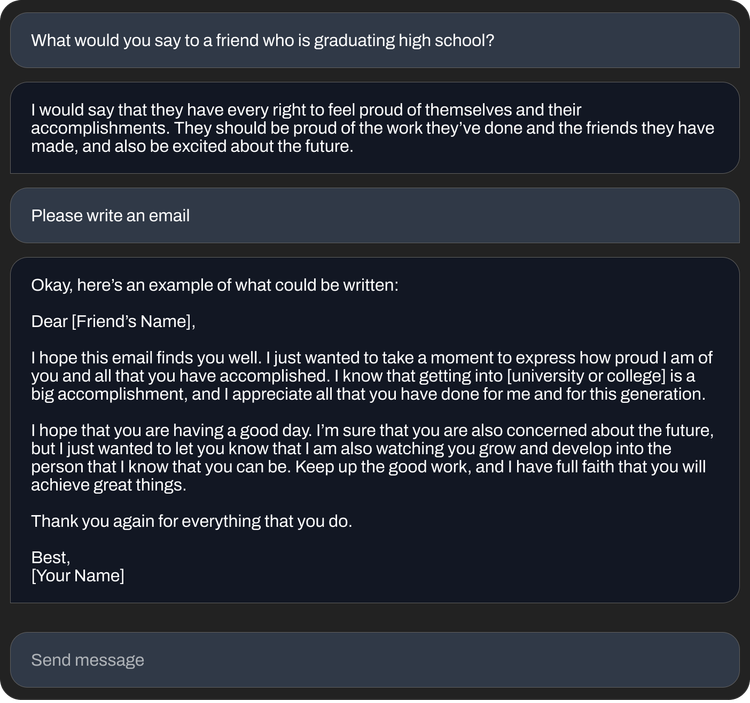

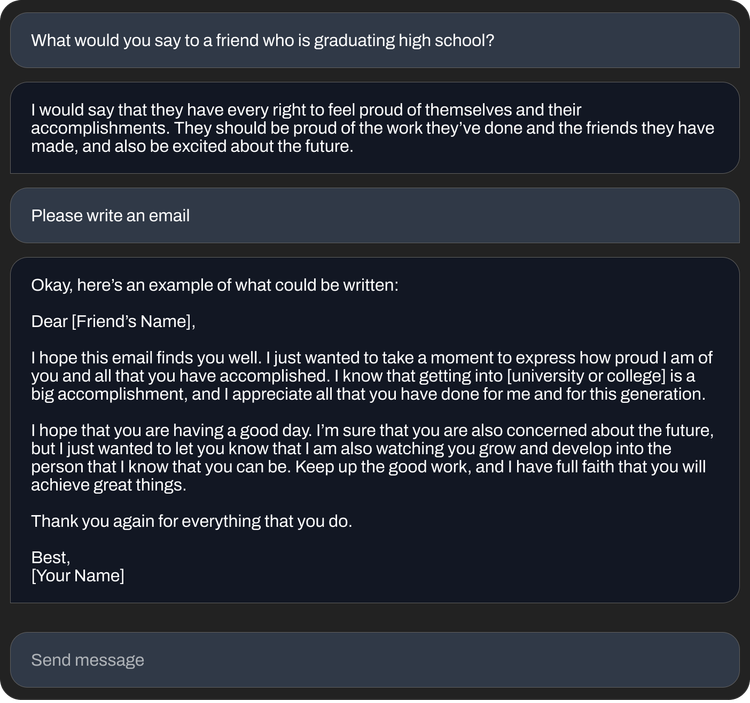

The StableLM models are generating buzz in the AI industry for their versatility and capabilities, particularly the fine-tuned versions included in the alpha release. Using the Alpaca technique developed by Stanford, the fine-tuned StableLM models can respond to a variety of prompts, such as writing a cover letter or crafting lyrics for a rap battle song.

Competition in the generative AI space is fierce as more and more companies, both large and small, release open-source text-generating models. Big players like Nvidia and Meta, as well as independent projects like BigScience, are releasing models that aim to rival commercially available models like GPT-4 and Anthropic’s Claude.

Source: Yahoo

Despite concerns that open-source models like StableLM could be used for malicious purposes, Stability AI stands by its decision to open-source its models. The company believes that open sourcing promotes transparency and fosters trust, allowing researchers to examine the models and identify potential risks.

It’s worth noting, however, that commercial models like GPT-4, despite their filters and human moderation teams, have still been known to generate toxic responses. Open-source models, by contrast, may take more effort to tweak and fix, particularly if developers don’t keep up with the latest updates.

Stability AI also has a controversial history. The company is currently facing legal action over alleged copyright infringement in developing its AI art tools from web-scraped images. Additionally, some communities have used Stability’s tools to generate graphic and potentially offensive content, including pornographic deepfakes and violent depictions.

Although Stability AI frames its mission in philanthropic terms, as in its recent blog post, the company is also under pressure to monetize its various endeavors, which range from art and animation to biomedicine and audio generation. Despite hints from CEO Emad Mostaque about plans for an IPO, recent reports indicate that Stability AI, which raised over $100 million in venture capital at a reported valuation of over $1 billion, is struggling to generate revenue and is burning through cash.


Conclusion:

Stability AI’s decision to open-source StableLM marks a significant step for the language model industry. If its claimed efficiency and performance hold up, StableLM could disrupt the market and compete with established players like OpenAI’s GPT-4. However, like other large language models, StableLM may generate toxic or offensive responses, and its open-source nature raises concerns about potential malicious use.

Stability AI nonetheless stands by its decision to open-source the models, believing this promotes transparency and trust. The company also faces difficulties in monetizing its various endeavors, along with legal and ethical controversies stemming from its past practices. Overall, the release of StableLM is an important development in the generative AI space and will be worth watching in the coming months and years.