TL;DR:

- Stability AI introduces StableCode, an open large language model (LLM) for code generation.

- StableCode offers three tiers: base model, instruction model, and long-context-window model.

- Training data comes from the BigCode project, with additional filtering and fine-tuning by Stability AI.

- StableCode supports Python, Go, Java, JavaScript, C, Markdown, and C++.

- StableCode aims to let anyone with a good idea write a program that solves their problem.

- Training process involves meticulous filtering and successive language-specific training.

- The long-context-window model accepts 16,000 tokens, allowing more complex code generation prompts.

- StableCode uses rotary position embedding (RoPE) rather than ALiBi to encode token positions.

- StableCode could reshape code generation by giving developers an open model to build on.

Main AI News:

Stability AI, best known for its Stable Diffusion text-to-image model, is expanding beyond image generation. Its latest release is StableCode, an open large language model (LLM) for code generation that the company hopes will reshape software development.

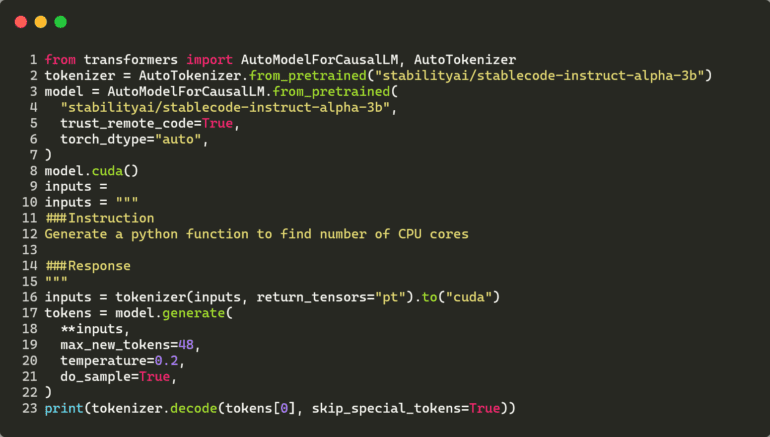

With the public release of StableCode, Stability AI enters the field of programming-language code generation. StableCode comes in three tiers: a base model for general programming tasks, an instruction model, and a long-context-window model that can handle up to 16,000 tokens.

StableCode starts from a dataset provided by the open-source BigCode project, which Stability AI filtered further and used for fine-tuning. At launch, the model supports Python, Go, Java, JavaScript, C, Markdown, and C++.

Christian Laforte, Head of Research at Stability AI, compared the company’s goals for StableCode with what it set out to do with Stable Diffusion: “What we would like to do with this kind of model is to do a similar thing as we did for Stable Diffusion, which helped everyone in the world to become an artist. We’d like to do the same thing with the StableCode model: basically allow anyone that has good ideas [and] maybe has a problem, to be able to write a program that would just fix that problem.”

The quality of an LLM depends heavily on its training data, and for StableCode that data comes from the BigCode project. Stability AI is not the first to build on BigCode: Hugging Face and ServiceNow released the open StarCoder LLM, which is also based on BigCode, in May.

Nathan Cooper, Lead Research Scientist at Stability AI, explained that the team refined and cleaned the BigCode data further before training. “We love BigCode, they do amazing work around data governance, model governance and model training,” Cooper said. “We took their datasets and we applied additional filters for quality and also for constructing the large-context-window version of the model, and then we trained it on our cluster.”
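The article does not say which additional quality filters Stability AI applied. As a purely illustrative sketch, the Python below shows the kind of heuristics often used to clean public code corpora: rejecting files with extremely long lines or a low share of alphanumeric characters. The function names and thresholds are assumptions, not StableCode's actual pipeline.

```python
# Hypothetical quality filter for source files. The thresholds are illustrative
# assumptions, not the filters Stability AI actually applied to BigCode data.


def passes_quality_filter(source: str,
                          max_line_len: int = 1000,
                          max_avg_line_len: float = 100.0,
                          min_alnum_frac: float = 0.25) -> bool:
    """Return True if a source file looks like ordinary hand-written code."""
    lines = source.splitlines()
    if not lines:
        return False  # empty files carry no training signal
    lengths = [len(line) for line in lines]
    # Very long lines often indicate minified or machine-generated code.
    if max(lengths) > max_line_len:
        return False
    if sum(lengths) / len(lengths) > max_avg_line_len:
        return False
    # A low share of alphanumeric characters suggests data blobs or ASCII art.
    visible = [c for c in source if not c.isspace()]
    if not visible:
        return False
    alnum_frac = sum(c.isalnum() for c in visible) / len(visible)
    return alnum_frac >= min_alnum_frac


def filter_corpus(files: dict[str, str]) -> dict[str, str]:
    """Keep only the files (path -> contents) that pass the heuristic filter."""
    return {path: text for path, text in files.items() if passes_quality_filter(text)}


if __name__ == "__main__":
    corpus = {
        "good.py": "def add(a, b):\n    return a + b\n",
        "minified.js": "var x=" + "1," * 2000 + "1;",  # one 4,008-character line
        "banner.txt": "=" * 50 + "\n" + "+" * 50 + "\n",  # no alphanumerics
    }
    print(sorted(filter_corpus(corpus)))  # ['good.py']
```

Line-length checks tend to catch minified or generated files, while the alphanumeric check catches data dumps and decorative text; a real pipeline would usually add further steps such as deduplication.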

Cooper added that Stability AI went beyond the BigCode starting point by training the model in successive stages on specific programming languages. “It follows a very similar approach [to what’s] done in the natural language domain, where you start off with pre-training a generalist model and then you fine-tune it on a special set of tasks, or in this case languages,” Cooper said.

The 16,000-token context window of the long-context version is StableCode’s headline feature, and Stability AI claims it is larger than that of any other model. The bigger window allows more complex and specialized code generation prompts and lets users work with a medium-sized code base spread across multiple files, which helps the model understand the existing code and generate new code that fits it.

Cooper said the longer window lets the model see more of a project at once. “You can use this longer context window to let the model know more about your code base and what other functions are defined in other files,” Cooper said. “So that when it does suggest code, it can be more tailor-made to your code base and to your needs.”
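To make Cooper's point concrete, here is a minimal Python sketch of packing other files from a code base into a single prompt ahead of the file being edited. It assumes a budget derived from the 16,000-token figure, a hypothetical reserve for the model's output, and a crude whitespace-based token count in place of StableCode's real tokenizer, so the numbers are only approximate. `build_prompt` and `count_tokens` are hypothetical helpers, not part of any StableCode API.

```python
# Sketch: pack other files from a code base into a prompt that fits a long
# context window. CONTEXT_TOKENS mirrors the 16,000-token figure reported for
# StableCode's long-context model; count_tokens is a crude stand-in for a real
# tokenizer (assumption), so the budget is only approximate.

CONTEXT_TOKENS = 16_000
RESERVED_FOR_OUTPUT = 1_000  # hypothetical room left for the model's completion


def count_tokens(text: str) -> int:
    """Rough token estimate: one token per whitespace-separated chunk."""
    return len(text.split())


def build_prompt(current_file: str, other_files: dict[str, str],
                 budget: int = CONTEXT_TOKENS - RESERVED_FOR_OUTPUT) -> str:
    """Prepend as many other files as fit in the budget, keeping the current
    file last so it sits right where the model continues writing."""
    remaining = budget - count_tokens(current_file)
    if remaining < 0:
        raise ValueError("current file alone exceeds the context budget")
    parts = []
    # Try smaller files first so more distinct definitions fit (a design choice).
    for path, text in sorted(other_files.items(), key=lambda kv: count_tokens(kv[1])):
        chunk = f"# File: {path}\n{text}\n"
        cost = count_tokens(chunk)
        if cost <= remaining:
            parts.append(chunk)
            remaining -= cost
    parts.append(current_file)
    return "\n".join(parts)


if __name__ == "__main__":
    repo = {
        "utils.py": "def slugify(s):\n    return s.lower().replace(' ', '-')\n",
        "huge_generated.py": "x = 0\n" * 20_000,  # far too large to include
    }
    prompt = build_prompt("from utils import slugify\n\ndef make_url(title):\n", repo)
    print("utils.py" in prompt, "huge_generated.py" in prompt)  # True False
```

Putting the current file last keeps it adjacent to where the model continues generating; an editor integration might instead rank files by relevance, for example by which modules the current file imports.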

Like most modern generative AI models, StableCode is built on the transformer neural network architecture. Rather than using ALiBi (Attention with Linear Biases) to encode token positions, as some comparable models do, StableCode uses rotary position embedding (RoPE).

Cooper said the choice reflects how code differs from natural language. ALiBi adds a penalty to attention scores that grows with the distance between two tokens, which favors recent context; that suits narrative text, but in code a definition far back in a file can matter as much as the line just above it. “I don’t think that coding lends itself to this idea of weighing the present more important than the past, so we use … RoPE, [which] does not have this sort of bias where you’re weighing the present more than the past.”
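The difference Cooper describes can be seen in a toy, single-head sketch in plain Python. It follows the published formulations of both methods (RoPE rotates pairs of query/key dimensions by an angle proportional to position, using the customary base of 10,000; ALiBi subtracts a penalty proportional to the query-key distance), but the vectors and the 0.5 slope are arbitrary, and this is not StableCode's implementation.

```python
import math

# Toy, single-head comparison of two ways to inject token positions into
# attention scores. Vectors, positions, and the ALiBi slope are arbitrary.


def rope(x: list[float], pos: int, base: float = 10_000.0) -> list[float]:
    """Rotary position embedding: rotate each pair (x[2i], x[2i+1]) by the
    angle pos * base**(-2i/d), where d is the vector length."""
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even dimension")
    out: list[float] = []
    for i in range(d // 2):
        angle = pos * base ** (-2 * i / d)
        c, s = math.cos(angle), math.sin(angle)
        a, b = x[2 * i], x[2 * i + 1]
        out += [a * c - b * s, a * s + b * c]
    return out


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def rope_score(q: list[float], k: list[float], q_pos: int, k_pos: int) -> float:
    """Attention logit with RoPE: position enters only by rotating q and k."""
    return dot(rope(q, q_pos), rope(k, k_pos))


def alibi_score(q: list[float], k: list[float], q_pos: int, k_pos: int,
                slope: float = 0.5) -> float:
    """Attention logit with ALiBi: no position embedding, but a penalty that
    grows linearly with how far back the key is (causal case, k_pos <= q_pos)."""
    return dot(q, k) - slope * (q_pos - k_pos)


if __name__ == "__main__":
    q = [0.3, -1.2, 0.8, 0.5]
    k = [1.0, 0.4, -0.7, 0.2]
    # RoPE: shifting both positions by the same amount leaves the score unchanged.
    print(math.isclose(rope_score(q, k, 10, 3), rope_score(q, k, 510, 503)))  # True
    # ALiBi: the same query/key pair scores lower the farther back the key sits.
    print(alibi_score(q, k, 10, 9) > alibi_score(q, k, 10, 0))  # True
```

The first check shows that RoPE makes the score depend only on the relative offset between tokens, with no explicit distance penalty added (the rotated scores still vary with the offset); the second shows the linear penalty that, in Cooper's words, weighs the present more than the past. Real ALiBi uses a different fixed slope for each attention head.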

With StableCode now available, Stability AI is watching how developers receive and use it, and the company says it is eager to work with the developer community on new directions in generative software development.

Conclusion:

Stability AI’s StableCode is a notable step for open code generation models. With three tiers, a 16,000-token context window, and RoPE-based position encoding, it has the potential to change how developers approach software creation, giving them an open tool for generating code across several programming languages.