- Relational databases are crucial for sectors like e-commerce, healthcare, and social media, but their predictive potential is often underutilized due to complex table management.

- Traditional methods flatten relational data, losing predictive information and requiring complex, error-prone data extraction pipelines.

- Manual feature engineering is labor-intensive, prone to errors, and limits the scalability of predictive models.

- RelBench, developed by Stanford University, Kumo.AI, and the Max Planck Institute for Informatics, introduces a new benchmark for deep learning on relational databases.

- RelBench converts relational data into graph representations, utilizing Graph Neural Networks (GNNs) to capture complex relational patterns.

- RDL models outperformed traditional methods, reducing human effort and code complexity by over 90%.

- RDL models achieved higher accuracy and efficiency in predictive tasks, including entity classification and recommendation.

Main AI News:

Relational databases are essential to modern digital infrastructures, playing a pivotal role in sectors such as e-commerce, healthcare, and social media. Their structured, table-based format streamlines data storage and access through robust query languages like SQL. These databases are fundamental for data management, supporting a broad spectrum of digital operations by efficiently organizing and retrieving critical data. Despite their significance, the depth of relational information is often not fully leveraged due to the complexities involved in managing interconnected tables.

The challenge with relational databases lies in extracting meaningful predictive signals from the complex relationships between tables. Traditional approaches often flatten relational data into simpler structures, typically a single table, which results in a significant loss of predictive information and requires elaborate data extraction pipelines. These pipelines are prone to errors, add to software complexity, and demand extensive manual intervention. Hence, there is an urgent need for methods that can fully utilize the relational nature of the data without oversimplifying it.

Current methods for handling relational data heavily rely on manual feature engineering. Data scientists manually convert raw data into formats suitable for machine learning models, a process that is not only labor-intensive but also prone to inconsistencies and errors. This approach limits the scalability of predictive models, as every new dataset or task involves considerable rework. Although manual feature engineering is still prevalent, it is inefficient and fails to fully exploit the predictive capabilities of relational databases.

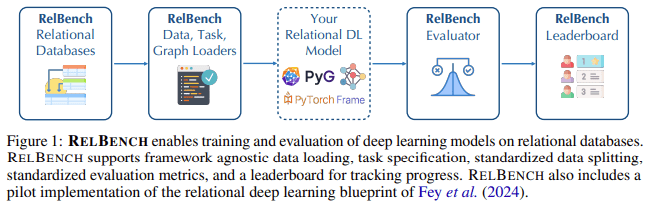

In response to these challenges, researchers from Stanford University, Kumo.AI, and the Max Planck Institute for Informatics have unveiled RelBench, a novel benchmark designed to advance deep learning on relational databases. RelBench aims to standardize the evaluation of deep learning models across different domains and scales. It offers a comprehensive framework for developing and assessing relational deep learning (RDL) techniques, allowing researchers to benchmark their models against consistent standards.

RelBench innovates by transforming relational databases into graph representations, enabling the application of Graph Neural Networks (GNNs) for predictive analytics. This involves constructing a heterogeneous temporal graph where nodes represent entities and edges illustrate relationships. Initial node features are derived from deep tabular models that accommodate various data types, including numerical, categorical, and text data. The GNN updates these node embeddings based on their connections, capturing complex relational patterns effectively.

In comparative studies, RDL methods demonstrated superior performance compared to traditional manual feature engineering techniques. RDL models consistently matched or exceeded the accuracy of manually engineered models while significantly reducing human effort and code complexity by over 90%. For example, RDL models achieved AUROC scores of 70.45% for user churn and 82.39% for item churn in entity classification tasks, outperforming the conventional LightGBM classifier.

In entity regression tasks, RDL models showed a reduction in Mean Absolute Error (MAE) for user lifetime value predictions by over 14%, highlighting their precision and efficiency. In recommendation tasks, RDL models realized exceptional improvements, with Mean Average Precision (MAP) scores increasing by over 300% in some cases. These findings illustrate the potential for RDL models to automate and enhance predictive tasks on relational databases, paving the way for new research and practical applications.

Conclusion:

RelBench represents a significant advancement in deep learning for relational databases by offering a standardized benchmark and innovative methodology. The use of Graph Neural Networks to handle relational data more effectively addresses the inefficiencies of traditional methods, leading to improved predictive accuracy and reduced manual effort. This development could enhance the scalability and applicability of machine learning models across various industries, potentially driving greater efficiency and innovation in data-driven decision-making processes.