TL;DR:

- Technological advancements empower neuroscience research, unlocking deeper insights into brain function and behavior.

- Large-scale neural recordings are crucial for understanding neuronal dynamics and computational function.

- Recent developments in recording modalities increase the need for efficient data analysis tools.

- Data-constrained recurrent neural networks (dRNNs) have been proposed for modeling these recordings, with real-time training as the ultimate goal.

- CORNN, or Convex Optimization of Recurrent Neural Networks, improves training speed and scalability.

- In simulated recordings, CORNN trains roughly 100 times faster than traditional optimization methods without compromising modeling accuracy.

- It was tested on simulated networks of thousands of cells performing tasks such as a 3-bit flip-flop, and it robustly reproduces attractor structures and network dynamics.

Main AI News:

Technological advances in neuroscience let scientists probe the relationship between brain function and behavior in living organisms more deeply than before. Central to this pursuit is the link between neuronal dynamics and computational function. To uncover the computations carried out by neuronal populations, researchers draw on vast datasets from large-scale neural recordings, obtained through optical imaging or electrophysiological methods.

Recent progress in recording modalities has made it possible to record from and manipulate a greater number of cells. This creates growing demand for theoretical and computational tools that can efficiently analyze the large datasets these recording techniques produce. Manually constructed network models have served their purpose, but they are inadequate for the massive datasets of contemporary neuroscience.

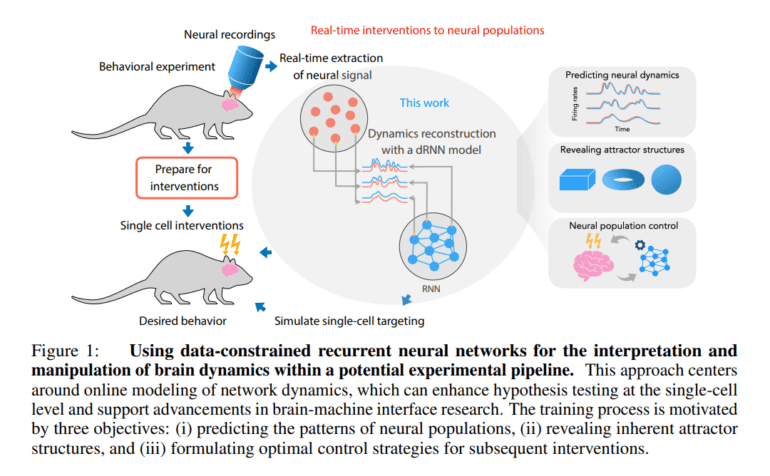

To extract computational principles from these extensive datasets, researchers have proposed training data-constrained recurrent neural networks (dRNNs), which are recurrent networks fitted directly to recorded neural activity. The ultimate goal is to train them in real time. That would open the door to medical applications and to research methods that model and control interventions at single-cell resolution in order to influence specific animal behaviors. However, current dRNN training methods are inefficient and scale poorly, which is a significant obstacle. Even in offline settings, this limitation hampers the analysis of large brain recordings.

To overcome these hurdles, a team of researchers has introduced a training technique called Convex Optimization of Recurrent Neural Networks (CORNN). By eliminating inefficiencies inherent in conventional optimization methods, CORNN aims to improve training speed and scalability. In simulated recording experiments, CORNN trained approximately 100 times faster than traditional optimization techniques while matching, and in some cases improving, modeling accuracy.
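
To see why a convex formulation can help, consider the minimal sketch below. It is not the CORNN algorithm, whose details this article does not give. It assumes a leaky tanh firing-rate model, `r[t+1] = (1 - alpha) * r[t] + alpha * tanh(W @ r[t])`, with fully observed, noise-free rates; the function names, `alpha`, and `ridge` are hypothetical choices made for illustration. Under those assumptions, fitting the weights `W` to recorded activity reduces to a ridge regression, which is convex and has a closed-form solution.

```python
import numpy as np

def simulate_rnn(W, r0, steps, alpha=0.1):
    """Generate rates from an assumed leaky tanh RNN (illustrative model)."""
    rates = np.empty((steps + 1, W.shape[0]))
    rates[0] = r0
    for t in range(steps):
        rates[t + 1] = (1 - alpha) * rates[t] + alpha * np.tanh(W @ rates[t])
    return rates

def fit_weights_convex(rates, alpha=0.1, ridge=1e-6):
    """Fit W by ridge regression on one-step transitions (hypothetical helper)."""
    X = rates[:-1]
    # Under the assumed model, d equals tanh(W @ r[t]) for each time step.
    d = (rates[1:] - (1 - alpha) * X) / alpha
    d = np.clip(d, -1 + 1e-9, 1 - 1e-9)  # keep arctanh finite
    Z = np.arctanh(d)                    # now Z = X @ W.T, linear in W
    n = X.shape[1]
    # Closed-form minimizer of ||X @ W.T - Z||^2 + ridge * ||W||^2.
    W_transposed = np.linalg.solve(X.T @ X + ridge * np.eye(n), X.T @ Z)
    return W_transposed.T

rng = np.random.default_rng(0)
n = 20
W_true = rng.normal(0.0, 1.5 / np.sqrt(n), size=(n, n))
rates = simulate_rnn(W_true, rng.uniform(-1, 1, n), steps=2000)
W_hat = fit_weights_convex(rates)

# Check how well the fitted weights reproduce each recorded transition.
pred = 0.9 * rates[:-1] + 0.1 * np.tanh(rates[:-1] @ W_hat.T)
one_step_error = np.max(np.abs(pred - rates[1:]))
print(one_step_error)
```

Because the objective is a least-squares problem, it has a single global minimum and needs no iterative backpropagation through time. That is the general kind of property a convex approach can exploit, although the loss and solver CORNN actually uses may differ from this sketch.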

The team evaluated CORNN on simulations of thousands of cells performing basic computations, such as producing a timed response or implementing a 3-bit flip-flop, which suggests that it can handle demanding network tasks. The researchers also emphasize CORNN’s robustness in replicating attractor structures and network dynamics. It remains accurate under challenges such as variations in neural time scales, heavy subsampling of the observed neurons, and mismatches between the generator and inference models.
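
For readers unfamiliar with the 3-bit flip-flop benchmark, here is a minimal sketch of how its data are commonly generated. Each of three input channels receives occasional +1 or -1 pulses, and the matching output must hold the sign of the most recent pulse on its channel. The function name, `pulse_prob`, and the initial output of zero before any pulse arrives are illustrative assumptions, not details taken from the CORNN work.

```python
import numpy as np

def flip_flop_data(steps, bits=3, pulse_prob=0.05, seed=0):
    """Generate input pulses and memory targets for a flip-flop task."""
    rng = np.random.default_rng(seed)
    # Each entry is -1 or +1 with probability pulse_prob / 2 each, else 0.
    pulses = rng.choice(
        [-1.0, 0.0, 1.0],
        size=(steps, bits),
        p=[pulse_prob / 2, 1 - pulse_prob, pulse_prob / 2],
    )
    targets = np.zeros_like(pulses)
    state = np.zeros(bits)  # assumed: outputs start at 0 until the first pulse
    for t in range(steps):
        # A nonzero pulse overwrites the stored bit; otherwise the bit is held.
        state = np.where(pulses[t] != 0, pulses[t], state)
        targets[t] = state
    return pulses, targets

pulses, targets = flip_flop_data(steps=500)
print(pulses.shape, targets.shape)
```

A network that solves this task must store three independent bits, so its dynamics are expected to contain eight stable states. That makes the task a convenient test of whether a fitted model reproduces attractor structure.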

Conclusion:

With its training efficiency and robustness, CORNN marks a significant advance for neuroscience research. By enabling faster and more accurate analysis of large-scale neural recordings, it could open new possibilities in medical applications and research methodologies. Its adaptability positions it as a potential game-changer in machine learning for neuroscience.